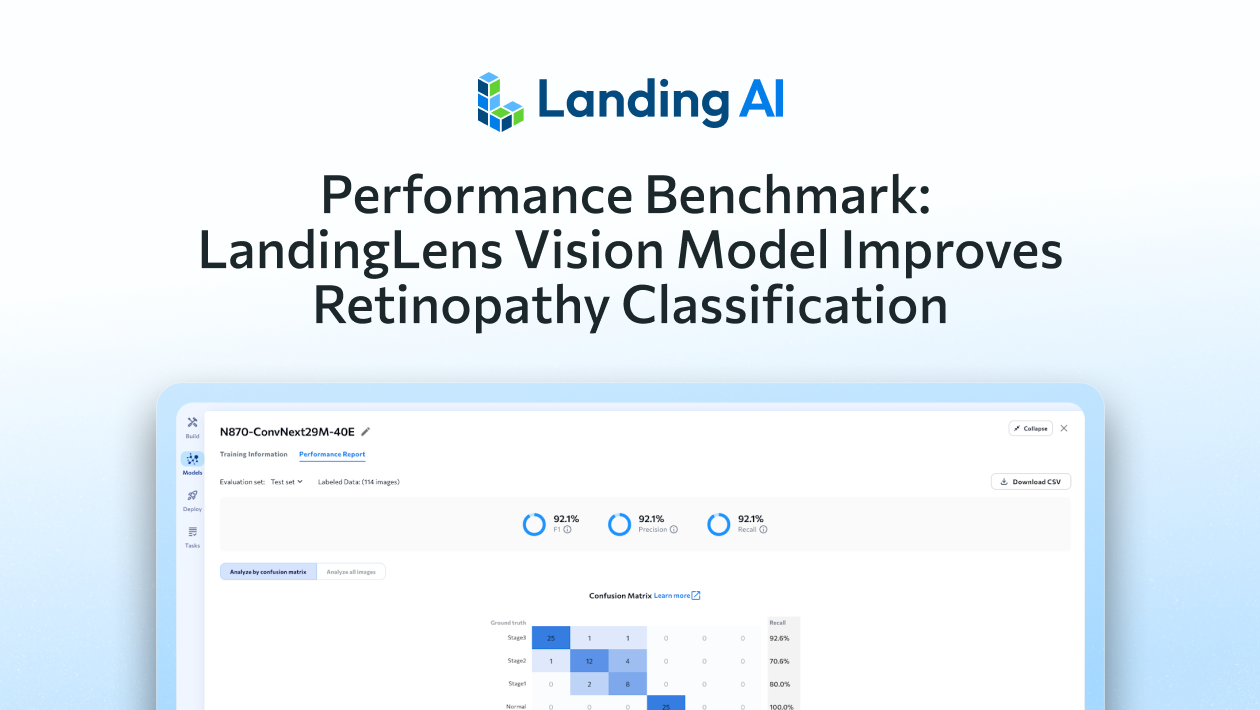

This article shares the results of a benchmarking study conducted with an open-access fundus image dataset. Using the same starting images, same partitions, and same ground truth labels, LandingLens produced a multi-class classification model with an F1 score of 92.1%. This is a substantial improvement over the best F1 score of 83% reported by the authors who published the dataset. This result was achieved in approximately 2 hours of work in LandingLens.

Retinopathy of Prematurity (ROP) is a potentially blinding eye disorder that primarily affects premature infants. ROP occurs due to abnormal growth of blood vessels in the retina. In premature babies, the retina’s blood vessels are not fully developed at birth. When exposed to high oxygen levels (such as in neonatal intensive care units), these vessels may grow abnormally, leading to complications.

ROP is classified into five stages, ranging from mild (Stage 1) to severe (Stage 5):

- Stage 1 & 2: Mild to moderate abnormal blood vessel growth; usually resolves on its own.

- Stage 3: Severe abnormal vessel growth; may need treatment.

- Stage 4: Partial retinal detachment.

- Stage 5: Complete retinal detachment leading to blindness.

In May 2024, sixteen authors affiliated with the Shenzhen Eye Institute, Macao Polytechnic University, New York University, and the Zhongshan Ophthalmic Center published an open-access dataset of 1099 images from 483 premature infants. We sincerely thank them for their painstaking work gathering these images and for making them publicly available.

In their paper, “A fundus image dataset for intelligent retinopathy of prematurity system,” the authors share performance metrics from four AI vision models. This figure is a reproduction of Table 2, “The classification results of AI models,” from their paper. Notice that the best F1 score achieved is 82.81 in row 1, which is the figure rounded to 83% elsewhere in this article. Notice also the performance of the ConvNeXt-T model architecture in row 3. Of the four methods in the table, this one is the most similar to the LandingLens backbone architecture used in our benchmark tests.

Process Overview

We downloaded the dataset and examined whether the authors had provided specific partitions for the 1099 images. Discovering that they had not, we proceeded to upload the images to LandingLens in bulk without assigning partitions.

During the upload and data registration process, LandingLens detected seven images as duplicates, so our findings use 1092 unique images. LandingLens can detect duplicate images even if they have different filenames.
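For readers who want to check a local copy of the dataset for duplicates before uploading, here is a minimal sketch in Python. It is not how LandingLens detects duplicates (the article does not describe that mechanism); it simply groups files by a SHA-256 hash of their bytes, so it only catches byte-identical copies under different filenames. The function names `file_digest` and `find_duplicates` are hypothetical.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file's bytes."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        # Read in chunks so large images do not need to fit in memory at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(folder: Path) -> list[list[Path]]:
    """Group files under `folder` whose contents are byte-identical."""
    groups = defaultdict(list)
    for path in sorted(folder.rglob("*")):
        if path.is_file():
            groups[file_digest(path)].append(path)
    # Keep only digests shared by more than one file.
    return [paths for paths in groups.values() if len(paths) > 1]
```

Images that were re-encoded or resized would not match under this approach; catching those would need a perceptual hash or a similar image-level comparison.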

Using the Custom Training option, we configured an 80/10/10 ratio for the train, dev, and test sets. We selected a ConvNeXt model backbone, kept the images at 512 x 512 pixels, and selected four of the available data augmentations: horizontal flip, vertical flip, random rotate, and hue saturation value.
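To make the 80/10/10 idea concrete outside the platform, the sketch below assigns items to train, dev, and test splits in plain Python. It is an illustration only: the article does not say how LandingLens assigns images to splits, and the choice to split class by class (stratification), the fixed seed, and the name `stratified_split` are all assumptions made for this example.

```python
import random
from collections import defaultdict


def stratified_split(labels, ratios=(0.8, 0.1, 0.1), seed=0):
    """Assign each item to 'train', 'dev', or 'test', class by class.

    `labels` maps an item id (for example, a filename) to its class label.
    Splitting within each class keeps class proportions similar across splits.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for item, label in labels.items():
        by_class[label].append(item)

    assignment = {}
    for label in sorted(by_class):
        items = sorted(by_class[label])  # sort first so the shuffle is reproducible
        rng.shuffle(items)
        n_train = round(ratios[0] * len(items))
        n_dev = round(ratios[1] * len(items))
        for i, item in enumerate(items):
            if i < n_train:
                assignment[item] = "train"
            elif i < n_train + n_dev:
                assignment[item] = "dev"
            else:
                assignment[item] = "test"  # whatever remains goes to test
    return assignment
```

Because rounding happens per class, the overall split sizes can differ slightly from an exact 80/10/10 of the full dataset when class counts are not multiples of ten.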

The custom configuration took about 4 minutes to set up, and the actual model training ran for 9 minutes using cloud GPUs provided by LandingAI to users of the web-based version of LandingLens.

Screenshot 1: Data summary showing the distribution of labels and splits

Screenshot 2: Training configuration

Overview of Results

The LandingLens deep learning model performed well in this five-class classification task, achieving an F1 score of 99% on the training set, 96% on the development set, and 92% on the test set. The modest drop from the training set to the held-out test set shows that the model generalizes beyond the training data while maintaining high accuracy. This consistency across the three splits indicates that the model is well trained and capable of making reliable predictions, making it a strong candidate for real-world deployment.

Screenshot 3: Test set (N=114) confusion matrix

There were nine misclassifications in the test set, which appear as the off-diagonal entries in the confusion matrix. It is good to see that where the model makes errors, they are typically between adjacent stages: Stage 1<>Stage 2 or Stage 2<>Stage 3.
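The arithmetic behind these figures can be sketched in a few lines of Python. The helper below counts off-diagonal and adjacent-class errors in any square confusion matrix; the 3-class matrix it runs on is a toy with made-up counts, not the matrix from Screenshot 3. The last line uses only the two numbers stated in this article (114 test images, 9 misclassified). For single-label multi-class problems, micro-averaged F1 equals accuracy, and 105/114 is about 92.1%, which matches the reported score if that score is micro-averaged. That averaging method is an assumption; the article does not state it. The name `error_summary` is hypothetical.

```python
def error_summary(cm):
    """Summarize a square confusion matrix (rows = truth, cols = prediction).

    Assumes classes are ordered by severity, so |i - j| == 1 means the
    predicted class is adjacent to the true class.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    adjacent = sum(
        cm[i][j] for i in range(n) for j in range(n) if abs(i - j) == 1
    )
    return {
        "total": total,
        "errors": total - correct,    # sum of all off-diagonal entries
        "adjacent_errors": adjacent,  # errors one class away from the truth
        "accuracy": correct / total,
    }


# Toy 3-class matrix with made-up counts (NOT the matrix in Screenshot 3).
toy = [
    [10, 1, 0],
    [2, 8, 1],
    [1, 0, 7],
]
summary = error_summary(toy)

# Figures stated in the article: 114 test images, 9 misclassified.
test_accuracy = (114 - 9) / 114
print(f"{test_accuracy:.1%}")
```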

There is a detailed description of the data labeling process in the original Nature article. The publication states that, “It is worth noting that the classification result of each image may not be fully consistent with that by different ophthalmologists around the world even after going through the aforementioned steps. Even if all the ophthalmologists use the same ICROP criteria to classify fundus images, different ophthalmologists may provide different classification results using their visual judgment. Inconsistency of annotators in classifying Stage 1 and 2 ROP was most evident.”

In light of this statement, we viewed the confusion matrix for the training data and found that Stage 1<>Stage 2 was the only type of misclassification. Because this is the same pair of stages on which the annotators themselves disagreed most, it gave us added confidence in the quality of the model.

The LandingLens user interface makes it easy to review misclassifications with expert annotators.

Screenshot 4: Four test set images for which the ground truth is Stage 2 and the prediction is Stage 1

Summary

This benchmarking study demonstrates the effectiveness of LandingLens for a specific life sciences use case: Retinopathy of Prematurity (ROP). Using the LandingLens Custom Training option with a ConvNeXt model backbone, we created a five-class classification model with an F1 score of 92.1%, a significant improvement over the previous best-reported F1 score of 83% in the dataset’s original publication. This result was obtained with only about 2 hours of work within the LandingLens platform.

The model is deployable to a cloud endpoint or can be run on an edge device.

Try LandingLens for free at app.landing.ai.