Agenda

- Use BeautifulSoup to locate the “Consolidated Financial Statements” PDF link on Apple’s FY2024 Q1 press‑release page.

- Use the ADE parse function from the Python library to extract key metrics—total revenue, net income and diluted EPS—from that PDF.

- Analyse and display the extracted data, calculating ratios like profit margin.

- Provide an end‑to‑end, runnable Jupyter notebook that automates this entire workflow.

Introduction

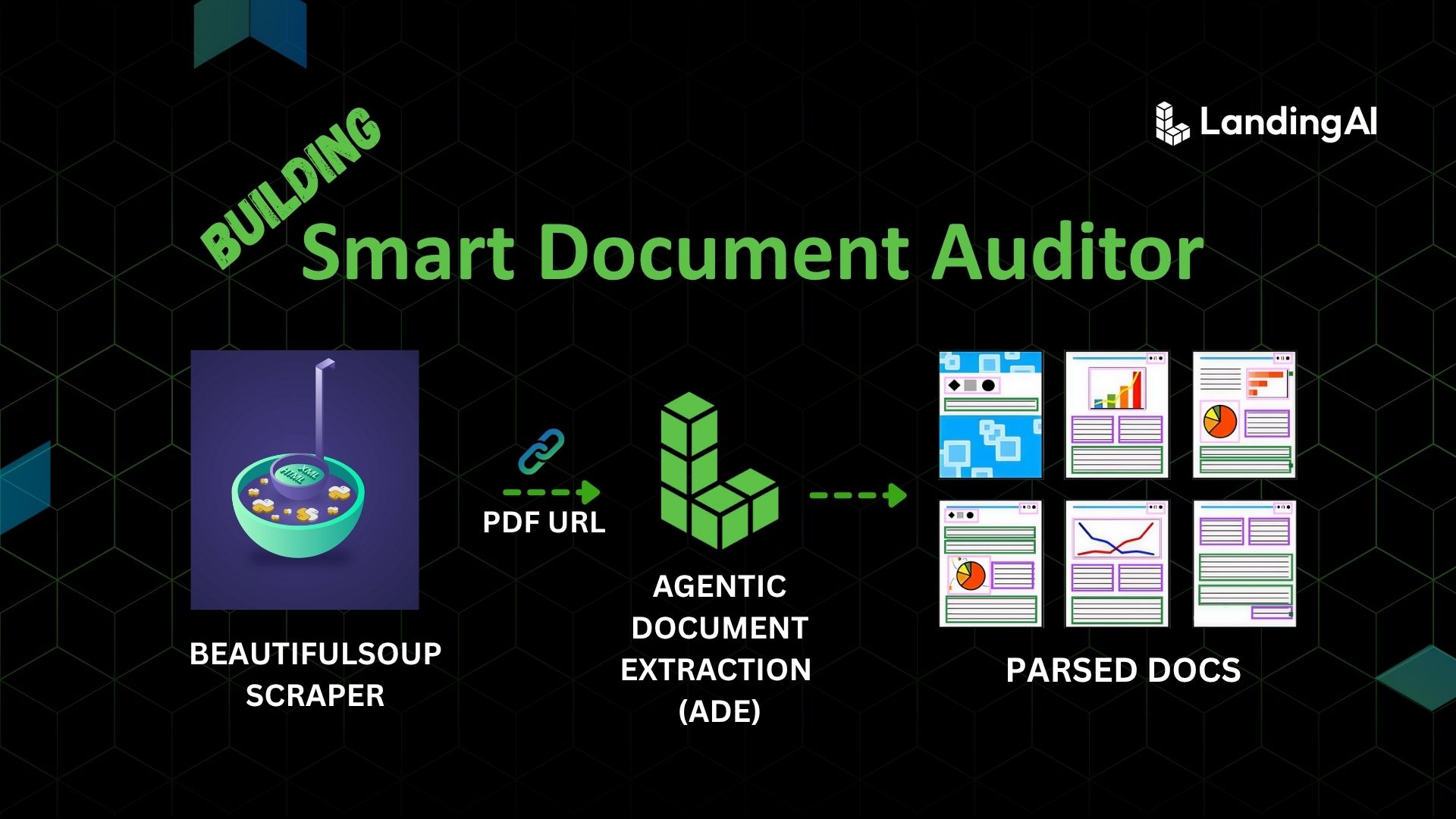

Public companies in the United States must file an annual Form 10‑K with the U.S. Securities and Exchange Commission (SEC). The 10‑K is a comprehensive financial report that includes a company’s history, organizational structure, financial statements, earnings per share and other disclosures[1]. These filings provide investors with a detailed snapshot of a company’s operations but are often hundreds of pages long and difficult to parse manually[2]. In this post we build a Smart Document Auditor that combines two Python tools—BeautifulSoup for locating relevant documents on the web and LandingAI’s Agentic Document Extraction (ADE) for extracting structured data from PDFs—to automate the retrieval and analysis of financial reports.

The SEC’s EDGAR database exposes 10‑K filings, but direct PDF versions of the latest reports are not always available; some filings are presented as interactive HTML (iXBRL) pages. To simplify our demonstration we select a financial report that is already published as a PDF: Apple Inc.’s FY2024 Q1 Consolidated Financial Statements. This document, available on Apple’s investor relations site as a PDF, contains condensed consolidated statements of operations, balance sheets and cash flows. By targeting a publicly accessible PDF we avoid the complexity of rendering interactive HTML while still demonstrating the end‑to‑end pipeline.

Why automate financial statement analysis?

Manual review of annual or quarterly reports is time‑consuming and prone to human error. Analysts must sift through pages of narrative and tables to locate key metrics, making it difficult to compare performance across periods or companies. Automating the extraction of critical data points accelerates due diligence, reduces mistakes and frees analysts to focus on interpretation rather than transcription. A smart auditing pipeline can also flag outliers across filings or highlight trends over time.

BeautifulSoup: Navigating HTML with ease

BeautifulSoup is a lightweight Python toolkit for pulling data out of HTML or XML. You can plug in your preferred parser (lxml, html.parser, or html5lib) and treat the markup as a navigable tree. Once the page is wrapped in a BeautifulSoup object, helper methods like find() and find_all() let you locate elements, filter by tag or attribute, and extract text or attribute values without brittle string manipulation. Other HTML-parsing options exist (lxml’s etree API, selectolax, Scrapy’s parsel, or full browser-automation stacks like Selenium and Playwright), but for this tutorial we only need to discover a single PDF link before handing the file to ADE. BeautifulSoup hits the sweet spot: familiar, dependency-light, and powerful enough without the overhead of heavier frameworks[3].

Here’s a simple example that constructs a small HTML snippet, parses it with BeautifulSoup and prints every hyperlink:

from bs4 import BeautifulSoup

html_doc = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="title"><b>The Dormouse's story</b></p>
<p class="story">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
</p>
</body></html>"""

soup = BeautifulSoup(html_doc, "html.parser")

for link in soup.find_all("a"):
    print(link.get("href"))  # prints each URL

In our smart auditor pipeline we use BeautifulSoup to discover the download links for financial reports on a company’s investor relations page or the SEC’s EDGAR site. Once we identify the correct PDF URL, we can hand off the document to ADE for deeper analysis.
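As a minimal sketch of that discovery step, the snippet below keeps only anchors whose href ends in .pdf and resolves relative paths against the page URL with urljoin. The HTML fragment, the example.com URLs and the helper name find_pdf_links are illustrative assumptions, not the markup of any real investor relations page.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Hypothetical page fragment; real investor-relations pages will differ.
SAMPLE_HTML = """
<ul>
  <li><a href="/reports/q1-statements.pdf">View PDF</a></li>
  <li><a href="https://example.com/press/q1.html">Press release</a></li>
  <li><a href="https://cdn.example.com/docs/annual.PDF">Annual report</a></li>
  <li><a>Anchor without href</a></li>
</ul>
"""


def find_pdf_links(html: str, base_url: str) -> list[str]:
    """Return absolute URLs for every anchor whose href ends in .pdf."""
    soup = BeautifulSoup(html, "html.parser")
    links = []
    # href=True skips anchors that have no href attribute at all.
    for anchor in soup.find_all("a", href=True):
        href = anchor["href"]
        if href.lower().endswith(".pdf"):
            # urljoin resolves relative hrefs and leaves absolute URLs unchanged.
            links.append(urljoin(base_url, href))
    return links


pdf_links = find_pdf_links(SAMPLE_HTML, "https://example.com/investor/")
for url in pdf_links:
    print(url)
```

Matching on the file extension is a deliberately simple rule; a link that carries a query string after .pdf would need a check on the parsed URL path instead.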

Agentic Document Extraction (ADE): Turning PDFs into structured data

Agentic Document Extraction is LandingAI’s tool for extracting structured information from visually complex documents such as PDFs and scanned images. The ADE Python library wraps LandingAI’s API and supports parsing local files, raw bytes or URLs; it returns both Markdown and structured chunks annotated with bounding boxes[4]. The features most relevant to this tutorial are:

- Multiple input types: You can parse local PDFs, images or a URL that points directly to a PDF file[4].

- Field extraction: Define a Pydantic model describing the fields you want (e.g. revenue, net income) and pass it to parse(); ADE populates the model with extracted values[5].

- Error handling & pagination: ADE automatically splits large PDFs into manageable pieces, retries failed requests and stitches the results together[6].

- Connectors: Built‑in connectors allow you to process entire directories, Google Drive folders or S3 buckets with a single call[7].

You install the library via pip install agentic-doc and set the environment variable VISION_AGENT_API_KEY with your LandingAI key. Then call parse() on a file path or URL to obtain a list of ParsedDocument objects containing Markdown, structured chunks and any extracted fields.
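Because the key lives in an environment variable, it can stay out of the notebook itself. The sketch below shows one way to fail early when the variable is missing and to log the key safely; the helper names require_api_key and mask are hypothetical and not part of agentic-doc.

```python
import os


def require_api_key(var_name: str = "VISION_AGENT_API_KEY") -> str:
    """Return the key stored in the environment, or raise a clear error."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it in your shell before "
            "calling parse()."
        )
    return key


def mask(secret: str, visible: int = 4) -> str:
    """Hide all but the last few characters so the key is safe to log."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


try:
    print("Using key:", mask(require_api_key()))
except RuntimeError as err:
    print("Configuration problem:", err)
```

Running a check like this at the top of a notebook turns a missing key into an immediate, readable error rather than a failed API call later on.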

Quick Tip: You can save and display the associated chunks to verify that the extracted fields are visually grounded in the PDF, which improves trust and reliability.

Building the Smart Document Auditor

With our tools in place, the auditing pipeline consists of three steps:

- Locate the report: Use requests and BeautifulSoup to navigate to the company’s investor relations page and identify the correct PDF link. In our example we know the URL in advance: https://www.apple.com/newsroom/pdfs/fy2024-q1/FY24_Q1_Consolidated_Financial_Statements.pdf, which contains Apple’s FY24 Q1 consolidated financial statements.

- Download or link the PDF: You can download the report with requests or pass the URL directly to ADE, because this link points to a PDF. Downloading the file is useful if you want to archive a local copy or if network calls are restricted.

- Extract fields with ADE: Define a Pydantic model for the metrics you care about—total revenue, net income and diluted earnings per share. Call parse() with the PDF path or URL and pass the model via the extraction_model argument. ADE returns the populated model along with the document’s text and structured representation.
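For the second step, a download helper might look like the sketch below. It assumes the requests package; the function names, the reports directory, the timeout and the %PDF- header check are illustrative choices rather than anything the pipeline requires.

```python
from pathlib import Path
from urllib.parse import urlparse

import requests


def filename_from_url(url: str, default: str = "report.pdf") -> str:
    """Derive a local file name from the last path segment of the URL."""
    name = urlparse(url).path.rsplit("/", 1)[-1]
    return name or default


def looks_like_pdf(data: bytes) -> bool:
    """PDF files begin with the magic bytes %PDF-."""
    return data.startswith(b"%PDF-")


def download_pdf(url: str, dest_dir: str = "reports", timeout: float = 30.0) -> Path:
    """Fetch the PDF and store it under dest_dir, returning the saved path."""
    resp = requests.get(url, timeout=timeout)
    resp.raise_for_status()
    # Guard against HTML error pages served with a 200 status.
    if not looks_like_pdf(resp.content):
        raise ValueError(f"{url} did not return a PDF")
    target = Path(dest_dir) / filename_from_url(url)
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(resp.content)
    return target


# Example call (requires network access):
# local_path = download_pdf(
#     "https://www.apple.com/newsroom/pdfs/fy2024-q1/"
#     "FY24_Q1_Consolidated_Financial_Statements.pdf"
# )
```

The returned path can then be handed to parse() in place of the URL, which keeps an archived copy alongside the extracted data.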

Example pipeline

The following code illustrates an end‑to‑end pipeline for our example report. It shows how to use BeautifulSoup to find the PDF URL on the page, perform field extraction with the parse function from the agentic-doc library, and analyse the extracted data:

from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

press_release_url = "https://www.apple.com/newsroom/2024/02/apple-reports-first-quarter-results/"

# Identify the client; some servers reject requests without a User-Agent
headers = {
    "User-Agent": "SmartDocumentAuditor/1.0 (ankit.khare@landing.ai)",
    "Accept-Language": "en-US,en;q=0.9",
}

resp = requests.get(press_release_url, headers=headers, timeout=30)
resp.raise_for_status()

soup = BeautifulSoup(resp.text, "html.parser")

# Take the first link labelled "View PDF"; on this page it is assumed to be
# the one under the 'Consolidated Financial Statements' heading
pdf_link = None
for link in soup.find_all("a", href=True):
    if "View PDF" in link.get_text(strip=True):
        pdf_link = link["href"]
        break

# Stop here if nothing matched, so later cells never see an undefined pdf_url
if pdf_link is None:
    raise RuntimeError("Could not find the PDF link.")

# urljoin resolves a relative href against the page URL and leaves
# absolute URLs unchanged
pdf_url = urljoin(press_release_url, pdf_link)
print("Found PDF URL:", pdf_url)

# Example code for using ADE to extract financial metrics
import os

from agentic_doc.parse import parse
from pydantic import BaseModel, Field


class FinancialMetrics(BaseModel):
    total_revenue: float = Field(description="Total revenue in USD")
    net_income: float = Field(description="Net income in USD")
    diluted_eps: float = Field(description="Diluted earnings per share")


# Keep the API key out of the notebook: export VISION_AGENT_API_KEY in your
# shell before starting Jupyter, and fail early here if it is missing
if not os.environ.get("VISION_AGENT_API_KEY"):
    raise RuntimeError("VISION_AGENT_API_KEY is not set.")

# Parse the PDF directly from the URL
results = parse(pdf_url, extraction_model=FinancialMetrics)

# parse() returns a list of parsed documents; take the first one
metrics = results[0].extraction
print("Extracted metrics:", metrics)

# Access fields as attributes, not dict keys
revenue = metrics.total_revenue
net_income = metrics.net_income
eps = metrics.diluted_eps

profit_margin = net_income / revenue

# The statement reports figures in millions; the formatting below assumes
# the extracted values are in plain USD, so check the units before relying on it
print(f"Total revenue: ${revenue/1e9:.2f}B")
print(f"Net income: ${net_income/1e9:.2f}B")
print(f"Diluted EPS: {eps:.2f}")
print(f"Profit margin: {profit_margin:.2%}")

The FinancialMetrics model describes the numeric fields we want to capture. ADE’s parser examines the PDF, identifies the relevant tables and text and returns the populated model along with additional information such as confidence scores and bounding boxes. You can expand the model with more fields—cash flow from operations, research and development expense or any other item that appears in the statement—and ADE will extract them automatically.
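As a sketch of such an expansion, the model below adds two fields. The class name ExtendedFinancialMetrics, the field names operating_cash_flow and rd_expense and their descriptions are assumptions chosen for illustration, and the sample values are placeholders, not figures from Apple's statement.

```python
from pydantic import BaseModel, Field


class ExtendedFinancialMetrics(BaseModel):
    total_revenue: float = Field(description="Total revenue in USD")
    net_income: float = Field(description="Net income in USD")
    diluted_eps: float = Field(description="Diluted earnings per share")
    # Additional fields (hypothetical names); each description states
    # what the field is expected to hold.
    operating_cash_flow: float = Field(
        description="Cash generated by operating activities in USD"
    )
    rd_expense: float = Field(
        description="Research and development expense in USD"
    )


# Placeholder values, used only to show that the model validates its input.
sample = ExtendedFinancialMetrics(
    total_revenue=1000.0,
    net_income=250.0,
    diluted_eps=1.5,
    operating_cash_flow=300.0,
    rd_expense="75",  # numeric strings are coerced to float
)
print(sample.rd_expense)
```

The expanded model would be passed to parse() through the same extraction_model argument as FinancialMetrics.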

Analysing the results

After extracting the metrics you can compute ratios or compare them across periods. For example, dividing net income by total revenue yields a profit margin. You might compare the extracted values against analyst forecasts or previous quarters to identify trends. A smart auditor could loop through multiple filings, persist results to a database and generate dashboards for stakeholders.
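A minimal sketch of that comparison, using only the standard library, is shown here. The PeriodMetrics record, the helper names and the numbers are placeholders for illustration, not extracted values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PeriodMetrics:
    label: str
    total_revenue: float
    net_income: float


def profit_margin(m: PeriodMetrics) -> float:
    """Net income as a fraction of revenue."""
    if m.total_revenue == 0:
        raise ValueError(f"{m.label}: revenue is zero, margin undefined")
    return m.net_income / m.total_revenue


def pct_change(current: float, previous: float) -> float:
    """Relative change from the previous value to the current one."""
    if previous == 0:
        raise ValueError("previous value is zero, change undefined")
    return (current - previous) / previous


# Placeholder figures in USD, not taken from any filing.
prior = PeriodMetrics("Period A", total_revenue=200.0, net_income=40.0)
latest = PeriodMetrics("Period B", total_revenue=250.0, net_income=60.0)

for period in (prior, latest):
    print(f"{period.label}: margin {profit_margin(period):.2%}")

growth = pct_change(latest.total_revenue, prior.total_revenue)
print(f"Revenue change: {growth:+.2%}")
```

In a multi-filing loop, each extracted FinancialMetrics result could be converted into one such record before being persisted or charted.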

Conclusion

Form 10‑K filings and quarterly financial statements contain a wealth of information but can be daunting to analyse manually. By combining BeautifulSoup, a versatile HTML parsing library, with Agentic Document Extraction, we can build a Smart Document Auditor that automatically retrieves a report and extracts structured data for analysis. In this post we simplified the workflow by choosing a publicly available PDF, but the same approach applies to other reports. As AI‑driven document understanding evolves, such workflows will become indispensable for investors, auditors and analysts who need to process large volumes of financial data efficiently.

What’s Next?

- Explore and build further using the code for the tutorial available on GitHub

- Start building with the LandingAI Agentic Document Extraction Python library, and don’t forget to star us 🙂

- Jam with our Developer Community on Discord

- Interested in reading more? Browse our blog posts

References

[1] [2] 10-K: Definition, What’s Included, Instructions, and Where to Find It

https://www.investopedia.com/terms/1/10-k.asp

[3] Beautiful Soup Documentation — Beautiful Soup 4.4.0 documentation

https://beautiful-soup-4.readthedocs.io/en/latest

[4] [5] [6] [7] GitHub – landing-ai/agentic-doc: Python library for Agentic Document Extraction from LandingAI