What to Expect

This tutorial will show developers how to build a Visual AI application very quickly. We will write a Python program that detects produce items in a refrigerator and checks a supermarket flyer for applicable discounts. No prior computer vision experience is required.

We’ll be using VisionAgent, a generative AI app builder that helps developers – even those without a computer vision background – build visual applications easily and efficiently. Before diving into details, let’s take a moment to recap the challenges that engineers used to face when implementing vision tasks in their applications.

Background

Computer vision is a complex field of study due to the immense challenges in teaching machines to interpret and understand visual information in the way humans do. For most use cases, multiple intricate problems have to be solved simultaneously.

First, visual perception involves processing incredibly dense information. A single image contains millions of pixels, and each pixel represents nuanced color, texture, and spatial relationships that must be accurately interpreted by algorithms to rapidly distinguish objects, recognize patterns, and extract meaningful information from these pixel arrays. Second, objects can appear differently based on lighting, angle, partial obstruction, distance, and background context. A computer vision system must be robust enough to recognize a chair as a chair whether it’s viewed from the front, side, partially hidden, or in different lighting conditions. Third, semantic understanding goes beyond mere object detection. Advanced computer vision doesn’t just identify objects, but comprehends their relationships, potential actions, and contextual meaning. For instance, this would mean recognizing not just that an image contains a person and a bicycle, but understanding that the person is likely preparing to ride the bicycle.

Consequently, research and development in computer vision has consistently drawn from multidisciplinary approaches, integrating rigorous fields such as mathematics, computer science, optics, signal and image processing, cognitive science, statistics, and machine learning (ML), among others. Beyond technical proficiency, constructing effective computer vision systems demands an integrative methodology that synthesizes insights across these complex and interconnected disciplines, requiring deep experimental expertise and collaborative problem-solving.

Despite the availability of powerful tools like OpenCV and vision transformer-based Large Multi-Modal Models1, developers must still learn computer vision fundamentals and gain familiarity with the relevant SDKs and programming models to effectively integrate computer vision into domain-specific applications. In addition, organizations would also need to invest in significant computational resources as well as potentially have to obtain large training datasets. Nonetheless, thanks to advances in ML, this is changing rapidly, which will allow us to implement our vegetable detection and replenishment app very easily!

Let’s proceed to the hands-on portion of this tutorial. You are welcome to follow along by interacting with VisionAgent through its web UI and by reviewing the Python modules in the links referenced throughout the tutorial. The entire code repository will be also shared.

The README.md file provides the installation instructions (with Python 3.10 or higher).

Developing the “What’s in Your Fridge?” Application

We will write a Python program that detects produce items in a refrigerator and checks a supermarket flyer for applicable discounts. Hence, we will work with two images shown below:

- An image of produce items in the refrigerator; and

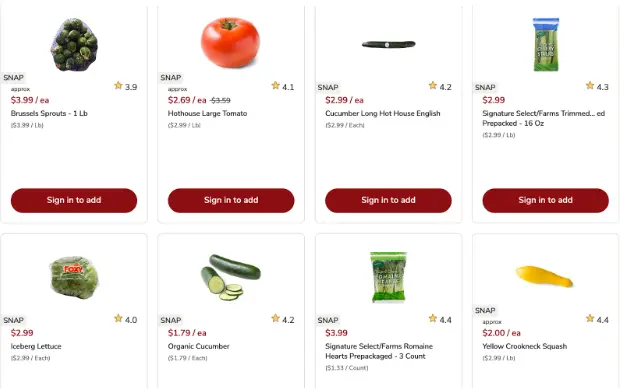

- A supermarket flyer image advertising the available discounts on produce.

Figure 1: Image of various foods, including produce items, in a refrigerator.

Figure 2: Image of a supermarket flyer circular, featuring sale prices on select produce items2.

Let’s use a design that breaks the problem down into two separate tasks and then combines their outputs as the third task:

- The first task is to detect the produce items in the refrigerator image (Figure 1) and return a dictionary, whose keys are the names of the vegetables (e.g., “tomatoes”, “onions”, etc.) with the values as their respective counts.

- The second task is to identify the sale price for each produce item in the specials flyer (Figure 2) and return a dictionary, whose keys are the names of the vegetables (e.g., “tomatoes”, “onions”, etc.) with the values as their respective discount prices.

- By this design, we would expect the sets of keys of both dictionaries to overlap, which would then enable us to report which items in the refrigerator are available on sale.

Intro to VisionAgent

VisionAgent makes it easy for developers without deep computer vision or AI backgrounds to solve vision tasks by providing a user-friendly interface to simply upload an image or a video and generate Visual AI code from a text prompt. All they would need to do is play with the generated code to get some intuition to zero in on the behavior they want, then test it, possibly adjust some thresholds, and do some code refactoring in order to integrate the modules directly into their production workflow.

Internally, VisionAgent utilizes agent frameworks, planning and reasoning constructs, and LLMs with tool calling, among other techniques to generate code to solve your computer vision task.

Using VisionAgent to Build Our App

We will interact with VisionAgent through its web UI, since it feels intuitive and easy to use. The inputs are an image3 and a text prompt, while the output is the information obtained from the image in accordance with the instructions given in the prompt. Importantly, the Python code that was executed by VisionAgent to generate the output is provided as well. We will save the Python modules generated for both tasks and adapt them to the needs of our application.

Hence, for each task, we will run through the following steps:

- Open the web UI of VisionAgent;

- Load an image and eyeball it to gain a “vibe” style understanding of what’s in it;

- Come up with a prompt that would cause the model to return these contents and paste the prompt into the UI; iterate on the prompt, until the output is as expected (or close).

- Click on the “Code” tab on the right and download the Python code to our local machine.

- Execute the code on our local machine and do any necessary testing and bug fixing.

As you review the steps outlined above, the first four are likely to be self-explanatory. However, step 5 may raise a question about its purpose and necessity. After all, wouldn’t we expect the agent, whose implementation relies on powerful LMMs (Large Multi-Modal Models) and LLMs (Large Language Models) to return “the correct answer” that “just works” that we could plug in? Actually, the situation is more nuanced, so please keep reading, and we will get to this shortly.

We are now ready to carry out these steps for each of the two tasks.

Task 1: Classification of Produce Items in the Refrigerator

We start by opening the VisionAgent web UI and uploading the image from Figure 1 into the appropriate field. We then examine what is displayed on the screen. We see romaine lettuce, different quantities of carrots, tomatoes, celery, mushrooms, and onions; plus some other stuff.

Now we want VisionAgent to classify the fridge’s contents and give us the code it came up with and executed for this assignment. The following prompt is chosen for this classification task4:

Identify and classify the different types of vegetables visible in the refrigerator, such as lettuce, carrots, and tomatoes. Focus on recognizing each vegetable and categorizing them based on their type.After a few seconds, the result appears on the screen:

I have analyzed the image and identified the vegetables in the refrigerator. Here's a summary of what I found: Vegetables found in the refrigerator: - Carrots: 2 - Tomatoes: 2 - Celery: 4 - Mushrooms: 1 I've also created an image with bounding boxes around the detected vegetables, which has been saved as 'vegetables_detected.png'. You can now view this image to see the visual representation of the detected vegetables.In addition, on the right hand side of the UI, we see the following output:

[{'vegetable_counts': {'lettuce': 0,

'carrots': 2,

'tomatoes': 2,

'celery': 4,

'mushrooms': 1,

'green onions': 0},

'summary': 'Vegetables found in the refrigerator:\n- Carrots: 2\n- Tomatoes: 2\n- Celery: 4\n- Mushrooms: 1\n',

'image_with_boxes': <a numpy array of bounding boxes>,dtype=uint8)}]A quick check of the agent’s output in relation to Figure 1 reveals that the agent did not detect the romaine lettuce. If the lettuce were obfuscated, then we could accept this omission. But the lettuce is recognized unambiguously. Hence, we will troubleshoot and fix the code to detect it.

To see the generated code, click on the “Code” tab on the right hand side of the VisionAgent web UI (next to the “Result” tab) and then the standard download icon to save the Python module to your local machine (or view vegetable_classifier.py shared here for convenience).

Next, we will test this module, troubleshoot and fix the issue with the missing lettuce, and also look for opportunities to improve code quality and robustness.

To test it, uncomment the line

# result = identify_vegetables(‘/home/user/gcrgkLJ_RefrigeratorContents11142024as01.png’)

(line 54), replace the generated argument with the path on your local machine to the image in Figure 1 (e.g., “workspace/RefrigeratorContents11142024as01.png“), and add the line print(result['vegetable_counts'])below to see the result. Then execute:

% python vegetable_classifier.pyin your terminal shell and confirm that the output matches the contents of the “vegetable_counts” dictionary above.

To investigate the reason for the missing lettuce, it is helpful to open vegetable_classifier.py in your favorite editor and do a quick code review. Scanning the identify_vegetables() method, we can summarize its essential functionality as the following pseudo code:

- Import the essential packages and libraries.

- Load the image into memory.

- Compose the list of basic class labels consisting of common vegetable names and combine them into a prompt.

- Call the method

owl_v2_image()with the image, prompt, and a tolerance parameterbox_thresholdas arguments, with thebox_thresholdvalue set to0.3(constant). - Parse the result by selecting the vegetables whose detection score exceeds the threshold of

0.3(same constant value as in the previous step).

To test our hypothesis that box_threshold may be impacting the sensitivity of the detection, we drill down into the imported vision_agent.tools module and scroll down to the definition of the owl_v2_image() method.

While OWLv25 is a very powerful computer vision object detection model6, what matters to us is that box_threshold plays a key role and has the default value of 0.1 (per line

box_threshold: float = 0.10, in the “tools.py” module).

Replacing the values of 0.3 with 0.1 for box_threshold throughout vegetable_classifier.py and rerunning it confirms that the lettuce is detected! Hence, we can now formally fix our identify_vegetables() method by including box_threshold (with the default value of 0.1) as an argument and using it instead of the constant floats. While at it, we can also delete the unused imports, make the code more efficient (replacing the dictionary initialization with a one-liner utilizing defaultdict), and add some basic error handling. These types of improvements require a minimal amount of effort, but they can significantly benefit the project by reducing maintenance costs. A revised version of vegetable_classifier.py is provided for reference, running which achieves the expected results.

Task 2: Detecting Sale Prices for Produce Items in the Flyer

We continue by creating a new chat in the VisionAgent web UI and uploading the image from Figure 2 (a supermarket circular). We can make a mental note that lettuce and tomatoes are among the produce items on sale. Recalling the contents of our refrigerator, this information will be useful for testing purposes.

Next, we prompt VisionAgent to scan the image and find deals on a sample list of vegetables:

Find vegetables in this image (e.g., lettuce, carrots, tomatoes, etc.) and record their prices. Return the results in JSON format.The agent goes to work and returns the following result:

Executing code.

Executing code.

Here are the vegetables found in the image along with their prices, sorted alphabetically: [ { "name": "Brussels Sprouts - 1 Lb", "price": "$3.99 / Lb" }, { "name": "Cucumber Long Hot House English", "price": "$2.99 / Each" }, { "name": "Hearts Prepackaged - 3 Count", "price": "$1.33/ Count" }, { "name": "Hothouse Large Tomato.", "price": "$2.69 / ea -$3.59" }, { "name": "Hothouse Large Tomato.", "price": "$2.99 / Lb" }, { "name": "Iceberg Lettuce", "price": "$2.99 / Each" }, { "name": "Organic Cucumber", "price": "$1.79/ea" }, { "name": "Organic Cucumber", "price": "$1.79 / Each" }, { "name": "Prepacked - 16 Oz", "price": "$2.99 / Lb" }, { "name": "Yellow Crookneck Squash", "price": "$2.99 / Lb" } ] Is there anything else you would like me to do with this data or any other questions about the image?The first thing we notice is that “Executing code.” appears twice. This is because VisionAgent employs a cycle containing a reasoning stage, an execution plan, and a judgement phase with feedback, which is repeated until the system deems its results as optimal. The rest is the list of vegetables and their sale prices in JSON format. The JSON output is also shown on the right:

['[\n {\n "name": "approx",\n "price": "$3.99 / ea"\n },\n {\n "name": "Hothouse Large Tomato.",\n "price": "$2.69 / ea -$3.59"\n },\n {\n "name": "SNAP",\n "price": "$2.99 / ea"\n },\n {\n "name": " SNAP",\n "price": "$2.99"\n },\n {\n "name": "Hothouse Large Tomato.",\n "price": "($2.99 / Lb)"\n },\n {\n "name": "Brussels Sprouts - 1 Lb",\n "price": "($3.99 / Lb)"\n },\n {\n "name": "Cucumber Long Hot House English",\n "price": "($2.99 / Each)"\n },\n {\n "name": "Prepacked - 16 Oz",\n "price": "($2.99 / Lb)"\n },\n {\n "name": "SNAP",\n "price": "$2.99"\n },\n {\n "name": "Organic Cucumber",\n "price": "$1.79/ea"\n },\n {\n "name": "SNAP",\n "price": "$3.99"\n },\n {\n "name": "approx",\n "price": "$2.00 / ea"\n },\n {\n "name": "Iceberg Lettuce",\n "price": "($2.99 / Each)"\n },\n {\n "name": "Organic Cucumber",\n "price": "($1.79 / Each)"\n },\n {\n "name": "Yellow Crookneck Squash",\n "price": "($2.99 / Lb)"\n },\n {\n "name": "Hearts Prepackaged - 3 Count",\n "price": "($1.33/ Count)"\n }\n]']The second observation is that some items repeat (e.g., “Hothouse Large Tomato” and “Organic Cucumber”), indicating that there is probably a bug somewhere in the generated code.

To start troubleshooting, click on the “Code” tab and then the download icon to save the Python module to your local machine (or view vegetable_price_detector.py shared for convenience).

To test the module, we uncomment the call to find_vegetables_and_prices() and replace its argument with the path on your local machine to the image in Figure 2 (e.g., “workspace/SafewayFlyer11142024as0.png“), and also uncomment the line print(result) below to see the result printed to standard output. Then execute:

% python vegetable_price_detector.pyin your terminal shell and confirm that the output matches the above from the VisionAgent UI.

Having verified the behavior of the code generated by the agent as baseline, we proceed to the code review. Scrolling down through find_vegetables_and_prices() in vegetable_price_detector.py, we immediately recognize the familiar object detection operation through the usage of the owl_v2_image() method. In addition, the image is processed by an OCR operation (stands for Optical Character Recognition) using the ocr() method, also imported from the vision_agent.tools module, to extract text containing the sale prices. The algorithm then computes the Euclidean distances between the bounding boxes of produce items and those of prices. The vegetable-price pairs having the shortest distance are deemed as the correct matches. However, as mentioned earlier, there is a bug with the implementation of this stopping criterion, causing duplicated prices to be associated with a given vegetable.

The fix turned out to be very simple. There is no need to maintain a list of prices for each vegetable. All we need to do is set the price if a minimum distance between the bounding box of the vegetable and that of a price exists (i.e., results[veg_name] = closest_price).

With this bug fix verified, we again parametrize find_vegetables_and_prices()with box_threshold (again using the default value of 0.1) and return a dictionary with cleaned up prices instead of the JSON object, since the dictionary format is better suited for our purposes.

We also pluralize “tomato” to read “tomatoes” so that the keys of the dictionaries returned by both modules used in our application are normalized. With those changes, a revised version of vegetable_price_detector.py is provided for reference and for verifying its behavior.

Note that we could make vegetable_price_detector.py more efficient by omitting the square root operation, since the squaring operation is non-negative and increasing and we just care about relative comparisons, not the actual distance. We welcome the readers to explore this and other improvement opportunities, while we turn our discussion to the final part: combining the two modules we have obtained with the help of VisionAgent in order to build our application.

Task 3: Integrating Both Scripts into an Application

Both of our scripts return dictionaries, whose keys are vegetable names. The two dictionaries can have keys in common by design. Thus, we can now write a main() program, which analyzes the images and prints out the names of produce items on sale and their deal price.

The main() program is provided in the replenish_veggies.py module. We use the argparse Python library to handle the passing of the two arguments, “--img_fridge_contents” and “--img_deals_flyer“, in any order. These images are then loaded from the WORKSPACE directory, specified by an environment variable.

We import identify_vegetables() and find_vegetables_and_prices() from their respective modules we developed with the help of VisionAgent for the previous tasks and execute them to obtain the vegetable_counts and the vegetable_deals dictionaries, respectively. We then loop through the vegetable_counts dictionary and print the sale price for the vegetables that are also found in the vegetable_deals dictionary. Here is the output:

You have 6 celery in your refrigerator. There are no specials on celery at Safeway today.

You have 1 lettuce in your refrigerator. SALE SPECIAL!!! You can get more lettuce for $3.99 at Safeway today.

You have 3 tomatoes in your refrigerator. SALE SPECIAL!!! You can get more tomatoes for $2.69 at Safeway today.

You have 4 mushrooms in your refrigerator. There are no specials on mushrooms at Safeway today.

You have 10 carrots in your refrigerator. There are no specials on carrots at Safeway today.

You have 2 green onions in your refrigerator. There are no specials on green onions at Safeway today.Now that we have our shopping list, we can head over to Safeway!

Conclusion

Until recently, developing computer vision applications required expertise across diverse scientific and technological disciplines, making it a challenging and costly endeavor. Today, using agentic workflows, developers can directly create AI solutions tailored to their specific operational needs using tools like VisionAgent, dramatically reducing time and technical barriers to implementation. Hence, it took us just a couple of hours to write an application that recognizes vegetables in one’s refrigerator and checks a supermarket flyer for deals on those items. Try building vision-enabled applications on VisionAgent for yourself, and… don’t forget to eat your veggies!

¹For example, open-weight models CLIP, BLIP, Qwen-VL, LLaVA; also certain commercial models.

² This image is copyright © Safeway, Inc.

³For other use cases, video clips up to 30 seconds in duration are also accepted as inputs.

⁴You are encouraged to experiment with different prompts and pick the best one for the use case.

⁵OWL stands for “Open-World Localization” (see https://huggingface.co/docs/transformers/en/model_doc/owlvit for background).

⁶See https://arxiv.org/abs/2306.09683 for the original research article and https://huggingface.co/docs/transformers/en/model_doc/owlv2 for general background and examples.