Your team processes hundreds of property insurance claims daily. Some arrive as PDFs, others as scanned images. One claim might list the policyholder’s name as “John Smith,” while another uses “Smith, John.” The data you need is in there somewhere, but getting it out consistently? That’s the challenge.

You need structured data—JSON objects with reliable field names and data types that your downstream systems can process. But documents don’t arrive structured. They arrive as chaos.

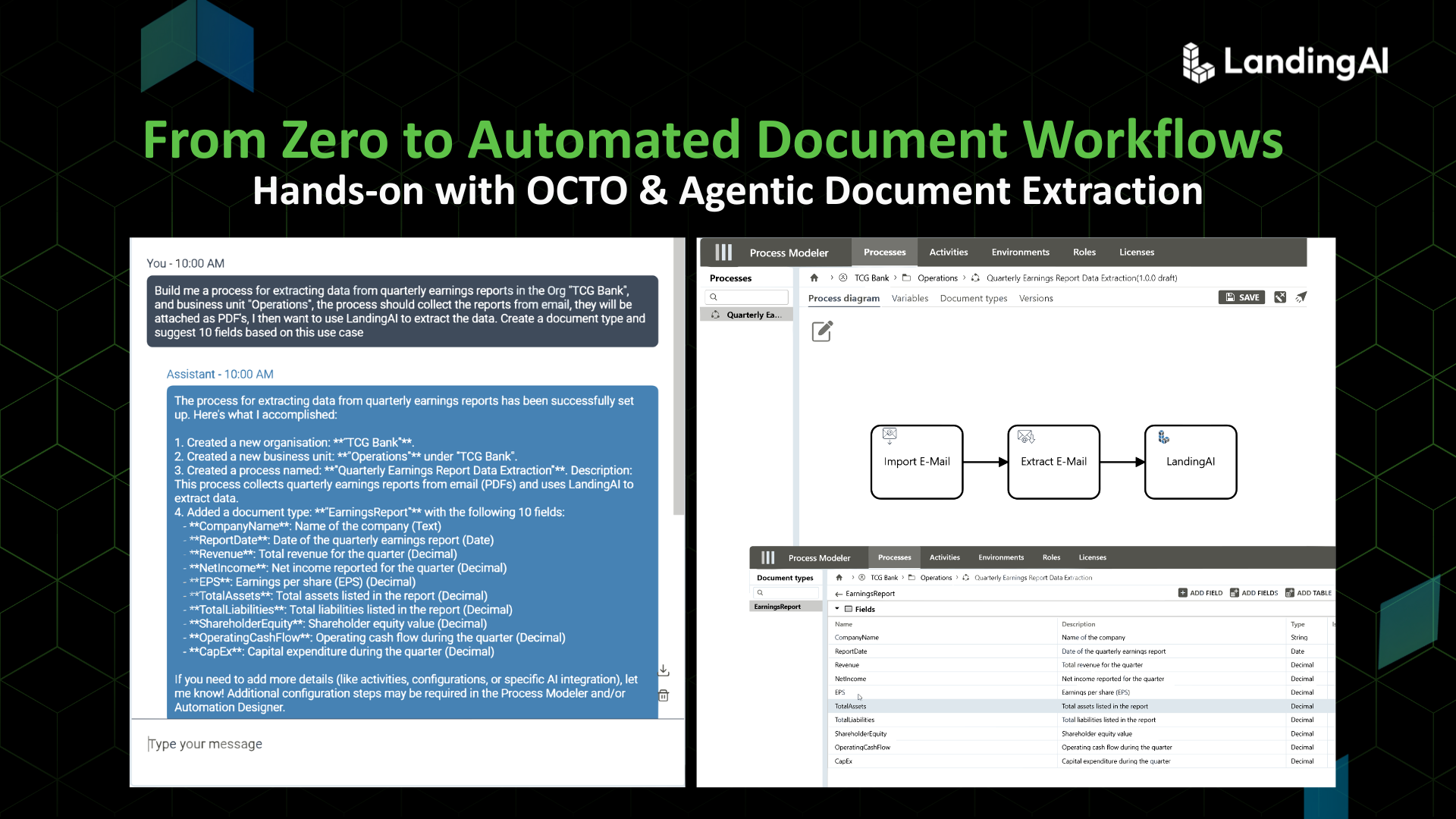

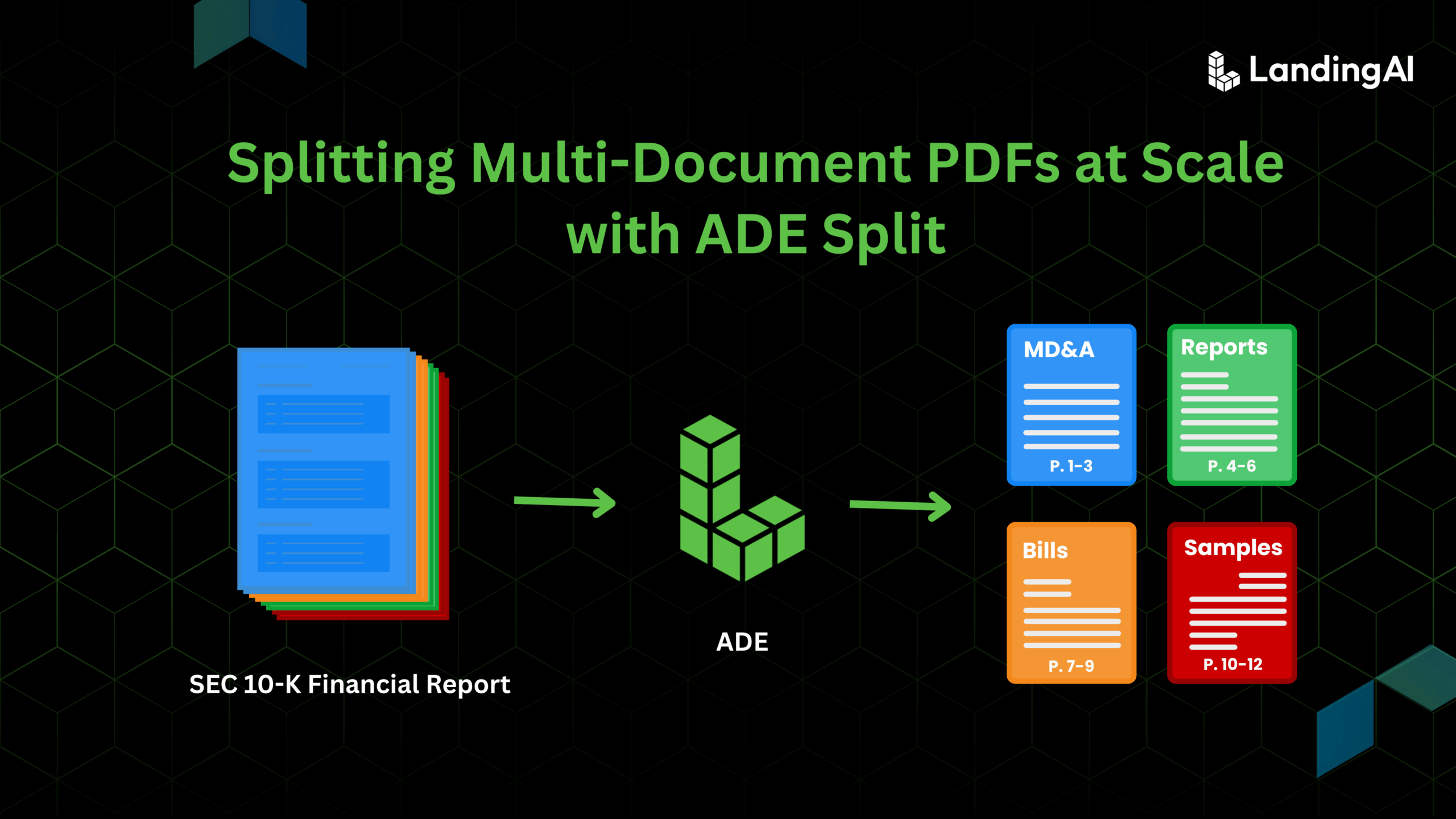

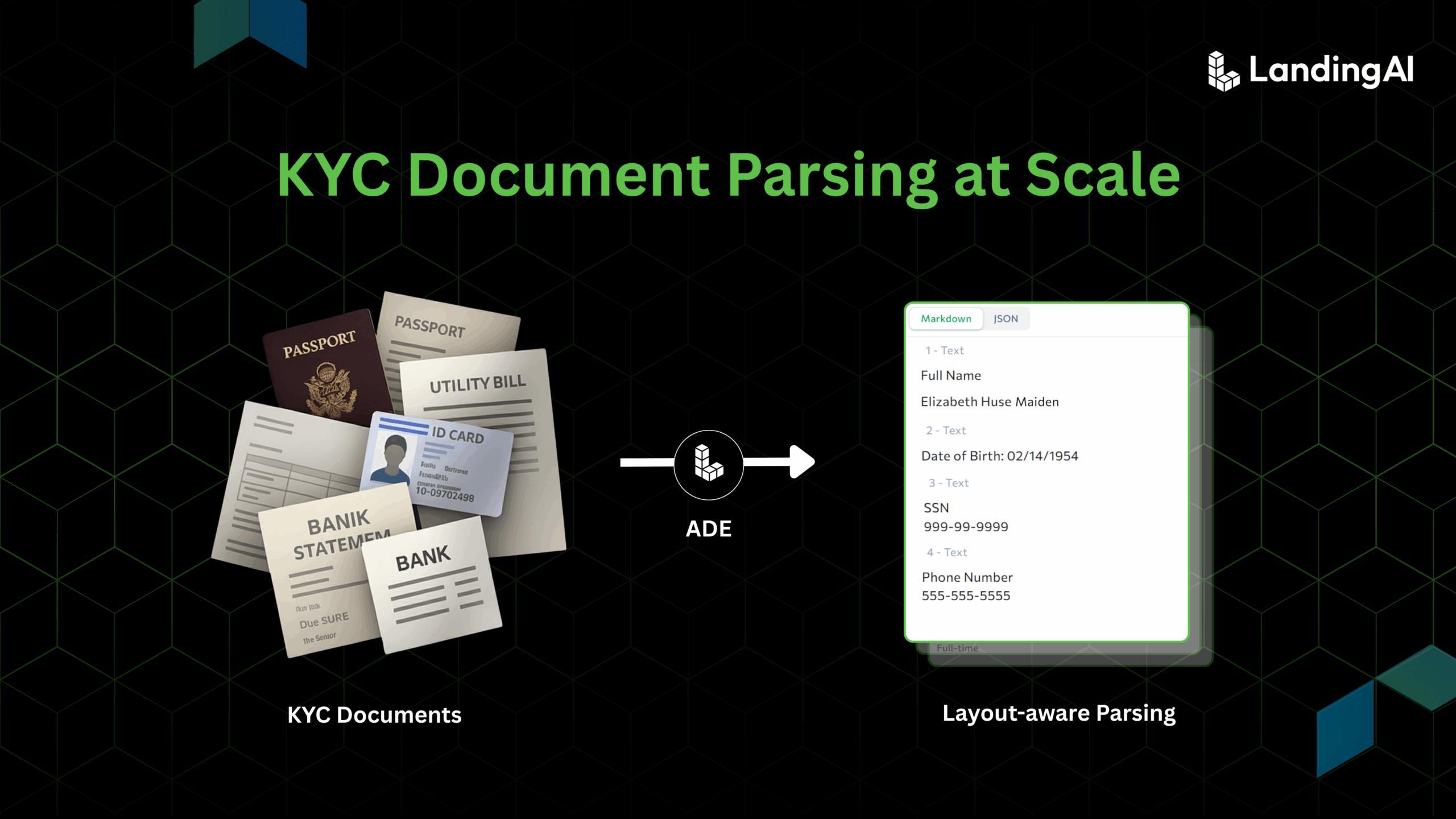

Agentic Document Extraction (ADE) from LandingAI solves this through a two-step process. First, the parse function converts your documents into clean Markdown. Then, the extract function pulls out only the specific fields you need from that Markdown and returns them as structured JSON key-value pairs.

That second step—extraction—is where schemas come in. An extraction schema defines exactly which fields to extract from your parsed content: field names, data types, and how everything should be organized. Get the schema right, and you set yourself up to scale document processing across thousands of files with consistent, reliable results.

Why Extraction Schemas Matter

Once the ADE parse function converts your documents into Markdown, the extract function uses your schema to identify and pull out only the data you specified. Think of the schema as your shopping list for data extraction. With it, you specify exactly what you need: the policy number as a string, the claim amount as a number in USD, and the list of damaged items as an array of objects.
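The shopping list above maps directly onto a JSON Schema. As a minimal sketch, assuming you keep schemas in Python code, you can build one as a plain dictionary and serialize it with the standard json module. The field names here are illustrative; ADE does not require them.

```python
import json

# Illustrative schema for the three fields in the shopping-list example.
# Field names are hypothetical; use whatever names your downstream system expects.
claim_schema = {
    "type": "object",
    "properties": {
        "policy_number": {
            "type": "string",
            "description": "Policy number exactly as printed on the claim",
        },
        "claim_amount": {
            "type": "number",
            "description": "Total claim amount in USD",
        },
        "damaged_items": {
            "type": "array",
            "description": "List of damaged items",
            "items": {
                "type": "object",
                "properties": {
                    "item_description": {"type": "string"},
                    "estimated_value": {"type": "number"},
                },
            },
        },
    },
}

# Serialize to a JSON string, for example to store it next to your pipeline code.
schema_json = json.dumps(claim_schema, indent=2)
print(schema_json)
```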

What a schema adds is specificity. And in industries like insurance, finance, banking, and logistics, specificity isn’t just helpful; it’s required for scaling your document processing pipeline.

Consider these scenarios:

Insurance Claims: You need to extract policyholder information, claim amounts, dates of loss, and lists of damaged property. Each field needs the right data type and structure to flow into your claims management system.

Bank Statements: Account numbers, transaction lists, opening and closing balances—all with specific data types and formats. Your reconciliation system expects numbers as actual numbers, not strings.

Logistics Documents: Bills of lading contain nested information: shipper details, consignee details, and line items with quantities, weights, and dimensions. The hierarchy matters for tracking and routing.

Financial Invoices: Line items, tax calculations, payment terms, vendor information. The structure needs to match your accounting system’s expectations to process payments correctly.

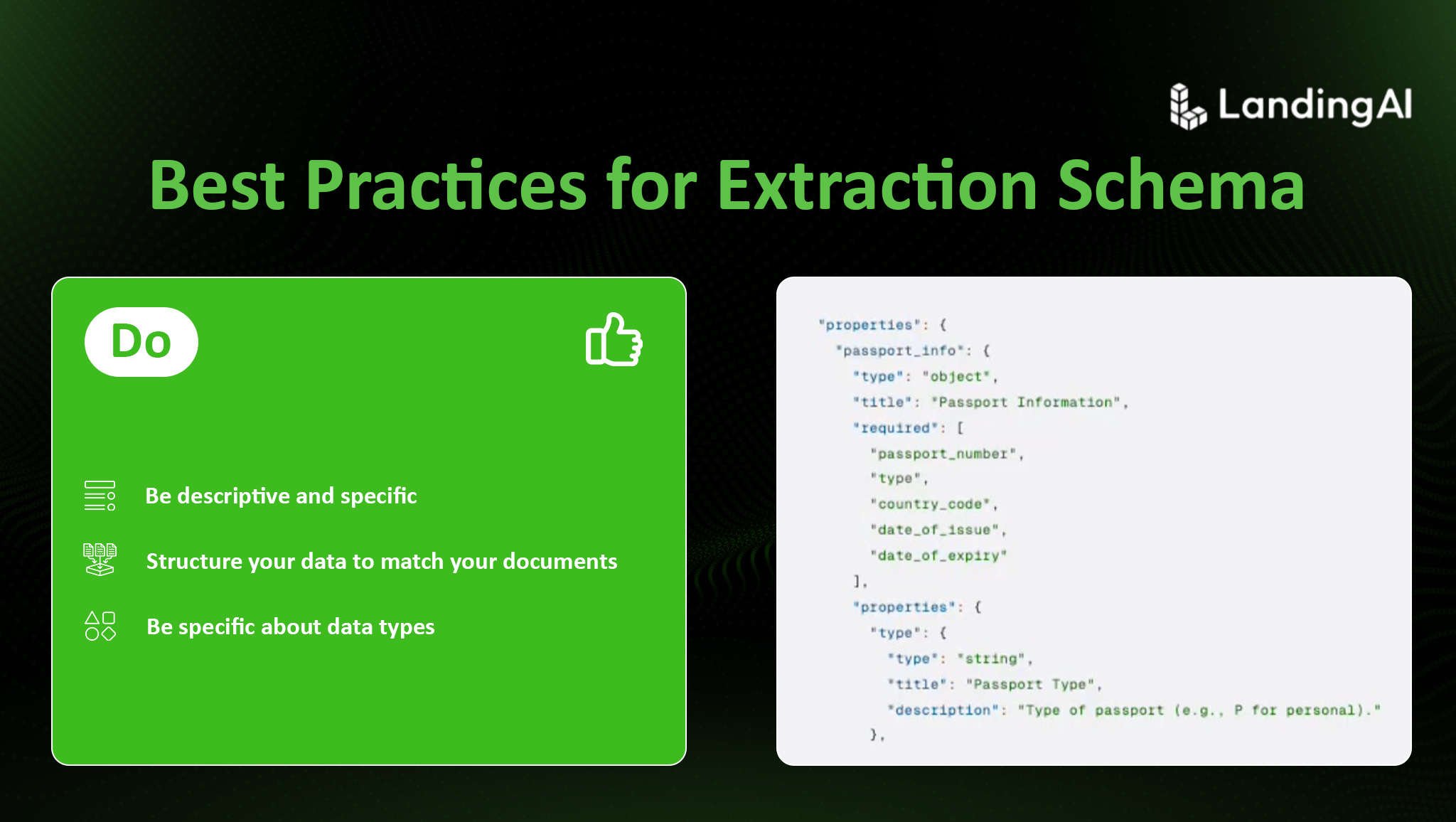

Three Best Practices for Extraction Schemas

A well-designed extraction schema sets you up for success at scale. Follow these three practices to create schemas that extract clean, consistent data from your documents.

1. Be Descriptive and Specific

The field names and descriptions you write guide the extraction engine. Specific, detailed definitions lead to accurate extraction.

Field names should clearly indicate what data you’re extracting. When processing bank statements, for example, you might encounter account numbers, routing numbers, check numbers, and transaction numbers. A generic name like number could match any of them, while a specific name like routing_number, paired with a description, tells the extraction engine which value you want. Compare the two approaches below; the second leaves no room for ambiguity.

```json
"number": {
  "type": "string"
}
```

versus:

```json
"routing_number": {
  "type": "string",
  "description": "Nine-digit ABA routing number for the bank"
}
```

Descriptions provide additional context that improves extraction accuracy; the more specific you are, the better your results. Consider this definition for an insurance premium:

```json
"total_premium": {
  "type": "number",
  "description": "Annual premium amount in USD, excluding state taxes and fees"
}
```

This description specifies:

- The time period (annual)

- The currency (USD)

- What to exclude (state taxes and fees)

That level of detail helps the extraction engine identify the correct total, even when multiple totals appear in the document.
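Before running a schema across many documents, it can help to check that every field carries a description. A minimal Python sketch, assuming the schema is loaded as a dictionary; find_undescribed_fields is a hypothetical helper, not part of ADE.

```python
from typing import Any


def find_undescribed_fields(schema: dict[str, Any], path: str = "") -> list[str]:
    """Return dotted paths of properties that lack a "description".

    Recurses into nested objects ("properties") and into array item
    schemas ("items") so deeply nested fields are checked too.
    """
    missing: list[str] = []
    for name, field in schema.get("properties", {}).items():
        field_path = f"{path}.{name}" if path else name
        if not field.get("description"):
            missing.append(field_path)
        # Nested object: check its own properties.
        missing.extend(find_undescribed_fields(field, field_path))
        # Array of objects: check the item schema's properties.
        items = field.get("items")
        if isinstance(items, dict):
            missing.extend(find_undescribed_fields(items, field_path + "[]"))
    return missing


schema = {
    "type": "object",
    "properties": {
        "number": {"type": "string"},
        "routing_number": {
            "type": "string",
            "description": "Nine-digit ABA routing number for the bank",
        },
    },
}
print(find_undescribed_fields(schema))  # ['number']
```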

2. Structure Your Data to Match Your Documents

Organize your schema to reflect both how data appears in your parsed Markdown and how you’ll use it in your application.

Match the document flow: If vendor information appears at the top of an invoice and line items at the bottom, list those fields in your schema in the same order. This helps the extraction engine follow the natural flow of the content.

Use nested objects for hierarchical data: Some information is naturally grouped. For example, insurance policies have policyholders with addresses, and invoices have vendor information with contact details. Preserve that structure:

```json
{
  "type": "object",
  "properties": {
    "policyholder": {
      "type": "object",
      "properties": {
        "name": {
          "type": "string",
          "description": "Full name of the policyholder"
        },
        "address": {
          "type": "object",
          "properties": {
            "street": {
              "type": "string",
              "description": "Street address"
            },
            "city": {
              "type": "string",
              "description": "City name"
            },
            "state": {
              "type": "string",
              "description": "Two-letter state code"
            },
            "zip": {
              "type": "string",
              "description": "Five or nine-digit ZIP code"
            }
          }
        }
      }
    }
  }
}
```

This structure mirrors how the data appears in the document and creates a logical grouping for your application code. Instead of managing separate policyholder_name, policyholder_street, policyholder_city fields, you have a clean policyholder object with nested address details.
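Because the output keeps the same nesting as the schema, application code can map it onto matching types in one step. A minimal Python sketch, assuming the extracted result arrives as a dictionary shaped like the nested schema; the dataclass names and sample values are hypothetical.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Address:
    street: str
    city: str
    state: str
    zip: str


@dataclass
class Policyholder:
    name: str
    address: Address


def to_policyholder(data: dict[str, Any]) -> Policyholder:
    """Map the nested "policyholder" object onto typed dataclasses.

    Address(**...) raises TypeError if keys are missing or unexpected,
    which surfaces schema drift early.
    """
    return Policyholder(name=data["name"], address=Address(**data["address"]))


# Hypothetical extraction output shaped by the nested schema.
extracted = {
    "policyholder": {
        "name": "John Smith",
        "address": {"street": "12 Elm St", "city": "Springfield", "state": "IL", "zip": "62704"},
    }
}

holder = to_policyholder(extracted["policyholder"])
print(holder.address.city)  # Springfield
```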

3. Be Specific About Data Types

Choosing the right data type for each field ensures your extracted data works correctly in downstream systems. Use these data types strategically:

- string: Text values like names, addresses, or descriptions

- number: Monetary values, measurements, or any value you’ll calculate with

- integer: Counts, quantities, or whole numbers

- boolean: True/false flags

- array: Lists of similar items

- object: Grouped or nested information
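To confirm that extracted values actually have the types your schema declares, you can map each JSON Schema type name to the Python types that json.loads produces. A minimal sketch; matches_type is a hypothetical helper, and a full validator such as the jsonschema package covers far more of the specification.

```python
from typing import Any

# Python types that json.loads produces for each JSON Schema type name.
_PY_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "array": list,
    "object": dict,
}


def matches_type(value: Any, schema_type: str) -> bool:
    """Return True if a decoded JSON value fits the given JSON Schema type."""
    if schema_type == "boolean":
        return isinstance(value, bool)
    if isinstance(value, bool):
        # Python's bool subclasses int, so True/False must be rejected explicitly.
        return False
    if schema_type == "integer" and isinstance(value, float):
        # Recent JSON Schema drafts count 3.0 (zero fractional part) as an integer.
        return value.is_integer()
    return isinstance(value, _PY_TYPES[schema_type])


print(matches_type(1250.75, "number"))    # True
print(matches_type("1250.75", "number"))  # False
print(matches_type(True, "integer"))      # False
```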

A common mistake? Using string for monetary values when you should use number:

This definition causes problems in downstream calculations:

```json
"claim_amount": {
  "type": "string",
  "description": "Total claim amount"
}
```

This one works correctly in your application:

```json
"claim_amount": {
  "type": "number",
  "description": "Total claim amount in USD"
}
```

When you extract monetary values as strings, your application code needs to parse and convert them before performing calculations. That adds complexity and introduces opportunities for errors. Extract them as numbers from the start, and they’re ready to use.
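To see the extra work that string-typed amounts create, compare summing the same three amounts in both forms. A minimal Python sketch; parse_usd is a hypothetical helper that handles only a leading $ and comma separators, and the amounts are made-up sample values.

```python
def parse_usd(text: str) -> float:
    """Convert a currency string such as "$1,250.75" to a float.

    Handles only a leading "$" and comma thousands separators; inputs like
    "USD 1.250,75" or "(1,250.75)" would need additional rules.
    """
    return float(text.strip().lstrip("$").replace(",", ""))


# Amounts extracted as strings: each one must be cleaned before arithmetic.
as_strings = ["$1,250.75", "$300.00", "$49.25"]
total_from_strings = sum(parse_usd(s) for s in as_strings)

# Amounts extracted as numbers: ready to use as-is.
as_numbers = [1250.75, 300.00, 49.25]
total_from_numbers = sum(as_numbers)

print(total_from_strings, total_from_numbers)  # 1600.0 1600.0
```

Skipping the conversion step is not an option: calling sum(as_strings) directly raises a TypeError, because Python will not add an int to a str.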

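The same principle applies to quantities, flags, and any other values that your system will process as specific data types. Dates are the exception: JSON has no native date type, so extract them as strings and state the expected format in the description (for example, YYYY-MM-DD). Getting this right in your schema saves conversion work and prevents type mismatch errors downstream.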
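Because dates come back as strings, a quick check that each value matches the format your schema's description asks for (YYYY-MM-DD, as in the date_of_loss field of the complete schema later in this post) catches format drift before it reaches downstream systems. A minimal Python sketch; parse_loss_date is a hypothetical helper.

```python
import re
from datetime import date

# Exactly four digits, dash, two digits, dash, two digits.
_ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def parse_loss_date(value: str) -> date:
    """Parse a date extracted as a YYYY-MM-DD string into a datetime.date.

    Raises ValueError for other layouts (such as "03/15/2024") and for
    impossible dates (such as "2024-02-30").
    """
    if not _ISO_DATE.fullmatch(value):
        raise ValueError(f"expected YYYY-MM-DD, got {value!r}")
    return date.fromisoformat(value)


print(parse_loss_date("2024-03-15"))  # 2024-03-15
```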

Putting It All Together: Insurance Claim Form

Here’s a complete schema for an insurance claim form that demonstrates all three best practices:

```json
{
  "type": "object",
  "properties": {
    "claim_number": {
      "type": "string",
      "description": "Unique claim identifier"
    },
    "policy_number": {
      "type": "string",
      "description": "Policy number associated with this claim"
    },
    "date_of_loss": {
      "type": "string",
      "description": "Date when the loss occurred in YYYY-MM-DD format"
    },
    "claim_type": {
      "type": "string",
      "enum": [
        "Property Damage",
        "Bodily Injury",
        "Collision",
        "Comprehensive",
        "Theft"
      ],
      "description": "Type of claim being filed"
    },
    "policyholder": {
      "type": "object",
      "properties": {
        "name": {
          "type": "string",
          "description": "Full name of the policyholder"
        },
        "phone": {
          "type": "string",
          "description": "Contact phone number including area code"
        },
        "email": {
          "type": "string",
          "description": "Email address for claim correspondence"
        }
      }
    },
    "damaged_items": {
      "type": "array",
      "description": "List of damaged property or injuries",
      "items": {
        "type": "object",
        "properties": {
          "item_description": {
            "type": "string",
            "description": "Description of damaged item or injury"
          },
          "estimated_value": {
            "type": "number",
            "description": "Estimated repair or replacement cost in USD"
          }
        }
      }
    },
    "total_claim_amount": {
      "type": "number",
      "description": "Total amount being claimed in USD"
    }
  },
  "required": [
    "claim_number",
    "policy_number",
    "date_of_loss",
    "claim_type",
    "total_claim_amount"
  ]
}
```
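The required list and the claim_type enum double as checks you can run on each result. A minimal Python sketch, assuming the extracted result is a dictionary; claim_problems is a hypothetical helper that covers only these checks, while a library such as the jsonschema package can validate a result against the whole schema.

```python
from typing import Any

# Copied from the "required" list and the claim_type "enum" in the schema.
REQUIRED = ["claim_number", "policy_number", "date_of_loss", "claim_type", "total_claim_amount"]
CLAIM_TYPES = {"Property Damage", "Bodily Injury", "Collision", "Comprehensive", "Theft"}


def claim_problems(result: dict[str, Any]) -> list[str]:
    """Return human-readable problems found in one extracted claim."""
    problems = [f"missing required field: {name}" for name in REQUIRED if result.get(name) is None]
    claim_type = result.get("claim_type")
    if claim_type is not None and claim_type not in CLAIM_TYPES:
        problems.append(f"unexpected claim_type: {claim_type!r}")
    amount = result.get("total_claim_amount")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if amount is not None and (isinstance(amount, bool) or not isinstance(amount, (int, float))):
        problems.append("total_claim_amount is not a number")
    return problems


# Hypothetical result with two problems: an unknown claim type and a string amount.
result = {
    "claim_number": "CLM-001",
    "policy_number": "POL-123",
    "date_of_loss": "2024-03-15",
    "claim_type": "Flood",
    "total_claim_amount": "4,500",
}
print(claim_problems(result))
```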

This schema demonstrates the three best practices:

Descriptive and specific: Field names like claim_number, policy_number, and date_of_loss clearly indicate what data to extract. Descriptions provide additional context—the date format, currency, and what each field represents.

Structured to match the document: The schema groups related information into nested objects (policyholder contains name, phone, and email). The damaged_items array handles repeating information. Fields are organized in a logical order that matches how claim forms typically present information.

Correct data types: Monetary values like estimated_value and total_claim_amount use the number type for downstream calculations. Text fields use string. The damaged_items array uses object for each item, allowing you to extract multiple damaged items with their associated values.

This schema returns only the specified fields as clean, structured JSON that flows directly into a claims management system without additional transformation.
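Because both estimated_value and total_claim_amount are numbers, downstream checks can work on them directly. A minimal Python sketch of one such check, assuming a hypothetical business rule that the item estimates should add up to the claimed total; your claims process may define the total differently.

```python
import math
from typing import Any


def items_match_total(claim: dict[str, Any], tolerance: float = 0.01) -> bool:
    """Check that the damaged items' estimated values add up to the claimed total.

    Works directly on extracted numbers; no string parsing is needed because
    the schema declares both fields as "number". Missing estimates count as 0.
    """
    item_sum = math.fsum(item.get("estimated_value") or 0 for item in claim.get("damaged_items", []))
    return abs(item_sum - claim["total_claim_amount"]) <= tolerance


# Hypothetical extracted claim.
claim = {
    "total_claim_amount": 4500.0,
    "damaged_items": [
        {"item_description": "Roof repair", "estimated_value": 3800.0},
        {"item_description": "Water-damaged carpet", "estimated_value": 700.0},
    ],
}
print(items_match_total(claim))  # True
```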

Getting Started

Ready to build your first extraction schema? Here’s your path to success:

- Identify your use case: What document type are you processing? What fields do you need? Start with the most critical fields rather than trying to extract everything at once.

- Build your proof of concept: Use the ADE Playground to create and test your schema. The playground’s visual interface guides you through defining fields, choosing data types, and validating your schema against real documents.

- Refer to the full documentation: For detailed technical specifications, supported JSON Schema keywords, and advanced features, see the Extraction Schema documentation.

- Scale to production: Once your schema extracts data consistently in the playground, integrate it into your production pipeline using the ade-python library, or call the Parse and Extract APIs directly.
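At production scale, one unreadable file should not stop a whole batch. A minimal Python sketch of a batch loop, assuming you wrap your own ADE parse and extract calls in a function that takes a file path and returns the extracted fields; run_batch and fake_extract are hypothetical, and fake_extract stands in for the real call only for demonstration.

```python
from typing import Any, Callable, Iterable


def run_batch(
    paths: Iterable[str],
    extract_fn: Callable[[str], dict[str, Any]],
    required: list[str],
) -> tuple[dict[str, dict[str, Any]], dict[str, str]]:
    """Run an extraction callable over many documents and sort the outcomes.

    Returns (succeeded, failed), where failed maps each path to a reason.
    """
    succeeded: dict[str, dict[str, Any]] = {}
    failed: dict[str, str] = {}
    for path in paths:
        try:
            result = extract_fn(path)
        except Exception as exc:  # record the error and move on to the next file
            failed[path] = f"extraction error: {exc}"
            continue
        missing = [name for name in required if result.get(name) is None]
        if missing:
            failed[path] = "missing fields: " + ", ".join(missing)
        else:
            succeeded[path] = result
    return succeeded, failed


def fake_extract(path: str) -> dict[str, Any]:
    """Hypothetical stand-in for a real extraction call."""
    if path == "scan-blurry.png":
        raise RuntimeError("unreadable scan")
    if path == "claim-2.pdf":
        return {"claim_number": "CLM-002"}
    return {"claim_number": "CLM-001", "policy_number": "POL-123"}


ok, bad = run_batch(
    ["claim-1.pdf", "claim-2.pdf", "scan-blurry.png"],
    fake_extract,
    ["claim_number", "policy_number"],
)
print(sorted(ok))  # ['claim-1.pdf']
print(bad)
```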

A well-designed extraction schema is your foundation for scaling document processing. Take the time to be descriptive and specific, structure your data thoughtfully, and use the right data types—and you’ll have a schema that produces consistent, reliable results across thousands of documents.

Try the ADE Playground and see how a well-designed schema extracts exactly the data you need from your parsed documents.