TECH BRIEF

How to Integrate Deep Learning Into Existing Platforms

Key factors that operators should consider when deploying deep learning in existing machine vision applications

For decades, industrial automation and machine vision (MV) have helped manufacturers improve process control and product quality. Both technologies aim to remove human error and subjectivity through tightly controlled processes based on well-defined, quantifiable parameters. MV has become an essential tool for improving manufacturing productivity and throughput while reducing waste and driving revenue growth.

MV encompasses the technology and methods used to extract information from an image. It’s a mature segment of the industrial automation landscape that replaces human vision with a camera and computer to perform inspection and many other tasks, such as guidance, localization, counting, gauging, measurement, and identification.

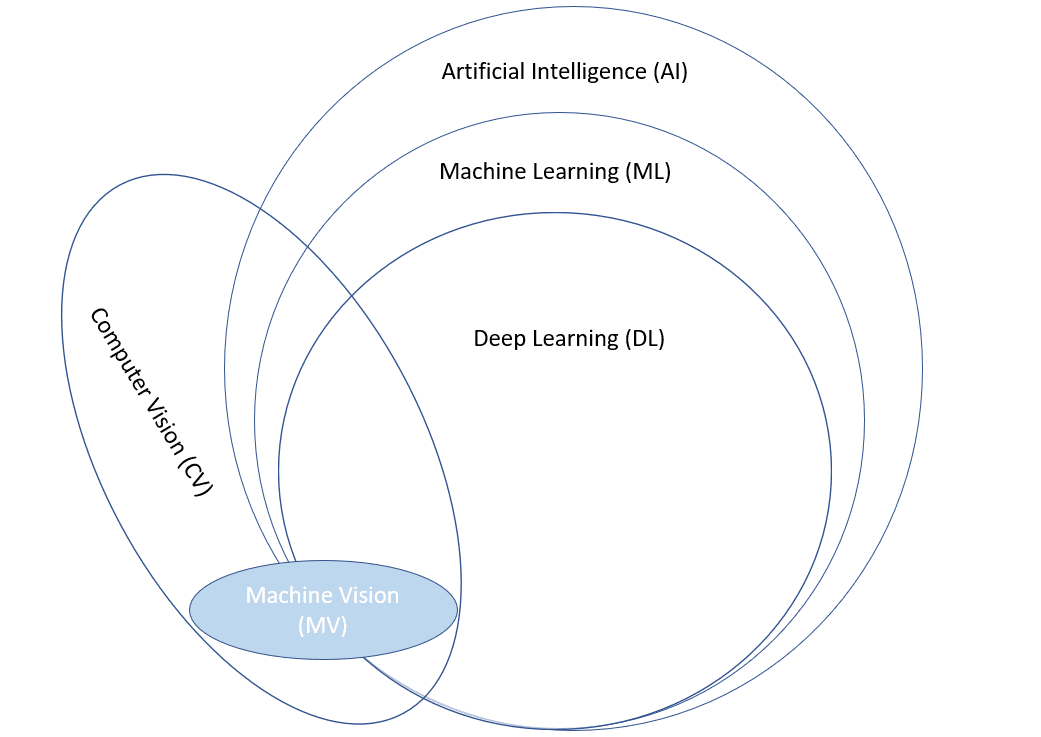

In contrast, deep learning (DL) is relatively new on the scene. Over the last 5–10 years, it has become a useful machine learning (ML) tool for implementing and integrating MV systems in the industrial automation environment. Before delving into the key factors to consider when deploying DL in existing MV applications, it’s important to understand not only how MV and DL differ but also how they relate to artificial intelligence (AI) and computer vision (CV).

Clear Up the Confusion

Some would argue that the term “artificial intelligence” has been widely misused in the industrial automation environment, especially as it relates to MV. AI really is a science. John McCarthy, who coined the term in the 1950s, described AI as the science and engineering of making intelligent machines. In today’s MV marketplace, however, the term is often used erroneously as shorthand for one specific AI technique: DL.

AI encompasses ML, and ML encompasses DL; likewise, CV encompasses MV. Although CV is a science, some use the term to describe DL/AI techniques for object classification that help computers “see” in a way that mimics human perception and learning. CV as an academic discipline, however, includes much more than that. Historically, MV is a subset of both CV and AI, with its practical implementation focused mostly on industrial automation.

Consequently, when someone mentions CV, it’s always best to clarify whether they mean DL or analytical MV techniques. To avoid confusion, use CV to refer to the broader science, and remember that MV is the subset of CV that deals with its practical implementation in industrial automation.

Define Machine Vision

MV in general is a broadly successful and thriving market, characterized by technologies and solutions supplied to an extensive and expanding customer base within industrial automation. The MV market is projected to keep growing, and it’s actively supported by trade associations including the Association for Advancing Automation, the European Machine Vision Association, and the Japan Industrial Imaging Association.

An MV system is a collection of components including cameras, sensors, computing devices, lenses, optics, illumination sources and lighting, and software and applications. MV engineering and solution development typically involve certified vision professionals, engineers, and systems integrators who develop application-specific solutions to improve products and processes.

The concepts of smart manufacturing and Industry 4.0 have benefited tremendously from the data provided by MV and associated technologies such as robotics and other automation. An MV system includes three specific processes: image acquisition, image analysis, and data/results integration, which are associated with performing inspections and facilitating industrial automation.

Image acquisition involves capturing an image that provides the information an application needs to do its job. Image analysis is the overall process of extracting information from the image, including preprocessing, feature extraction, object location, segmentation, identification, measurement, and more. Data/results integration involves deciding whether a part is good or defective and, if it’s defective, communicating that result to, for example, a reject mechanism linked to the automation process.
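The three processes can be sketched as a minimal pipeline. Everything here is an illustrative assumption: the image is a hard-coded grayscale array standing in for a camera grab, the analysis step is a simple dark-pixel count, and the reject signal is a print statement rather than a real PLC or actuator interface.

```python
from dataclasses import dataclass

# Hypothetical 8-bit grayscale image stored as a list of rows.
Image = list[list[int]]


@dataclass
class InspectionResult:
    defect_pixels: int
    passed: bool


def acquire_image() -> Image:
    """Image acquisition: stand-in for a camera grab (hard-coded here)."""
    return [
        [200, 200, 200, 200],
        [200, 40, 45, 200],
        [200, 200, 200, 200],
    ]


def analyze_image(image: Image, dark_threshold: int = 100) -> int:
    """Image analysis: count pixels darker than the threshold."""
    return sum(1 for row in image for px in row if px < dark_threshold)


def integrate_result(defect_pixels: int, max_defect_pixels: int = 1) -> InspectionResult:
    """Data/results integration: turn the measurement into a pass/fail decision."""
    return InspectionResult(defect_pixels, defect_pixels <= max_defect_pixels)


def signal_reject() -> None:
    """Placeholder for notifying a reject mechanism on the line."""
    print("reject")


result = integrate_result(analyze_image(acquire_image()))
if not result.passed:
    signal_reject()
```

Keeping the stages as separate functions mirrors the division of labor in the text: the decision rule in the last stage can change without touching acquisition or analysis.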

Understand How Machine Vision and Deep Learning Differ

Regardless of whether the MV application involves DL, tying results back to the process is critical for success, enabling the MV application or a hybrid MV/DL application to improve the automation and the process.

Traditional rule-based (or analytical) MV systems deliver consistent performance when defects are well defined. However, it may be difficult or impossible for analytical algorithms to reliably detect defects such as scratches or dents that vary significantly in size, shape, location, and appearance from one piece to the next.

In contrast, DL software relies on example-based training and neural networks to analyze defects, find and classify objects, and read printed markings. Instead of relying on engineers, systems integrators, and MV experts to tune a unique set of parameterized MV tools until the application requirements are satisfied, DL relies on operators, line managers, and other subject matter experts to label images. Once it has been shown what good parts and bad parts look like, the DL software can distinguish between good and defective parts and classify the type of defect present.
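The sketch below illustrates the example-based workflow under a loud simplification: it swaps in a nearest-centroid classifier over hypothetical two-number feature vectors instead of a neural network. What it shares with DL is the shape of the process: experts supply labeled examples, the software derives its decision rule from them, and nobody tunes a threshold by hand.

```python
from math import dist
from statistics import fmean

# Hypothetical feature vectors (e.g., mean brightness, edge density) taken
# from expert-labeled images. A real DL system learns its own features from
# pixels; a nearest-centroid classifier stands in for the network here.
labeled_examples = [
    ((0.90, 0.10), "good"),
    ((0.85, 0.15), "good"),
    ((0.40, 0.70), "scratch"),
    ((0.45, 0.65), "scratch"),
    ((0.30, 0.20), "dent"),
    ((0.35, 0.25), "dent"),
]


def train(examples):
    """Compute one centroid (per-axis mean) for each label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(axis) for axis in zip(*vectors))
        for label, vectors in by_label.items()
    }


def classify(model, features):
    """Assign the label whose centroid is closest to the new sample."""
    return min(model, key=lambda label: dist(model[label], features))


model = train(labeled_examples)
print(classify(model, (0.42, 0.68)))  # → scratch
```

In this sketch, adding a new defect class means adding labeled examples and retraining, not writing a new rule.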

Know the Strengths and Weaknesses of Deep Learning

DL excels where subjective decisions must be made. In these cases, it’s easy to show the system what good and bad parts look like in an image, or which anomalies or features of interest it should flag. DL requires a human or team of humans in the loop to make those subjective decisions initially and label the images, which are then used to train the DL models.

DL has a significant advantage over MV’s analytical tools in confusing scenes or scenes with confusing texture: situations where identifying a specific feature is difficult because the image is highly complex or extremely variable. Examples include surface defects in lumber, textiles, or metal.

DL is not particularly well suited for measurement, gauging, precisely locating features of interest, or result parameterization. The advantage of result parameterization is often underappreciated. For example, if an analytical MV system rejects parts with defects 1 mm or larger and too many parts are failing, the operator can change that parameter in the software to 1.25 mm, loosening the tolerance and reducing the number of parts that fail for that defect.
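The 1 mm versus 1.25 mm example reduces to a single tunable argument in an analytical tool. The sketch assumes the defect sizes have already been measured; the sizes and function names are hypothetical.

```python
# Hypothetical measured defect sizes in mm, one per inspected part.
defect_sizes_mm = [0.4, 1.1, 0.9, 1.2, 1.3, 0.7, 1.05, 1.6]


def pass_flags(sizes_mm, max_defect_mm):
    """A part passes only if its defect is smaller than the size limit."""
    return [size < max_defect_mm for size in sizes_mm]


def failure_count(sizes_mm, max_defect_mm):
    """Number of parts rejected at the given size limit."""
    return sum(not ok for ok in pass_flags(sizes_mm, max_defect_mm))


print(failure_count(defect_sizes_mm, 1.0))   # reject at 1 mm or larger → 5
print(failure_count(defect_sizes_mm, 1.25))  # loosened to 1.25 mm → 2
```

The operator changes one number and immediately sees the effect on the reject rate; no images need to be relabeled.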

In contrast, DL requires these defect classes to be trained by labeling images. If too many parts suddenly start failing, there’s no parameter to adjust. Instead, the images must be relabeled so that only larger defects count as failures and the model retrained, with no guarantee that it will work as desired. The lack of result parameterization is therefore an important weakness of DL and a key consideration for its acceptance on the factory floor.

Consider a Hybrid Approach

Used as an analysis tool within an integrated MV system, DL offers value across a wide range of applications, not just difficult or challenging ones. For example, a hybrid approach can help address DL’s parameterization limitations.

In such a hybrid approach, DL software would first classify potential candidate defects. Then, a traditional analytical MV caliper tool could measure all the candidate defects to decide whether the defect is too big or too small. While that’s just one scenario where a hybrid approach can minimize the impact of DL limitations, there are many more situations where hybridization can improve overall results.
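A minimal sketch of that hybrid flow, assuming a hypothetical `dl_find_candidates` function that stands in for DL inference and returns an intensity profile sampled across each candidate. The caliper here is deliberately simplified: it measures the span of dark samples rather than locating edge pairs the way a commercial caliper tool would.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    label: str           # class assigned by the (hypothetical) DL model
    profile: list[int]   # intensity samples taken across the candidate


def dl_find_candidates(image_id: str) -> list[Candidate]:
    """Hypothetical stand-in for DL inference; ignores image_id and
    returns canned candidates for illustration."""
    return [
        Candidate("scratch", [210, 205, 60, 55, 58, 200, 210]),
        Candidate("scratch", [210, 62, 57, 61, 59, 63, 66, 205]),
    ]


def caliper_width_mm(profile: list[int], pixel_mm: float, dark: int = 100) -> float:
    """Simplified caliper: span from the first to the last dark sample."""
    dark_idx = [i for i, value in enumerate(profile) if value < dark]
    if not dark_idx:
        return 0.0
    return (dark_idx[-1] - dark_idx[0] + 1) * pixel_mm


def hybrid_inspect(image_id: str, pixel_mm: float, max_defect_mm: float) -> bool:
    """DL proposes candidates; the analytical caliper decides if any is too big."""
    for candidate in dl_find_candidates(image_id):
        if caliper_width_mm(candidate.profile, pixel_mm) >= max_defect_mm:
            return False  # at least one defect exceeds the size limit
    return True


print(hybrid_inspect("part-001", pixel_mm=0.25, max_defect_mm=1.0))  # → False
print(hybrid_inspect("part-001", pixel_mm=0.25, max_defect_mm=1.6))  # → True
```

Because the size limit is an ordinary argument, loosening or tightening it is a one-line change; the DL model decides what a candidate is, and the analytical tool decides how large is too large.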

Many DL platforms available today do not require MV or DL experience. These scalable platforms provide an environment in which teams of subject matter experts can develop models collaboratively. Using such DL software, teams can quickly and easily label images, review errors, and iteratively retrain the model to improve it over time.

Look at Chaining Deep Learning and Analytical Tools

It’s always important to analyze the application requirements and make sure that the solution fits the needs of the application, is practical, and is even feasible. End users and integrators alike often try to apply DL to applications that are too difficult, impractical, or even impossible from an imaging or deployment standpoint.

Another disadvantage of DL is that it’s impossible to know in advance how a model will perform or what it will be capable of. But as more DL systems are deployed, chaining DL and analytical tools has become a common way to get around some MV and DL challenges. In fact, each can benefit from the other: DL improves MV, and MV improves DL.

Just as adding analytical tools to DL addresses its parameterization limitations, DL inference can enhance the power of traditional analytical tools. DL scientists call this model chaining; it could also be called tool chaining. Think of combining MV preprocessing and postprocessing tools with DL inference, both to overcome the limitations of DL and to use DL to improve the results of analytical MV tools.
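Tool chaining can be sketched as function composition, where each stage consumes the previous stage’s output. In this illustration the DL stage is a hypothetical stand-in that scores darker pixels as more likely defective; a real deployment would call a trained model from whatever DL framework the platform uses.

```python
from functools import reduce
from typing import Callable

Stage = Callable[[object], object]


def chain(*stages: Stage) -> Stage:
    """Compose stages left to right: each output feeds the next stage."""
    return lambda data: reduce(lambda acc, stage: stage(acc), stages, data)


def normalize(image):
    """MV preprocessing: rescale 8-bit pixel values to the range [0, 1]."""
    return [[px / 255 for px in row] for row in image]


def dl_defect_scores(image):
    """Hypothetical stand-in for DL inference: per-pixel defect score.
    Darker pixels simply score higher here; no model is involved."""
    return [[1.0 - px for px in row] for row in image]


def count_defect_pixels(scores, threshold=0.5):
    """MV postprocessing: threshold the score map and count hits."""
    return sum(score > threshold for row in scores for score in row)


pipeline = chain(normalize, dl_defect_scores, count_defect_pixels)
print(pipeline([[255, 20, 255], [255, 30, 255]]))  # → 2
```

Keeping stages separate makes it easy to swap one preprocessing or postprocessing tool without rewriting the others, although changing the preprocessing that feeds a trained model may still require retraining it.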

Weigh the Benefits and Liabilities of Machine Vision and Deep Learning

While experienced MV system architects, integrators, and developers can address most applications with traditional rule-based, analytical tools, this approach has drawbacks. Analytical MV tools require developing a rule-based solution, which could include hundreds or even thousands of combinations of parameterized tools from the MV analytical tool arsenal to achieve satisfactory application results.

These analytical MV tools cover everything from preprocessing and image analysis to postprocessing of the data. This approach usually requires a high degree of parameterization, and the more complex the application, the more parameterization it needs. Parameterization allows the inspection to be tuned based on the analytical tools that have been selected.

A DL implementation workflow, in contrast, involves training on a data set. This approach is remarkably similar to how one might train a human inspector to make decisions about an inspection. It doesn’t matter whether it’s a difficult application or a relatively straightforward application; the workflow is the same.

Scalable DL platforms give operators, line managers, and subject matter experts with MV expertise the ability to tune the data set, review what has been trained, compare the results, and retrain as needed. Just as an operator may need to be retrained from time to time by being shown an error or a missed defect, a DL implementation may require subject matter experts to relabel images from time to time.

Decide the Best Workflow for the Application

MV implementations generally require application-specific solutions. An MV application at point A on an assembly line and another at point B may each require a unique set of parameterized analytical MV tools. Each solution would require an MV expert or system developer to research, program, test, validate, and adjust those tools until the application requirements are satisfied.

When the image, the process, or something on the part drifts and changes the inspection results, many operators may not know how to adjust the parameters at A and B. What if there are hundreds of MV applications? Will the operator understand how to use, maintain, and manipulate all of them, each with different parameters, different analytical tools, and different implementations?

Probably not. Instead, they will most likely have to turn to the in-house MV expert, systems integrator, or developer to tweak the parameters. DL, however, democratizes that process by allowing exactly the same workflow to be used for every application on the assembly line. If a consistent workflow improves the process and how operators and line managers use the technology, DL could be the best technology for the job.