In the fight against the coronavirus, social distancing has proven to be a very effective measure to slow down the spread of the disease. While millions of people are staying at home to help flatten the curve, many of our customers in the manufacturing and pharmaceutical industries are still having to go to work every day to make sure our basic needs are met.

To complement our customers’ efforts and help them maintain social distancing protocols in the workplace, LandingAI has developed an AI-enabled social distancing detection solution that analyzes real-time video streams from a camera and detects whether people are keeping a safe distance from each other.

For example, at a factory that produces protective equipment, technicians could integrate this social distancing software into their security camera systems and, after a few easy calibration steps, use it to monitor the working environment. As the demo below shows, the detector highlights in red any people who are closer together than the minimum acceptable distance, and draws a line between them for emphasis. The system can also issue an alert to remind people to keep a safe distance if the protocol is violated.

As part of an ongoing effort to keep our customers and others safe, and understanding that the only way through this is with global collaboration, we wanted to share the technical methodology we used to develop this software. The demos below help to explain visually our approach to social distancing monitoring, which consists of three main steps: calibration, detection, and measurement.

Calibration

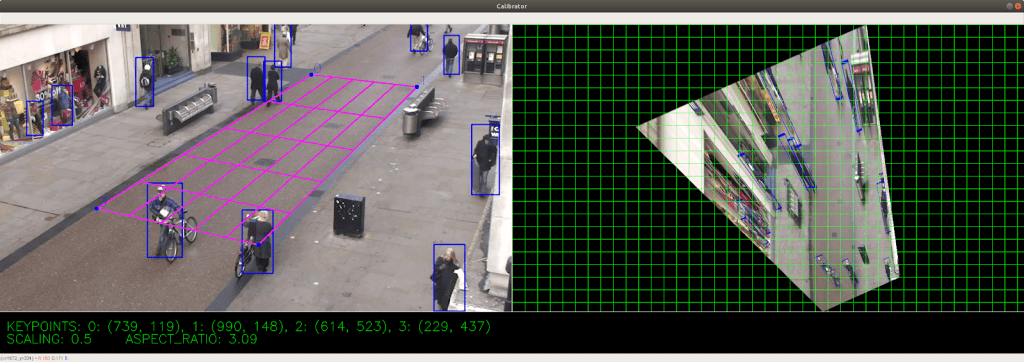

As the input video may be taken from an arbitrary perspective view, the first step of the pipeline is computing the transform (more specifically, the homography) that morphs the perspective view into a bird’s-eye (top-down) view. We term this process calibration. As the input frames are monocular (taken from a single camera), the simplest calibration method involves selecting four points in the perspective view and mapping them to the corners of a rectangle in the bird’s-eye view. This assumes that every person is standing on the same flat ground plane. From this mapping, we can derive a transformation that can be applied to the entire perspective image. This method, while well-known, can be tricky to apply correctly. As such, we have built a lightweight tool that enables even non-technical users to calibrate the system in real time.
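The four-point mapping described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not the calibration tool itself: the function names, the sample pixel coordinates, and the choice to fix the bottom-right entry of the homography to 1 are all assumptions made for the example.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b] so row operations touch both sides.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def homography_from_points(src, dst):
    """3x3 homography (nested lists) mapping four src points to four dst points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = solve_linear(rows, rhs)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(H, point):
    """Map one (x, y) point from the perspective view to the bird's-eye view."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


# Hypothetical calibration: a trapezoid on the ground in the camera image
# is mapped to the corners of a 400 x 600 pixel rectangle in the top-down view.
perspective_corners = [(300, 200), (500, 200), (700, 500), (100, 500)]
birds_eye_corners = [(0, 0), (400, 0), (400, 600), (0, 600)]
H = homography_from_points(perspective_corners, birds_eye_corners)
```

In practice a library routine such as OpenCV's `cv2.getPerspectiveTransform` computes the same kind of matrix from four point pairs; the hand-written solver here only keeps the example dependency-free.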

During the calibration step, we also estimate the scale factor of the bird’s-eye view, i.e. how many pixels correspond to 6 feet in real life.

On the left is the original perspective view, overlaid with a calibration grid. On the right is the resulting bird’s-eye view. Note that the sides of the street run parallel to the green grid lines.
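The scale factor estimated during calibration amounts to simple arithmetic once one real-world length is known. The sketch below assumes that two points in the bird's-eye view are a known number of feet apart and that the bird's-eye view has the same scale in both directions; the function name and the sample numbers are illustrative, not taken from the actual tool.

```python
import math


def pixels_per_foot(point_a, point_b, known_feet):
    """Bird's-eye pixels per foot, from two bird's-eye points a known distance apart."""
    pixel_distance = math.hypot(point_a[0] - point_b[0], point_a[1] - point_b[1])
    return pixel_distance / known_feet


# Hypothetical reference: two ground markers 10 feet apart land
# 120 pixels apart in the bird's-eye view.
scale = pixels_per_foot((120.0, 80.0), (120.0, 200.0), known_feet=10.0)

# Number of bird's-eye pixels that correspond to 6 feet.
min_distance_px = 6.0 * scale
```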

Detection

The second step of the pipeline involves applying a pedestrian detector to the perspective views to draw a bounding box around each pedestrian. For simplicity, we use an open-source pedestrian detection network based on the Faster R-CNN architecture. To clean up the output bounding boxes, we apply minimal post-processing such as non-max suppression (NMS) and various rule-based heuristics. These rules should be grounded in real-life assumptions (such as humans being taller than they are wide), so as to minimize the risk of overfitting.
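The post-processing described above can be illustrated with a small sketch. It assumes boxes in `(x1, y1, x2, y2)` pixel format with one confidence score each; the greedy NMS variant, the 0.5 IoU threshold, and the single "taller than wide" rule are illustrative choices, not the exact rules used in the production system.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


def is_plausible_person(box):
    """Rule-based heuristic: a standing person is taller than they are wide."""
    x1, y1, x2, y2 = box
    return (y2 - y1) > (x2 - x1)


# Hypothetical detector output: boxes 0 and 1 cover the same person,
# and box 3 is wider than it is tall.
boxes = [(100, 100, 150, 250), (105, 102, 155, 255),
         (300, 120, 340, 240), (400, 200, 520, 260)]
scores = [0.90, 0.80, 0.75, 0.60]

kept = [i for i in non_max_suppression(boxes, scores) if is_plausible_person(boxes[i])]
```

Here `kept` holds the indices of the boxes that survive both steps. Detection libraries typically ship an optimized NMS (for example `torchvision.ops.nms`), so the pure-Python loop is only meant to make the logic visible.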

Measurement

Now, given the bounding box for each person, we estimate their (x, y) location in the bird’s-eye view. Since the calibration step outputs a transformation for the ground plane, we apply that transformation to the bottom-center point of each person’s bounding box, which gives their position in the bird’s-eye view. The last step is to compute the bird’s-eye view distance between every pair of people and convert it to a real-world distance using the scale factor estimated during calibration. We highlight in red any people who are closer together than the minimum acceptable distance, and draw a line between them for emphasis.
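A minimal sketch of this measurement step follows. It assumes a 3x3 homography `H` stored as nested lists and a `pixels_per_foot` scale, both produced by calibration; the function names and the sample values (including the identity matrix used as a placeholder homography) are hypothetical.

```python
import itertools
import math


def to_birds_eye(H, box):
    """Project the bottom-center of an (x1, y1, x2, y2) box to the bird's-eye view."""
    x1, y1, x2, y2 = box
    x, y = (x1 + x2) / 2.0, y2  # the point where the person meets the ground
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def find_violations(H, boxes, pixels_per_foot, min_feet=6.0):
    """Return index pairs (i, j) of people standing closer than min_feet."""
    points = [to_birds_eye(H, box) for box in boxes]
    violations = []
    for (i, p), (j, q) in itertools.combinations(enumerate(points), 2):
        feet = math.hypot(p[0] - q[0], p[1] - q[1]) / pixels_per_foot
        if feet < min_feet:
            violations.append((i, j))
    return violations


# Placeholder values: the identity matrix stands in for a real calibration result.
H = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
boxes = [(100, 100, 140, 200), (150, 110, 190, 210), (400, 100, 440, 200)]

too_close = find_violations(H, boxes, pixels_per_foot=12.0)
```

Each pair in `too_close` identifies two people to highlight in red and connect with a line. Comparing every pair is quadratic in the number of people, which is usually acceptable for the handful of pedestrians visible in a single camera frame.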

As medical experts point out, until a vaccine becomes available, social distancing is our best tool for mitigating the coronavirus pandemic, including as we open up the economy. Our goal in creating this social distancing detection solution, and in sharing it at such an early stage, is to help our customers and to encourage others to explore new ideas to keep us safe.

Note: The rise of computer vision has opened up important questions about privacy and individual rights; our current social distancing monitoring system does not recognize individuals, and we urge anyone using such a system to do so with transparency and only with informed consent.