Overcoming Challenges in Deep Learning Model and Framework Comparison

Clean data provides a clear path forward

Companies across many industries rely on computer vision systems to improve productivity, increase efficiency, and drive revenue. Adding deep learning (DL) to a computer vision system lets businesses take their automation further, because the technology delivers accurate, effective, and flexible automated inspection. Several computer vision platforms are available, and before comparing the deep learning models they offer, you need to address a few key considerations upfront.

In the ever-evolving world of machine learning (ML), new model architectures are constantly being developed, each posting better performance on benchmark datasets. However, these benchmark datasets are usually fixed, unlike the dynamic datasets most companies encounter when deploying DL models in real-world settings such as factory floors. This makes it difficult to compare machine learning models directly, especially those built on advanced deep learning architectures.

To ensure a fair and transparent evaluation, companies must follow several key guidelines when comparing models. Below are actionable tips on how to compare machine learning models effectively, particularly when dealing with complex deep learning systems.

How to Compare Machine Learning Models: 3 Essential Tips

Tip 1: Level the Playing Field

To ensure an unbiased comparison between two machine learning models, you should start with the data. Use the same datasets and data splits so that both models are trained and evaluated on identical inputs. For deep learning models, the test datasets must also reflect actual production data for relevance. Implementing agreement-based labeling can reduce ambiguity and ensure high-quality, consistent data.
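One way to guarantee that both models see exactly the same splits is to derive each image’s split from a stable hash of its ID, rather than from a random shuffle that may differ between tools. The sketch below assumes every image has a unique string ID; the function names and the 20% test fraction are illustrative choices, not part of any particular platform.

```python
import hashlib


def assign_split(image_id: str, test_fraction: float = 0.2) -> str:
    """Deterministically map an image ID to 'train' or 'test'."""
    digest = hashlib.sha256(image_id.encode("utf-8")).hexdigest()
    # Interpret the first 8 hex digits (32 bits) as a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "test" if bucket < test_fraction else "train"


def split_dataset(image_ids, test_fraction: float = 0.2) -> dict[str, list[str]]:
    """Split IDs the same way every time, regardless of input order."""
    splits: dict[str, list[str]] = {"train": [], "test": []}
    for image_id in sorted(image_ids):
        splits[assign_split(image_id, test_fraction)].append(image_id)
    return splits


ids = [f"img_{i:03d}" for i in range(200)]
splits = split_dataset(ids)
print(len(splits["train"]), len(splits["test"]))
```

Both teams can run the same function on the same ID list (or share the resulting manifest), so neither model benefits from a luckier split. Hashing IDs does not detect visually similar images, so near-duplicates still have to be removed before splitting.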

Eliminating duplicates, both within each set and between the training and test sets, ensures that the models can’t “cheat” by memorizing test images and delivering falsely high results. All models under evaluation must also be trained on datasets of comparable size, because a model trained on a significantly larger dataset will typically yield better results. At the same time, the test sets must be large enough to allow a meaningful comparison with low metric variance. For instance, if a test set contains only 10 defect images, the difference between 80% and 90% recall is a single image (8 versus 9 defects detected) and could simply be due to random chance.
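To see why 10 defect images are too few, you can put a confidence interval around each recall value. The sketch below uses the Wilson score interval, a standard interval for a binomial proportion; treating each defect image as an independent trial is a simplifying assumption.

```python
import math


def wilson_interval(successes: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion such as recall."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z**2 / total
    center = (p + z**2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return center - half_width, center + half_width


# Same recall values (80% vs. 90%) at two different test set sizes.
for hits, total in [(8, 10), (9, 10), (800, 1000), (900, 1000)]:
    low, high = wilson_interval(hits, total)
    print(f"recall {hits}/{total}: {low:.2f} to {high:.2f}")
```

With 10 defect images, the intervals for 80% and 90% recall (roughly 0.49 to 0.94 and 0.60 to 0.98) overlap almost entirely; with 1,000 defect images, the same two recall values produce intervals that do not overlap at all.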

Tip 2: Consistent Models and Metrics

In addition to putting the datasets on an even playing field, make sure the models under comparison are configured consistently. For instance, they should use comparable preprocessing and post-processing: if one model relies on a custom preprocessing or post-processing step, the same step should be added to the other model. Examples of such steps include background subtraction, image registration, and morphological operations. Both models should also use comparable input image sizes. If a customer compares a model that uses 256 x 256 images to one that uses 512 x 512 images, performance will differ for that reason alone.
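A lightweight way to enforce this is to describe each model’s pipeline in a small config object and compare the two before any training starts. The `PipelineConfig` fields and step names below are hypothetical placeholders; a real comparison would list whatever processing each tool actually applies.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PipelineConfig:
    """Hypothetical description of the processing applied around a model."""
    input_size: tuple[int, int]           # (height, width) in pixels
    preprocessing: tuple[str, ...] = ()   # ordered preprocessing step names
    postprocessing: tuple[str, ...] = ()  # ordered post-processing step names


def config_mismatches(a: PipelineConfig, b: PipelineConfig) -> dict:
    """Return {field: (value_in_a, value_in_b)} for every field that differs."""
    fields_a, fields_b = asdict(a), asdict(b)
    return {name: (fields_a[name], fields_b[name])
            for name in fields_a if fields_a[name] != fields_b[name]}


baseline = PipelineConfig((512, 512), ("background_subtraction",), ("morphology_open",))
candidate = PipelineConfig((256, 256), (), ("morphology_open",))
print(config_mismatches(baseline, candidate))
```

An empty result means the pipelines match; here the check flags both the different input size and the missing background subtraction step.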

Each model should be trained several times to account for randomness during training, and neither model should be overfit to the test set. This kind of overfitting can occur when a lot of time is spent tuning hyperparameters, such as batch size, learning rate schedule, number of epochs, and dropout probability, to improve performance on the development or test set.
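After training each model several times with different random seeds, it helps to report the spread of the metric rather than a single run. The sketch below assumes one test score has already been collected per run; the scores are made-up placeholders, and the overlap check is a rough heuristic, not a formal significance test.

```python
import statistics


def summarize_runs(scores: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of one metric across training runs."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to estimate run-to-run spread")
    return statistics.mean(scores), statistics.stdev(scores)


def ranges_overlap(scores_a: list[float], scores_b: list[float]) -> bool:
    """Rough check: do the mean +/- one standard deviation ranges overlap?"""
    mean_a, sd_a = summarize_runs(scores_a)
    mean_b, sd_b = summarize_runs(scores_b)
    return mean_a - sd_a <= mean_b + sd_b and mean_b - sd_b <= mean_a + sd_a


# Placeholder recall values from five seeds per model (not real results).
model_a = [0.81, 0.84, 0.79, 0.83, 0.82]
model_b = [0.83, 0.80, 0.85, 0.82, 0.84]
print(summarize_runs(model_a), summarize_runs(model_b))
print("Difference within run-to-run noise:", ranges_overlap(model_a, model_b))
```

If the ranges overlap, as they do for these placeholder numbers, a one-point gap between the models is not strong evidence that either one is better.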

Furthermore, make sure both models are evaluated with the same metric implementations, since a metric with the same name can be computed in different ways. Sources of difference include the threshold used to decide what counts as a true positive, false positive, or false negative, and the format of the output predictions fed into the metrics code. Models should be compared using metrics appropriate to the use case. Many visual inspection applications have inherent class imbalance, and in these cases metrics based on precision and recall are preferred over accuracy, which can be biased toward the more frequent classes.
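The effect of class imbalance is easy to demonstrate. In the hypothetical test set below, 5 of 100 images contain defects, and a useless model that labels every image as good still scores 95% accuracy while catching none of the defects. Returning 0.0 precision when a model makes no positive predictions is a convention chosen for this sketch.

```python
def binary_metrics(y_true, y_pred, positive="defect"):
    """Accuracy, precision, and recall with respect to one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


y_true = ["good"] * 95 + ["defect"] * 5
always_good = ["good"] * 100
print(binary_metrics(y_true, always_good))
# → {'accuracy': 0.95, 'precision': 0.0, 'recall': 0.0}
```

Recall exposes the failure immediately, while accuracy hides it behind the majority class.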

Tip 3: Real-Life Example: Ditch the Duplicates

Seeking to evaluate its internal DL model against LandingLens, a global telecommunications company sent LandingAI a set of 113 images with bounding box annotations, along with defect classes and results from its internal model, some of which were suboptimal. In the first comparison of the company’s model against the LandingLens model, the LandingLens results were poor, and the company seemed content to stick with its existing solution.

When LandingAI investigated why this had happened, it found that the company had run its model using all of its data, while LandingAI had used just two of the 15 image sets, causing LandingLens to underperform. LandingAI then took a single dataset and ran LandingLens against the existing model on it. Again, LandingLens underperformed, with a mean average precision (mAP) of 0.27 compared with 0.67 for the company’s model.

Digging deeper, LandingAI engineers noticed that the company’s model was nearly perfect when its predictions were compared with the ground truth. They also found that the two models used different augmentations and metrics, including different ways of calculating mAP, so the team took a closer look at the data itself. After removing duplicates and highly similar images, only 41 of the 113 images were left, with only two bounding boxes. In other words, about 64% of the data (72 of 113 images) was duplicated. The existing model had memorized the training data, which was nearly identical to the development data, producing artificially high metrics.
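Near-duplicate detection of this kind is often done with perceptual hashing. The sketch below implements a simple average hash on grayscale images that are assumed to be already downscaled to 8 x 8 pixels (in practice, an imaging library would handle loading and resizing). The Hamming-distance threshold of 5 is an arbitrary illustrative value, and this is not necessarily how LandingAI performed the cleanup.

```python
def average_hash(pixels: list[list[float]]) -> int:
    """Average hash: one bit per pixel, set when the pixel is at least the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value >= mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def deduplicate(images, max_distance: int = 5) -> list[str]:
    """Keep the first image of each near-duplicate group (greedy, O(n^2))."""
    kept_names, kept_hashes = [], []
    for name, pixels in images:
        h = average_hash(pixels)
        if all(hamming_distance(h, k) > max_distance for k in kept_hashes):
            kept_names.append(name)
            kept_hashes.append(h)
    return kept_names


# Synthetic 8 x 8 images: b is a slightly brighter copy of a, c is a's negative.
a = [[row * 8 + col for col in range(8)] for row in range(8)]
b = [[value + 3 for value in row] for row in a]
c = [[255 - value for value in row] for row in a]
print(deduplicate([("a", a), ("b", b), ("c", c)]))  # → ['a', 'c']

# Share of the 113 images that turned out to be duplicates or near-duplicates.
print(f"{(113 - 41) / 113:.0%}")  # → 64%
```

The brightened copy hashes identically to the original and is dropped, while the genuinely different image is kept. The last line reproduces the arithmetic from the example: removing 72 of 113 images is about 64% of the data.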

Essentially, the company’s existing model was simply doing a better job of overfitting. The company had not known that its data contained duplicates, and the project helped everyone realize that the choice of model matters little when the data itself is flawed. Starting with a clean dataset without duplicates would have produced much better results, much faster. The company therefore began using LandingLens to label images, reach consensus on labels, and quickly build a model based on good data to avoid such issues in the future.

Summary: Data Lights the Way

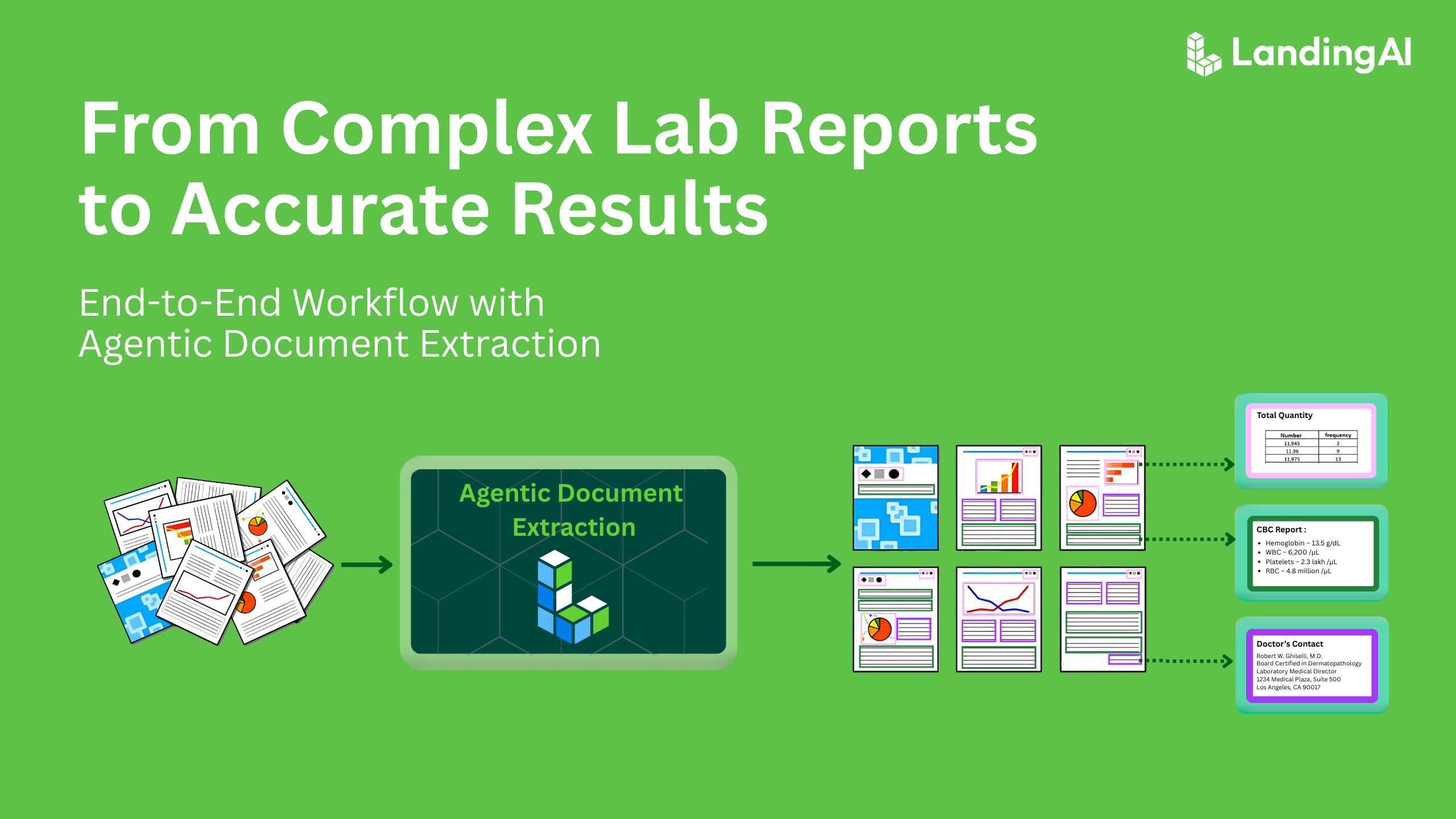

When conducting a deep learning model comparison for automated inspection, the evaluation process should start with data. A data-centric approach emphasizes the quality of the data used to train deep learning models, rather than tweaking the model architecture or adjusting statistical sampling methods. This means prioritizing accurate classification, grading, and labeling of defect images instead of focusing solely on the volume of data.

AI research shows that if 10% of your data is mislabeled, you’ll need 1.88 times as much new data to achieve the same level of accuracy as with a clean dataset. If 30% of the data is mislabeled, the requirement jumps to 8.4 times as much new data. Taking a data-centric approach when comparing deep learning models and frameworks can save significant time and effort by ensuring higher-quality data from the start.

A data-centric deep learning platform such as LandingLens offers useful tools like the digital Label Book, which lets multiple team members, wherever they are located, collaboratively define defect categories for a specific use case. The platform also automatically detects outliers in labeled data, fostering consensus among quality inspectors and improving the performance of your deep learning models.
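The consensus idea can be illustrated with a simple majority vote over several inspectors’ labels. This is a generic sketch, not how LandingLens or its Label Book works: the two-thirds agreement threshold and the defect class names are assumptions, and images that fall below the threshold are simply flagged for the team to review and discuss.

```python
from collections import Counter


def consensus_labels(labels_by_image: dict[str, list[str]], min_agreement: float = 2 / 3):
    """Split images into agreed labels and a list that needs human review."""
    agreed: dict[str, str] = {}
    needs_review: list[str] = []
    for image_id, labels in labels_by_image.items():
        # Most frequent label and how many inspectors chose it.
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            agreed[image_id] = label
        else:
            needs_review.append(image_id)
    return agreed, needs_review


annotations = {
    "img_01": ["scratch", "scratch", "dent"],
    "img_02": ["scratch", "dent", "no_defect"],
    "img_03": ["dent", "dent", "dent"],
}
agreed, needs_review = consensus_labels(annotations)
print(agreed)        # → {'img_01': 'scratch', 'img_03': 'dent'}
print(needs_review)  # → ['img_02']
```

Raising `min_agreement` to 1.0 would require unanimous labels, trading more review work for cleaner training data.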

Following the right guidelines and focusing on obtaining clean, accurately labeled data will set you up to train and deploy DL models that succeed in your computer vision solutions.