Introduction

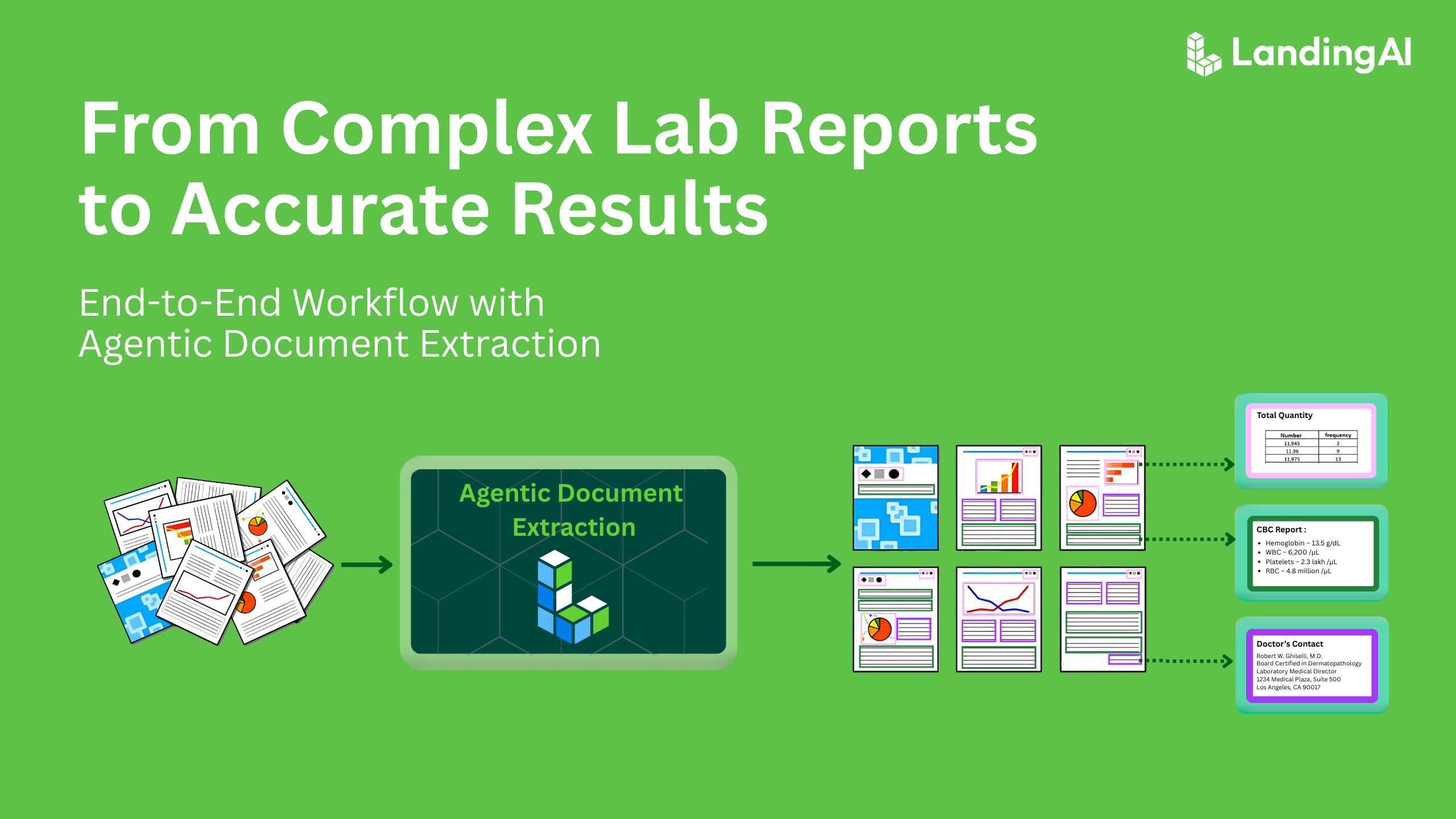

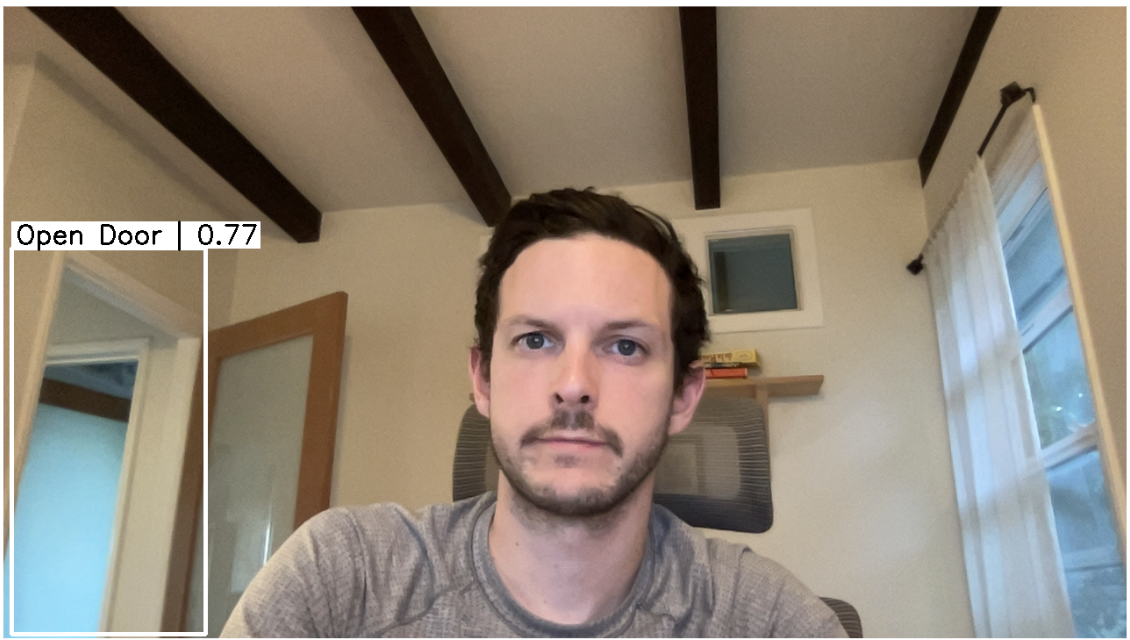

In this tutorial, you will learn how to build a simple “Open Door Detector” app with LandingLens, a computer vision platform. You will then learn how to deploy it to the cloud and use the landingai-python library to run predictions on new images from your terminal. If you use Zoom (or any other video conferencing tool), this custom computer vision model could automatically detect whether you left your door open in the background!

Requirements

- LandingLens (register for a free trial here)

- Python (install for free here)

- Access to your computer’s terminal

- Webcam

- A door that you can open and close

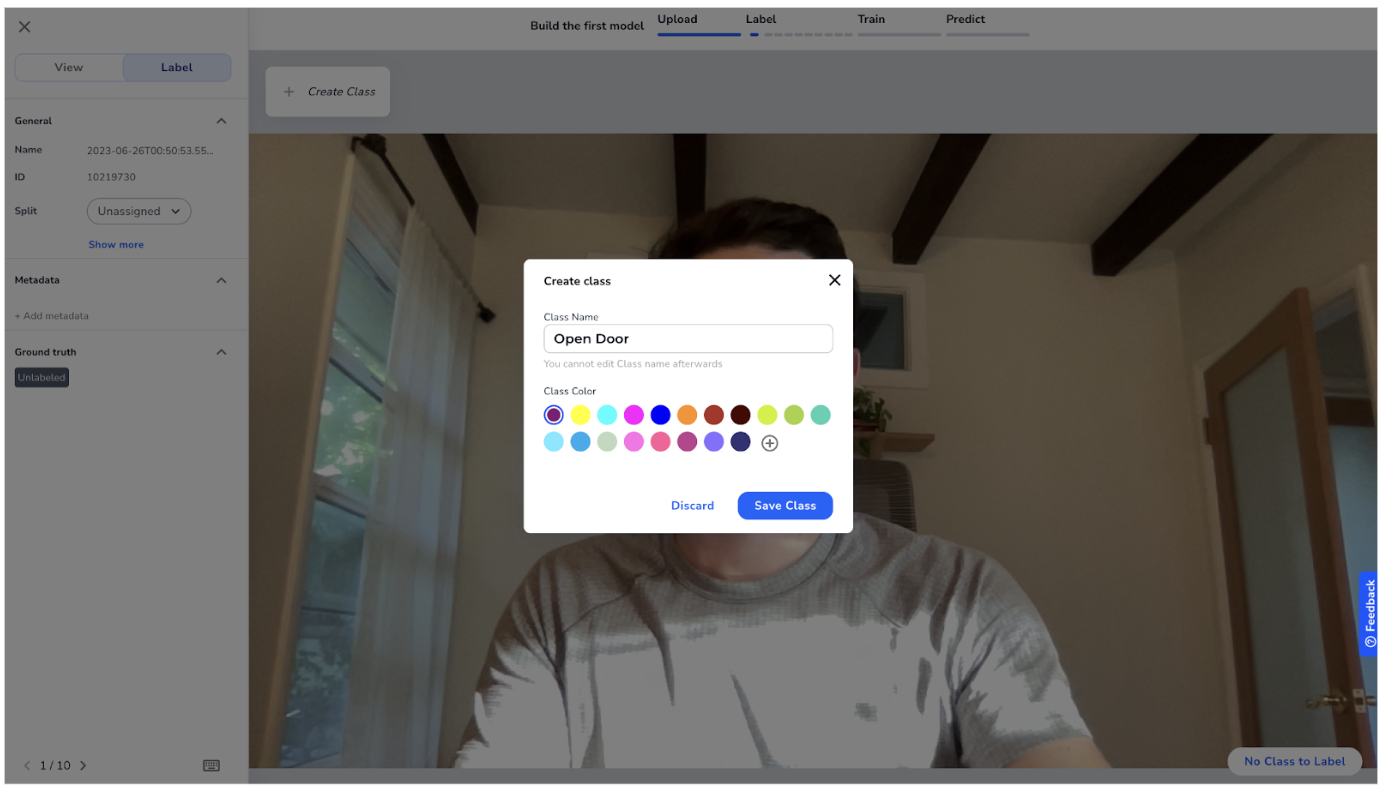

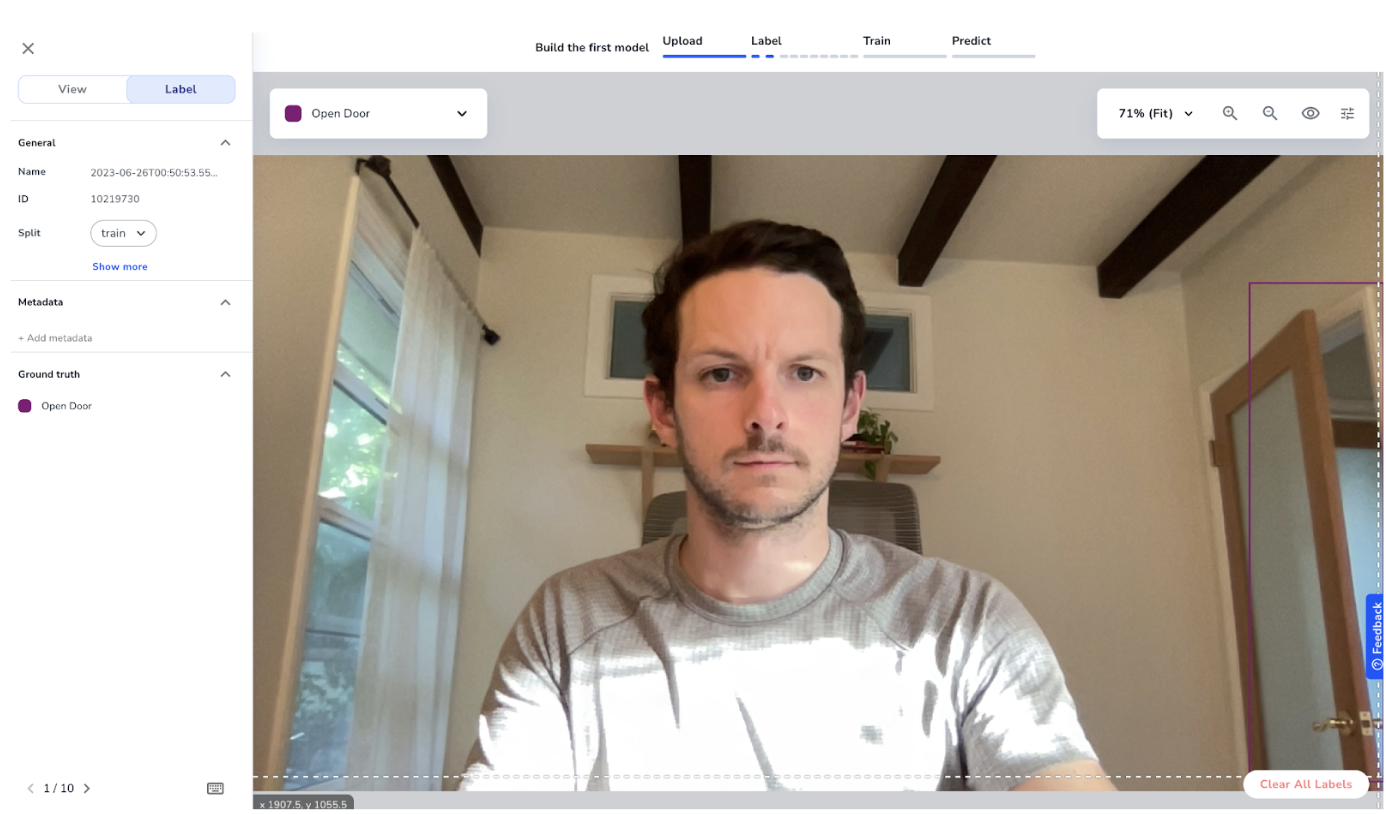

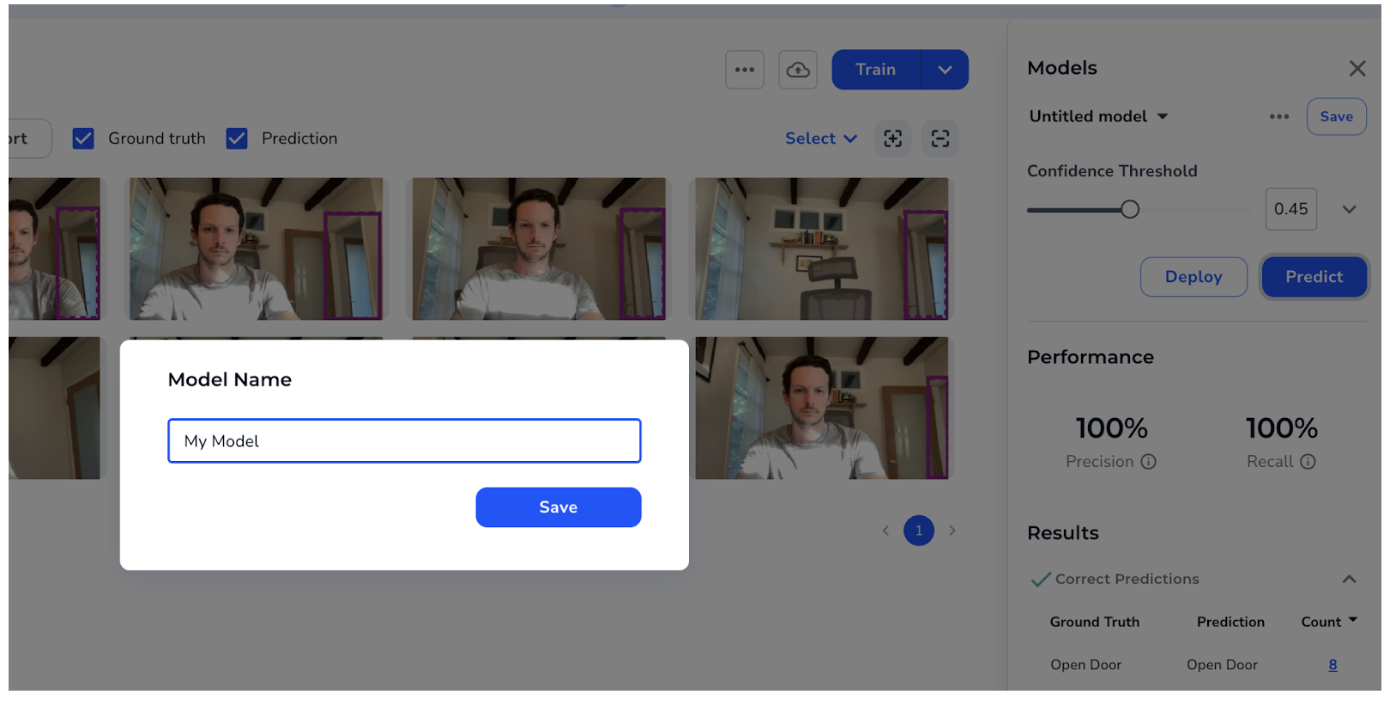

Build the Model

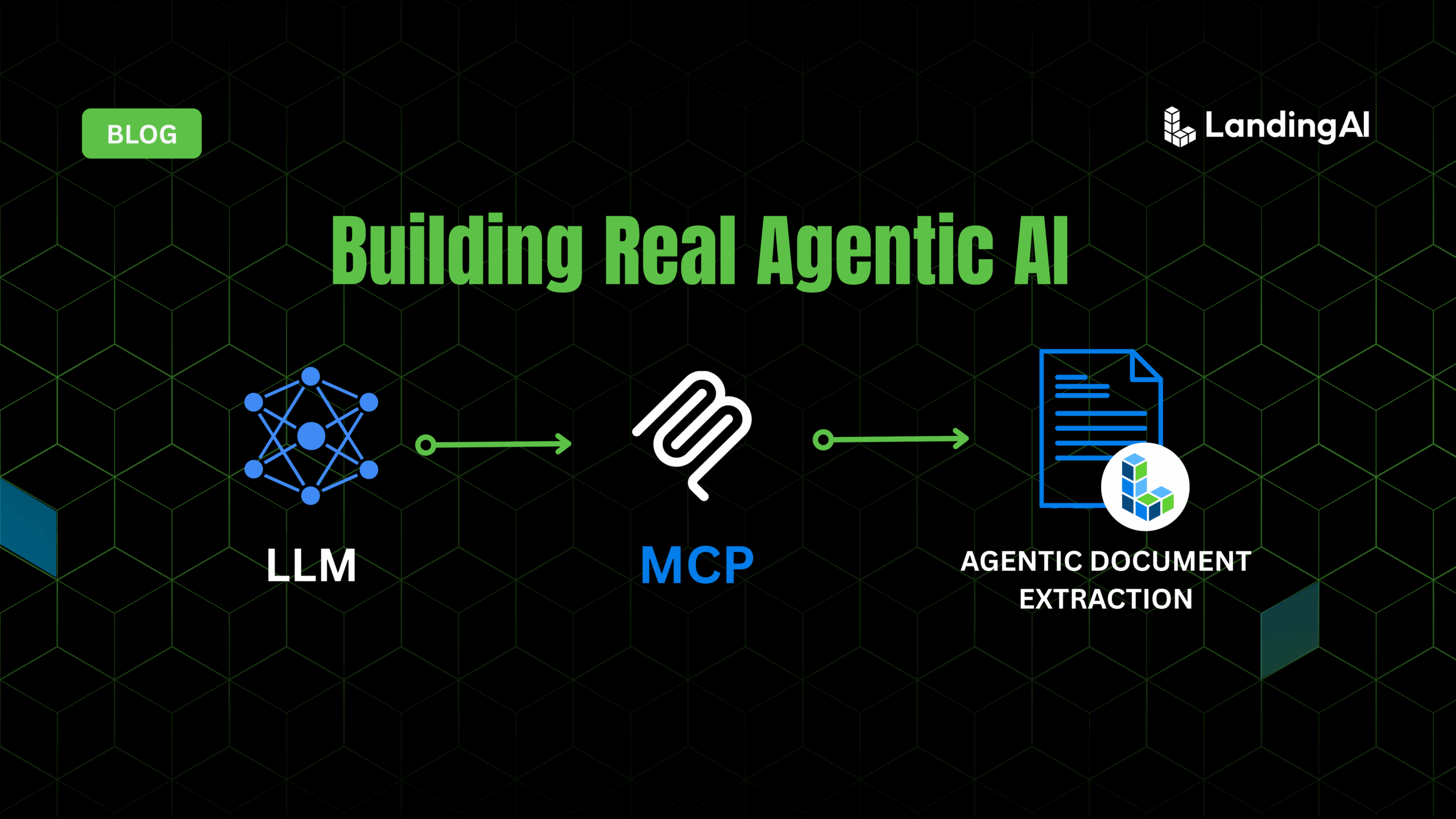

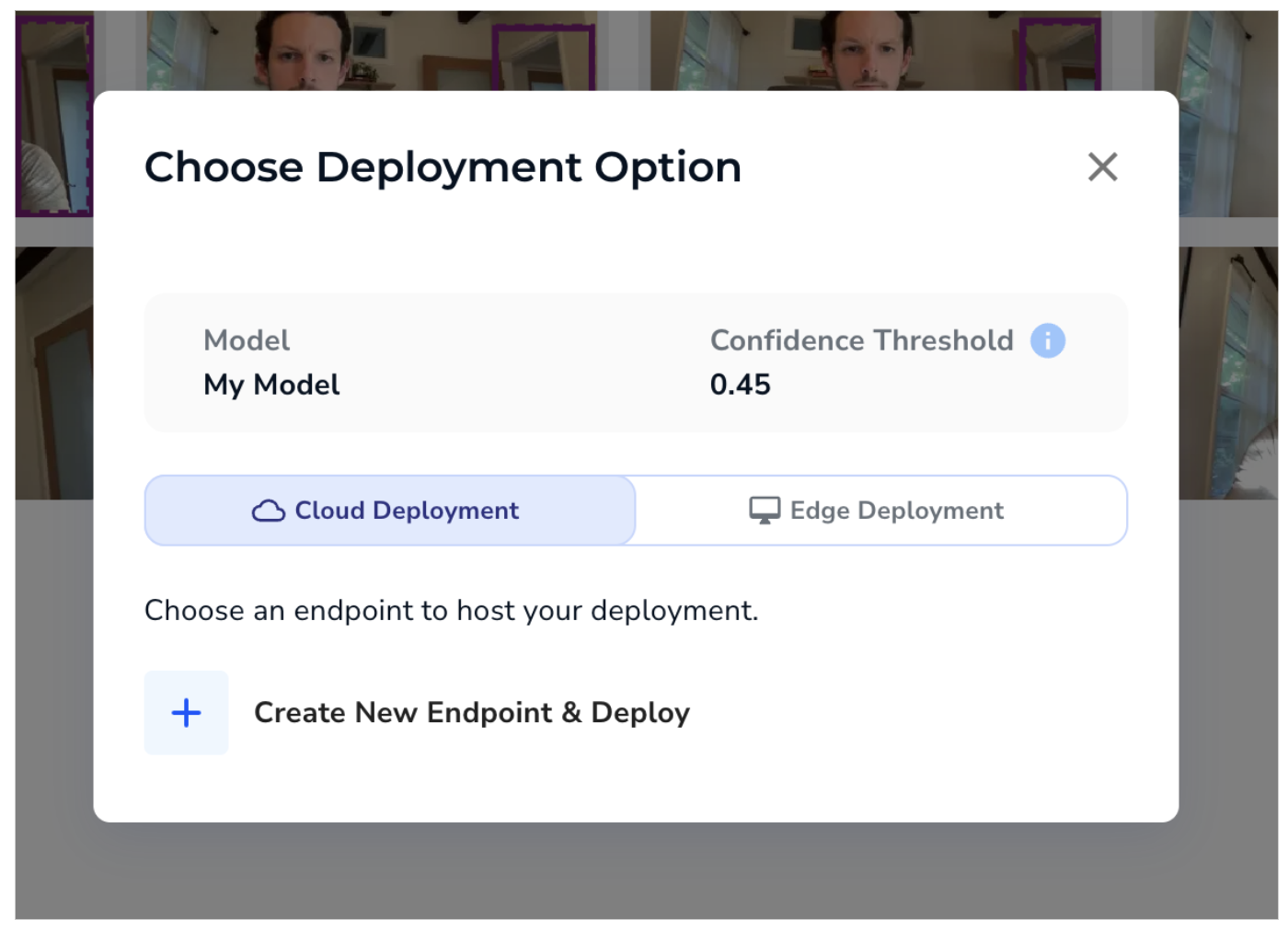

First, create a new Object Detection project in LandingLens and use your webcam to upload some pictures.

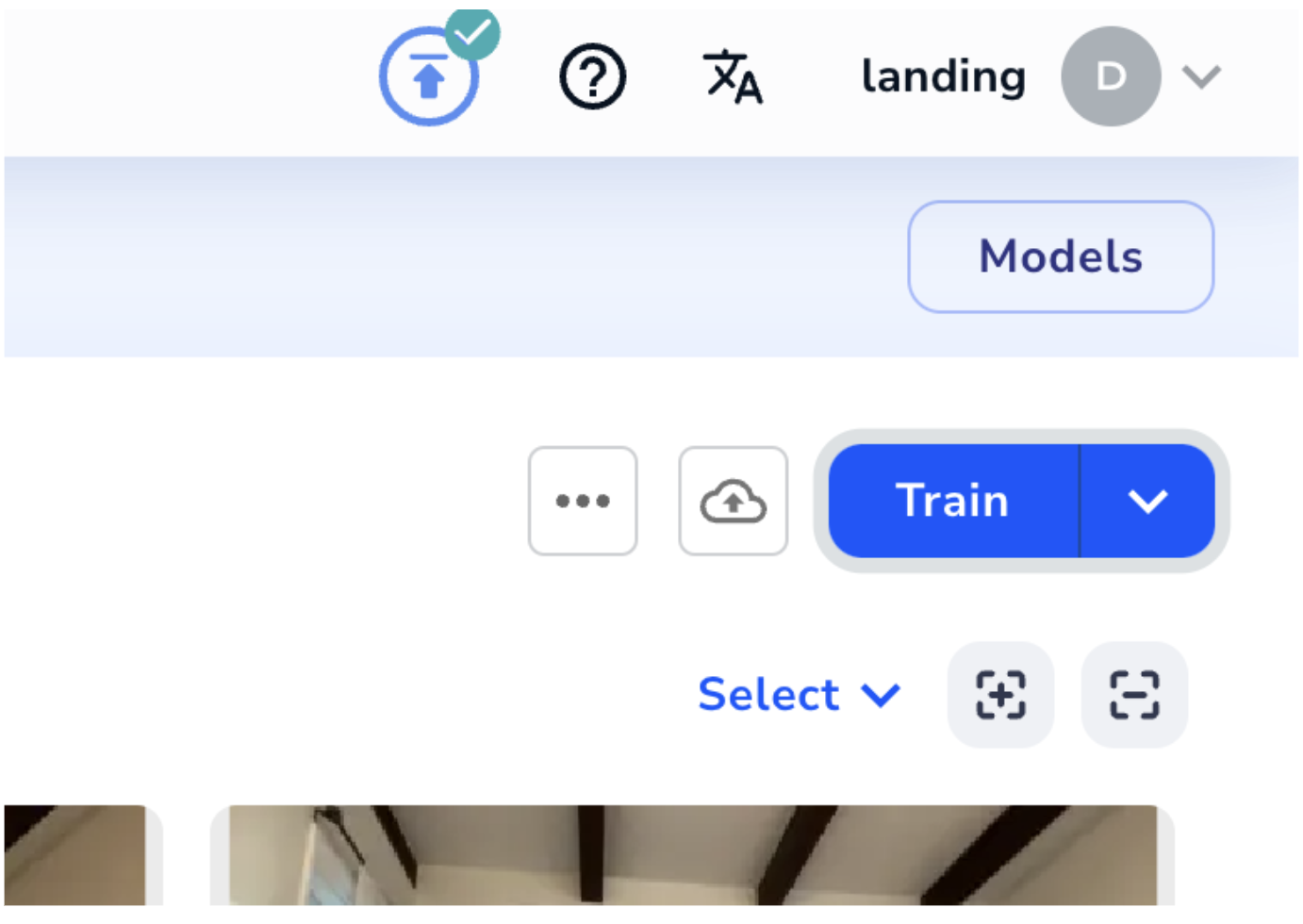

Call the Model From the Python Library

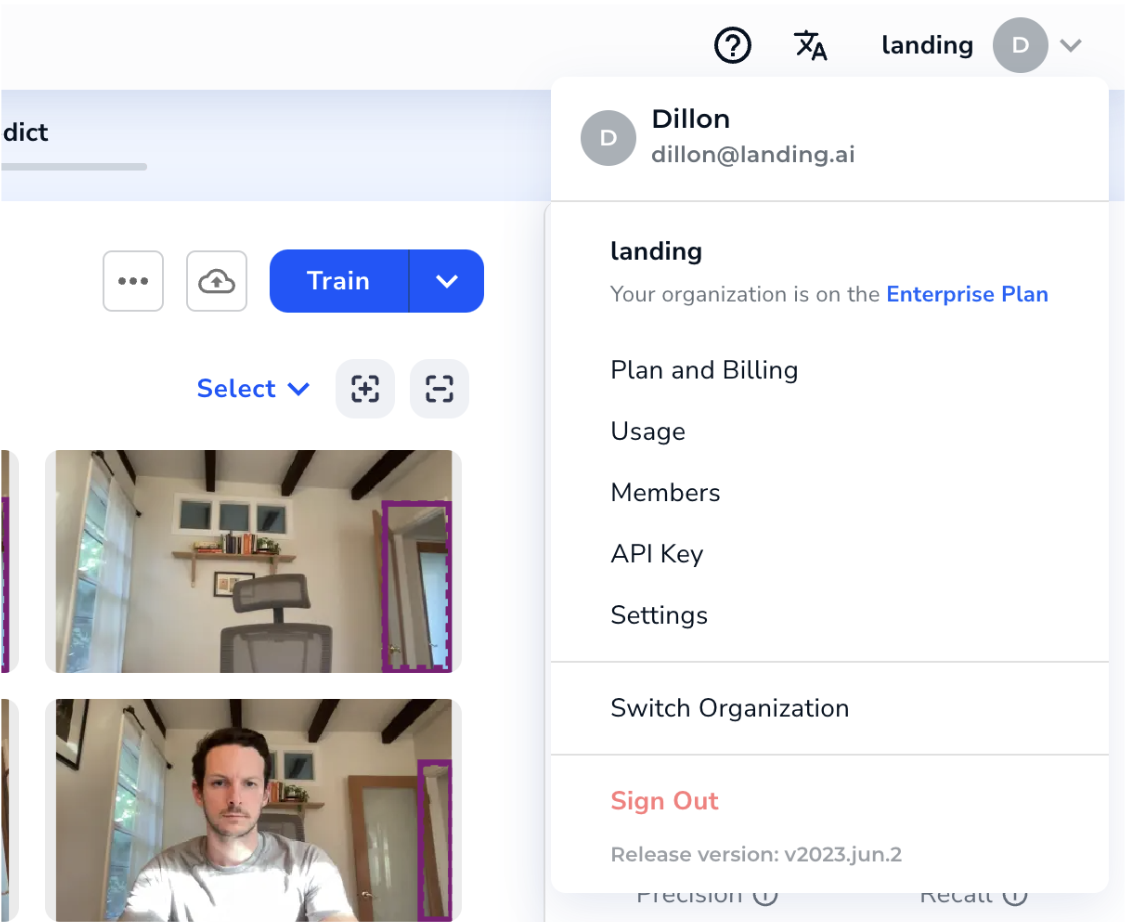

First, you’ll want to generate an API key to run inference on the model. You can find this by clicking the User Menu in the top right corner and selecting API Key.

Then install the landingai library from your terminal:

```shell
pip install landingai
```

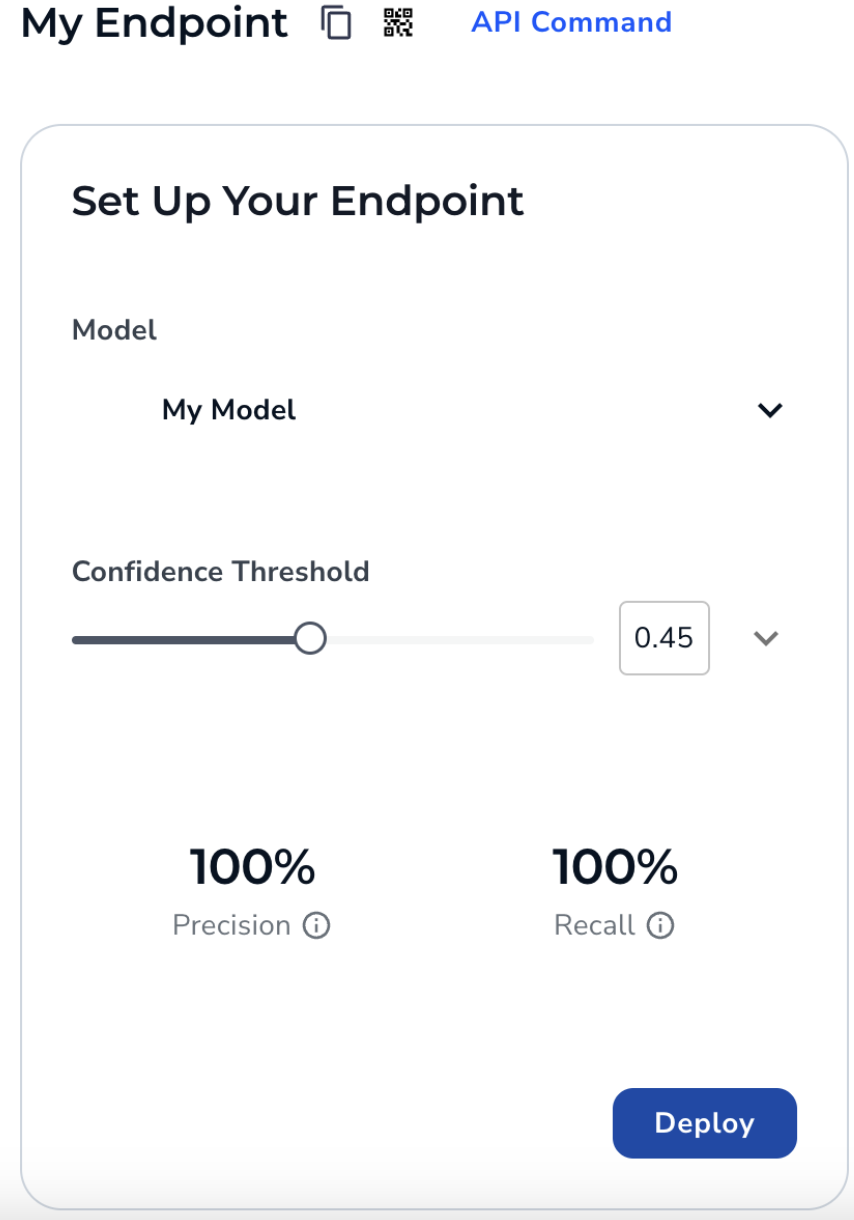

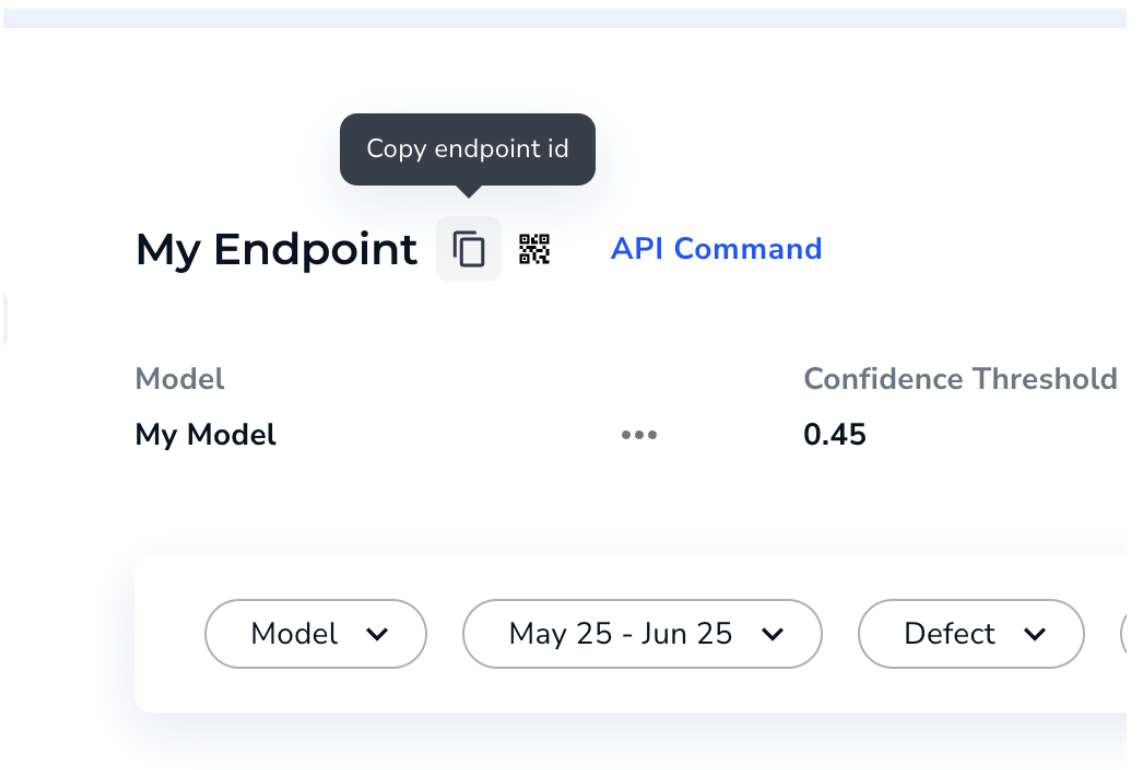

Now you’re ready to use the Python library! The main class you’ll be using is the “Predictor” class, which sends images to your endpoint, where the model makes predictions on them. You can create a Predictor instance by importing the class from landingai.predict and passing it the endpoint ID and API key.

```python
from landingai.predict import Predictor

endpoint_id = "place your endpoint ID here"
api_key = "place your api key here"

door_model = Predictor(endpoint_id, api_key=api_key)
```
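Hard-coding the API key in a script makes it easy to leak by accident. As a minimal sketch, the helper below reads both values from environment variables instead. The variable names `DOOR_ENDPOINT_ID` and `DOOR_API_KEY` and the function `load_credentials` are hypothetical names chosen for this example; they are not defined by LandingLens or the landingai library.

```python
import os


def load_credentials(env=None):
    """Return (endpoint_id, api_key) read from environment variables.

    DOOR_ENDPOINT_ID and DOOR_API_KEY are hypothetical names for this sketch.
    """
    # Allow a plain dict to be passed in, which makes the helper easy to test.
    env = os.environ if env is None else env
    required = ("DOOR_ENDPOINT_ID", "DOOR_API_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return env["DOOR_ENDPOINT_ID"], env["DOOR_API_KEY"]
```

You could then write `endpoint_id, api_key = load_credentials()` and pass the two values to `Predictor` in the same way as before.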

Now, take a photo with your webcam (or download one of the previous webcam photos from the LandingLens project) and save it as “my_image.png”. We can open the image using the Image class from the Python Imaging Library (PIL) and then convert it to a NumPy array to pass to the predictor. We can also import “overlay_predictions” to see the predictions on the image.

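```python
import numpy as np
from PIL import Image

from landingai.visualize import overlay_predictions

# Load the photo and convert it to a NumPy array for the predictor
image = np.asarray(Image.open("my_image.png"))

# Send the image to the endpoint and get the predictions back
preds = door_model.predict(image)

# Draw the predictions on the image and save the result
image_with_preds = overlay_predictions(preds, image)
image_with_preds.save("my_image_with_preds.png")
```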

The last line saves the image with the predictions overlaid as “my_image_with_preds.png”, which you can then view on your computer.
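Besides drawing the predictions, you may want a simple yes/no answer. The sketch below assumes that each prediction exposes a `label_name` and a `score` attribute, and that the class you labeled in LandingLens is called "open door". The label name, the 0.5 threshold, and the helper `door_is_open` are all assumptions to adjust for your own project. A small stand-in class is used so the example runs without calling the model.

```python
from dataclasses import dataclass


@dataclass
class FakePrediction:
    """Stand-in for a model prediction (hypothetical, for illustration only)."""

    label_name: str
    score: float


def door_is_open(preds, label="open door", min_score=0.5):
    """Return True if any prediction has the given label with enough confidence."""
    return any(p.label_name == label and p.score >= min_score for p in preds)


example_preds = [FakePrediction("open door", 0.91), FakePrediction("open door", 0.32)]
print(door_is_open(example_preds))  # True
print(door_is_open([]))  # False
```

With real predictions, you would call `door_is_open(preds)` on the list returned by `door_model.predict(image)`.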

You can also run predictions on frames captured directly from your webcam:

```python
from landingai.predict import Predictor
from landingai.pipeline.image_source import NetworkedCamera

endpoint_id = "place your endpoint ID here"
api_key = "place your api key here"

door_model = Predictor(endpoint_id, api_key=api_key)

# 0 selects your webcam device
camera = NetworkedCamera(0)

for i, frame in enumerate(camera):
    # only run for 5 iterations so it doesn't run indefinitely
    if i >= 5:
        break
    # the image_src="overlay" tells it to save the image from the
    # overlay operation, not the original image
    frame.run_predict(predictor=door_model).overlay_predictions().save_image(
        filename_prefix=f"webcam.{i}", image_src="overlay"
    )
```

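Each annotated frame is saved with a filename starting with “webcam.0”, “webcam.1”, and so on, and you can open the images to view the predictions!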
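A single frame can produce a spurious detection, so for something like a video call you might only react when the door looks open in several frames in a row. The following is a minimal sketch of that idea. The class name `OpenDoorMonitor` and the default of 3 consecutive frames are hypothetical choices, and the class takes a plain boolean per frame rather than calling the model itself.

```python
class OpenDoorMonitor:
    """Report an open door only after N consecutive positive frames."""

    def __init__(self, required_frames=3):
        self.required_frames = required_frames
        self.streak = 0

    def update(self, detected):
        """Record one frame's result; return True once the streak is long enough."""
        # A negative frame resets the streak, so isolated detections are ignored.
        self.streak = self.streak + 1 if detected else 0
        return self.streak >= self.required_frames


monitor = OpenDoorMonitor(required_frames=3)
results = [monitor.update(d) for d in [True, True, False, True, True, True]]
print(results)  # [False, False, False, False, False, True]
```

Inside the webcam loop, you would pass one boolean per frame to `monitor.update(...)`, derived from that frame's predictions.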

Conclusion

In this tutorial, you learned to create a computer vision application using Python—from starting a new Object Detection project to training, deploying, and calling your model from the Python Library for integration into a real application. To learn more about the APIs, check out our GitHub page.