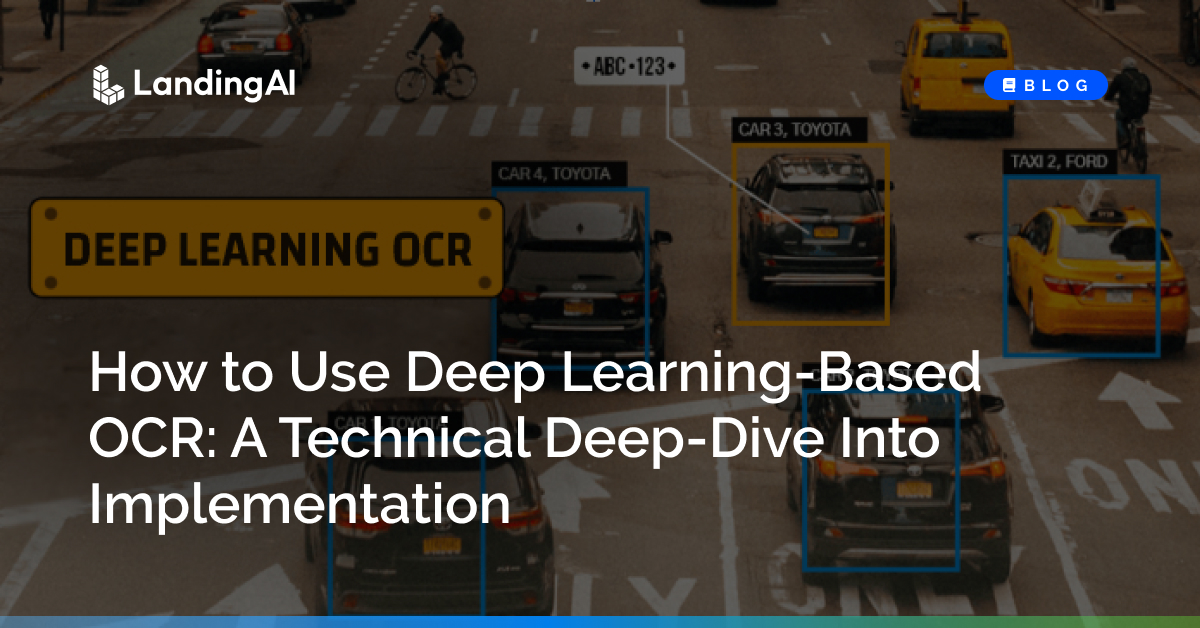

Businesses across diverse industries are increasingly adopting deep learning OCR and AI document recognition technologies to streamline processes, enhance efficiency, and improve accuracy.

Here we will cover:

- Introduction to OCR technology

- How to Implement an OCR AI Model Using Deep Learning – Step-by-Step Guide

- Installation and Setup

- Frame Extraction

- License Plate Detection

- Image Cropping

- OCR and Data Retrieval

- Conclusion

Introduction to OCR technology

Deep-learning-based OCR technology helps organizations in many industries streamline operations, improve accuracy, and extract actionable insights from unstructured data such as scanned documents, photos, and video. As AI and machine learning continue to advance, OCR solutions, especially those built on deep learning, can further optimize workflows and drive business value.

Some of the key benefits of a system that combines OCR, machine learning, and computer vision include:

- Automation: Streamline the document processing workflow, reduce manual effort and enhance operational efficiency.

- Accuracy: Deep learning models improve the accuracy of text recognition and data extraction, minimizing errors and enhancing data quality.

- Scalability: Efficiently process large volumes of documents, adapting to the organization’s growth without sacrificing accuracy or performance.

- Cost Efficiency: By automating document processing, an organization saves time and reduces expenses related to manual data entry and error correction.

- Compliance: Enforce data validation rules and regulatory standards, ensuring compliance with relevant guidelines.

In this technical deep-dive, let’s explore a specific use case with LandingLens to better understand the practical application of OCR technology.

How to Implement an OCR AI Model Using Deep Learning – Step-by-Step Guide

In this tutorial, we guide you through the process of building a computer vision application with LandingLens that detects and reads license plates in videos. Each section explains one step of the process conceptually and includes practical code examples. The process starts with frame extraction, followed by the detection and cropping of license plates, and finally optical character recognition (OCR) for data retrieval.
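The stages after frame extraction can be pictured as a chain of functions, each feeding the next. The following is a minimal sketch of that data flow, not the tutorial's code: `run_pipeline` and the `fake_*` stages are hypothetical stand-ins, and plain strings take the place of images and LandingLens predictions so the example runs on its own.

```python
from typing import Callable, List, Sequence, Tuple

# Placeholder types: in the tutorial these would be images and
# LandingLens predictions; plain strings keep the sketch runnable.
Frame = str
Crop = str
Box = Tuple[int, int, int, int]


def run_pipeline(
    frames: Sequence[Frame],
    detect: Callable[[Frame], List[Box]],
    crop: Callable[[Frame, List[Box]], List[Crop]],
    read_text: Callable[[Crop], str],
) -> List[str]:
    """Chain detection -> cropping -> OCR over already-extracted frames."""
    plates: List[str] = []
    for frame in frames:
        boxes = detect(frame)
        if not boxes:
            continue  # no plate in this frame, nothing to crop or read
        for plate_img in crop(frame, boxes):
            plates.append(read_text(plate_img))
    return plates


# Hypothetical stub stages for demonstration only.
def fake_detect(frame: Frame) -> List[Box]:
    # Pretend any frame tagged "car:" contains exactly one plate
    return [(0, 0, 1, 1)] if frame.startswith("car:") else []


def fake_crop(frame: Frame, boxes: List[Box]) -> List[Crop]:
    # One "crop" per box: the text after the "car:" tag
    return [frame.split(":", 1)[1] for _ in boxes]


def fake_read_text(plate: Crop) -> str:
    return plate


print(run_pipeline(["car:ABC123", "empty", "car:XYZ789"], fake_detect, fake_crop, fake_read_text))
# ['ABC123', 'XYZ789']
```

Passing the stages in as functions keeps each one swappable, which is the same separation the tutorial's sections follow.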

By the end of this tutorial, not only will you have a functioning license plate reader, but you’ll also possess foundational knowledge and techniques that are transferable to a myriad of other computer vision applications. Whether you’re aiming to recognize faces, track objects, or read text from images, the principles and methods showcased here will serve as a valuable cornerstone for your future projects.

To try out this tutorial yourself, follow along in the Jupyter notebook here.

Installation and Setup

- Install the `landingai` Python package, plus `gdown` for downloading files from Google Drive.

- We prepared a video clip with license plates from different cars on a street. Download the video clip to your local drive.

The video file will be downloaded to /tmp/license-plates.mov.

```python
!pip install landingai gdown
!gdown "https://drive.google.com/uc?id=16iwE7mcz9zHqKCw2ilx0QEwSCjDdXEW4" -O /tmp/license-plates.mov
```

Frame Extraction

In this section, we’ll extract frames from the video file. Rather than processing every frame, we sample frames at a fixed rate (here, one frame per second, set by `samples_per_second=1`) and keep only those for further processing. This reduces the computational load, because consecutive frames in a video are nearly identical.
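To make the sampling rate concrete, here is a minimal sketch that computes which frame indices are kept when a video is sampled at a fixed number of frames per second. `sampled_frame_indices` is a hypothetical helper that assumes a constant frame rate; it is not how `VideoFile` is implemented internally.

```python
from typing import List


def sampled_frame_indices(total_frames: int, fps: float, samples_per_second: float) -> List[int]:
    """Indices of the frames kept when sampling a video at a fixed rate.

    Hypothetical helper for illustration; assumes a constant frame rate.
    """
    if fps <= 0 or samples_per_second <= 0:
        raise ValueError("fps and samples_per_second must be positive")
    step = fps / samples_per_second  # frames between consecutive samples
    indices: List[int] = []
    next_sample = 0.0
    for i in range(total_frames):
        if i >= next_sample:
            indices.append(i)
            next_sample += step
    return indices


# A 10-second clip at 30 fps has 300 frames; one sample per second keeps 10.
kept = sampled_frame_indices(total_frames=300, fps=30, samples_per_second=1)
print(len(kept), kept[:3])  # 10 [0, 30, 60]
```

At 30 fps, one sample per second processes 1/30 of the frames, which is where the savings in model calls come from.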

```python
from IPython.display import Video

# Preview the clip in the notebook
Video("https://drive.google.com/uc?export=view&id=16iwE7mcz9zHqKCw2ilx0QEwSCjDdXEW4", width=512)
```

```python
from landingai.pipeline.image_source import VideoFile

# Change this path if you saved the video somewhere else
video_file_path = "/tmp/license-plates.mov"

# Sample one frame per second of video
video_source = VideoFile(video_file_path, samples_per_second=1)
frames = [f.image for f in video_source]

print(f"Extracted {len(frames)} frames from the above video")
```

License Plate Detection

Once we have our frames, the next step is to detect license plates in them. We’ll send each frame to a LandingLens object detection model deployed at a cloud endpoint, which returns bounding boxes around any license plates it finds. We then overlay the predictions on the frames to visualize the detections.

```python
from IPython.display import display
from landingai import visualize
from landingai.predict import Predictor


def detect_license_plates(frames):
    """Run the license plate detector on each frame.

    Returns the raw predictions per frame and the frames with the
    predicted bounding boxes drawn on top.
    """
    bounding_boxes = []
    overlayed_frames = []
    api_key = "land_sk_aMemWbpd41yXnQ0tXvZMh59ISgRuKNRKjJEIUHnkiH32NBJAwf"
    model_endpoint = "e001c156-5de0-43f3-9991-f19699b31202"
    predictor = Predictor(model_endpoint, api_key=api_key)
    for frame in frames:
        prediction = predictor.predict(frame)
        # Draw the predicted boxes over the frame for visualization
        overlay = visualize.overlay_predictions(prediction, frame)
        # Store predictions and overlays, one entry per frame
        bounding_boxes.append(prediction)
        overlayed_frames.append(overlay)
    return bounding_boxes, overlayed_frames


bounding_boxes, overlayed_frames = detect_license_plates(frames)

# Show only the frames in which at least one license plate was detected
for i, frame in enumerate(overlayed_frames):
    if len(bounding_boxes[i]) == 0:
        continue
    display(frame)
```
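Detectors also report a confidence score for each box. If low-confidence boxes cause false positives, one option is to filter them out before cropping. The sketch below uses a hypothetical `Detection` dataclass as a stand-in for the prediction objects: it assumes each prediction has a `score` and an (xmin, ymin, xmax, ymax) box, and the label name `"license_plate"` and the 0.5 threshold are invented for illustration. It is not landingai's API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """Stand-in for a detection result (hypothetical, not landingai's class)."""
    label_name: str
    score: float
    bboxes: Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax) in pixels


def filter_detections(detections: List[Detection], min_score: float = 0.5) -> List[Detection]:
    """Keep detections whose confidence is at least min_score."""
    return [d for d in detections if d.score >= min_score]


def frames_with_plates(per_frame: List[List[Detection]], min_score: float = 0.5) -> List[int]:
    """Return indices of frames that contain at least one confident detection."""
    return [i for i, dets in enumerate(per_frame) if filter_detections(dets, min_score)]


per_frame = [
    [Detection("license_plate", 0.92, (120, 340, 260, 390))],
    [],
    [Detection("license_plate", 0.31, (10, 20, 60, 45))],
]
print(frames_with_plates(per_frame))  # [0]
```

Raising `min_score` trades missed plates for fewer spurious crops; the right value depends on the model and footage.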

Image Cropping

With the detected bounding boxes, we’ll be cropping the original images to isolate the license plates. This is crucial for ensuring the OCR model can read the license plate numbers and letters without unnecessary distractions from the surrounding scene.

```python
from landingai.postprocess import crop

# Crop each detected license plate out of its original (non-overlaid) frame
cropped_imgs = []
for frame, bboxes in zip(frames, bounding_boxes):
    cropped_imgs.append(crop(bboxes, frame))

print(len(cropped_imgs))  # one list of crops per frame

# Display every cropped license plate
for cropped in cropped_imgs:
    for plate in cropped:
        display(plate)
```
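Conceptually, cropping is slicing the pixel array with the box coordinates. Here is a minimal pure-Python sketch, assuming boxes in (xmin, ymin, xmax, ymax) pixel order with exclusive right and bottom edges (the same convention as Pillow's `Image.crop`). `clamp_box` and `crop_grid` are hypothetical helpers operating on a nested-list "image"; they are not the `crop` function from `landingai.postprocess`.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax); xmax/ymax exclusive


def clamp_box(box: Box, width: int, height: int) -> Box:
    """Clip a box to the image bounds so slicing never goes out of range."""
    xmin, ymin, xmax, ymax = box
    return (max(0, xmin), max(0, ymin), min(width, xmax), min(height, ymax))


def crop_grid(image: Sequence[Sequence[int]], box: Box) -> List[List[int]]:
    """Crop a row-major 2D pixel grid to the given box."""
    height = len(image)
    width = len(image[0]) if height else 0
    xmin, ymin, xmax, ymax = clamp_box(box, width, height)
    # Rows are indexed by y, columns by x.
    return [list(row[xmin:xmax]) for row in image[ymin:ymax]]


# 4x5 toy "image" where each pixel value encodes its position as 10 * row + col.
image = [[10 * r + c for c in range(5)] for r in range(4)]
print(crop_grid(image, (1, 1, 3, 3)))  # [[11, 12], [21, 22]]
print(crop_grid(image, (3, 2, 9, 9)))  # box runs past the edge: [[23, 24], [33, 34]]
```

Clamping matters because a detector can return boxes that extend slightly beyond the frame when a plate sits at the image border.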

OCR and Data Retrieval

In this section, we’ll pass the cropped license plate images through an optical character recognition (OCR) model. The job of the OCR model is to convert the image of the license plate into a string of text, allowing us to retrieve the license plate number.

```python
from landingai import visualize
from landingai.predict import OcrPredictor

# NOTE: The API key below has a rate limit. Use an API key from your own LandingLens account for production use.
API_KEY = "land_sk_WVYwP00xA3iXely2vuar6YUDZ3MJT9yLX6oW5noUkwICzYLiDV"
ocr_predictor = OcrPredictor(api_key=API_KEY)

ocr_preds = []
overlayed_ocr = []
# cropped_imgs holds one list of plate crops per frame
for plates in cropped_imgs:
    for plate in plates:
        ocr_pred = ocr_predictor.predict(plate)
        ocr_preds.append(ocr_pred)
        # Draw the recognized text regions over the plate crop
        overlay = visualize.overlay_predictions(ocr_pred, plate)
        overlayed_ocr.append(overlay)

print(ocr_preds)

# Show each plate with its recognized text, skipping plates where OCR found nothing
for overlay, ocr_pred in zip(overlayed_ocr, ocr_preds):
    if len(ocr_pred) == 0:
        continue
    display(overlay)
    for text in ocr_pred:
        print(text.text)
```
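Because the video is sampled repeatedly, the same plate may be read several times, and individual readings can differ slightly (for example, a B misread as an 8). One possible post-processing step, not part of this tutorial, is to normalize each reading and take a majority vote. The sketch below uses hypothetical helpers `normalize_plate` and `consensus_plate`, takes plain strings such as the `text.text` values printed above, and assumes all readings come from the same vehicle (it does not check this). The normalization keeps only ASCII letters and digits, which may not suit every plate format.

```python
import re
from collections import Counter
from typing import Iterable, Optional


def normalize_plate(text: str) -> str:
    """Uppercase and drop everything except ASCII letters and digits."""
    return re.sub(r"[^A-Z0-9]", "", text.upper())


def consensus_plate(readings: Iterable[str]) -> Optional[str]:
    """Return the most common normalized reading, or None if there are none."""
    normalized = (normalize_plate(r) for r in readings)
    counts = Counter(p for p in normalized if p)  # ignore empty readings
    if not counts:
        return None
    return counts.most_common(1)[0][0]


readings = ["abc-1234", "ABC 1234", "A8C1234", "  "]
print(consensus_plate(readings))  # ABC1234
```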

Conclusion

A license plate reader like this one can support parking lot management, toll collection, or monitoring customer inflow in commercial areas. By applying an OCR deep learning model to video, organizations can improve operational efficiency, strengthen security, and deliver tangible value across multiple domains.

You can start building your own computer vision models for free on app.landing.ai and explore our GitHub repository for example projects.