Introduction

The first step in collecting training data for machine vision is to design your image acquisition system. A poorly designed imaging solution can degrade the images you acquire for both training and inference. On the other hand, a well-designed image acquisition system will boost your model’s accuracy and sensitivity. In this article, we explain how to design an image acquisition system, using machine vision examples.

We will cover the following key factors for creating quality images needed for training your model.

- Lighting

- Background

- Field of View

- Exposure

- Depth of Field

- Color vs black and white

After collecting a set of images, you may find that some are not optimal. Instead of discarding them and starting over, you may be able to post-process them and use the edited images for training. The result of completing this step is a set of quality images for training your system.

Collecting Sample Data

As a first step, we want to capture many samples that show the target object clearly in the image. Then we evaluate the images for how clearly they show the target object. This shortens the processing time for iterating through the data set. We also want to build a lab environment to quickly test the different factors that affect image quality.
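One way to make "how clearly" measurable is a sharpness score. The sketch below is an assumption on our part, not the method used in the project: it scores a grayscale image by the variance of a simple 4-neighbour Laplacian, a common rough proxy for edge detail. The function name and the tiny sample crops are hypothetical.

```python
from statistics import pvariance

def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over a grayscale image.

    `gray` is a list of rows of pixel intensities. Higher values suggest
    more edge detail, which is only a rough proxy for sharpness.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    # Skip the border so every pixel has four neighbours.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    return pvariance(responses)

# Hypothetical 5x5 crops: a hard edge versus the same edge smeared out.
sharp = [[0, 0, 255, 255, 255]] * 5
blurry = [[0, 64, 128, 192, 255]] * 5

print(laplacian_variance(sharp) > laplacian_variance(blurry))  # True
```

A score like this is only meaningful when comparing images of the same scene, so it suits ranking captures from one setup rather than setting an absolute threshold.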

One of the imaging solutions we designed was for detecting leaks in air compressors. The compressors were pressurized and submerged in a tank of water, so that any leak produced bubbles. In this article we’ll use this example to discuss the various aspects that affect the imaging solution.

Let’s start by discussing the factors that affect the quality of your images.

Environmental Factors Affecting Image Quality

The camera settings that control exposure are covered later in this article. In this section we’ll discuss factors that are outside of the camera. These are the environmental factors.

Lighting Equipment

Lighting is critical for successful imaging. If after adjusting the three exposure settings (shutter speed, aperture, and sensor sensitivity) you still do not have enough available light, then you have to look to the lighting equipment. Proper lighting is specific to the nature of the image analysis and depends on the environment and the object(s) being captured. Lighting can highlight desired aspects or diminish unwanted ones. You will need to experiment to find the optimal lighting for your needs.

There are a number of different lighting options, such as direct, backlight, or diffused light.

- Direct light is used to reveal the surface and create sharp shadows. Depending on the angle at which the light strikes the surface, known as the angle of incidence, and on the camera’s position, direct light can be used to enhance edges and texture.

- Backlight can highlight edges and separate an object from the background. Taken to the extreme, backlight can create a silhouette to reveal the object’s outline with no detail of its surface.

- Diffused light, or scattered light, reduces shadows. This would be effective for removing unwanted shadow textures that can interfere with capturing details on the object’s surface.

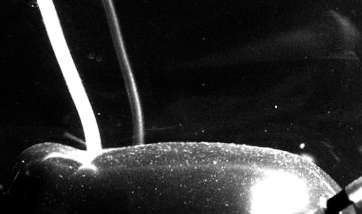

A dedicated article on lighting is needed to cover this topic adequately. Here we’ll cover just one example of how lighting affects image quality. In our leak detection example, proper lighting highlights the bubbles and helps separate them from the background. Below is a comparison of images under different lighting conditions.

Low-level light

Mid-level light

High-level light

Background

Inside a photography studio, you typically see subjects in front of a pure white or black background. This hides distractions and separates the subject. The same principle applies to machine-based visual inspection. We want a distraction-free background. Then human workers, as well as the AI models, can give their attention to the important areas and are therefore more likely to catch defects.

In our air compressor example the back of the inspection tank was a glass wall. That allowed inspectors to see the back of the compressor, but this also created a problem.

The glass wall reflected anything in front of the tank, such as lights, people, and other bright objects in the room. These unwanted objects in the image distracted the model. In the image below you can see the reflection of two tubes.

Reflective background

A simple fix was to add a black background. This prevented the reflections. It also increased contrast, which helped to highlight the bubbles.

Look at the image below. You can easily find the small bubbles when using a black background. Now look back at the image above and notice how much harder it is to spot the bubbles.

Black background
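The contrast gain from a darker background can be put into numbers. The sketch below uses Michelson contrast, which is our choice of metric and not something the project prescribes. The intensity values are made-up 8-bit means for illustration, not measurements from the images above.

```python
def michelson_contrast(target, background):
    """Contrast between two mean intensities, in the range 0..1."""
    brightest = max(target, background)
    darkest = min(target, background)
    if brightest + darkest == 0:
        return 0.0  # both regions are pure black
    return (brightest - darkest) / (brightest + darkest)

# Hypothetical mean 8-bit intensities.
bubble = 200
reflective_glass = 150
black_backdrop = 20

print(round(michelson_contrast(bubble, reflective_glass), 2))  # 0.14
print(round(michelson_contrast(bubble, black_backdrop), 2))    # 0.82
```

With these assumed values the bubbles stand out several times more strongly against the black backdrop, which matches what the two images show visually.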

Field of View

The lens focal length and image sensor size determine the physical area of the subject that can be captured, in other words, how wide a shot can be obtained. Wider lenses (shorter focal lengths) and larger sensors capture a larger field of view. Increasing the distance from the subject to the camera also increases your field of view.

The resolution on the subject is determined by the number of pixels on the camera sensor and the field of view: the same sensor spread over a larger field of view gives fewer pixels per unit of subject area. Higher resolution lets the model see finer detail on the subject, which can improve its accuracy.

In our example we need to capture an image of sufficient resolution that includes the entire air compressor and some distance above it for the rising bubbles.
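As a rough planning aid, the relationship between sensor size, focal length, distance, and resolution can be sketched with the thin-lens approximation, which holds when the working distance is much larger than the focal length. The sensor, lens, and distance values below are hypothetical and are not the hardware used in the compressor project.

```python
def field_of_view_mm(sensor_mm, focal_length_mm, distance_mm):
    """Approximate field of view along one sensor dimension.

    Thin-lens approximation, assuming distance_mm >> focal_length_mm.
    """
    return sensor_mm * distance_mm / focal_length_mm

def mm_per_pixel(fov_mm, pixels):
    """Subject size covered by one pixel along the same dimension."""
    return fov_mm / pixels

# Hypothetical setup: 6 mm wide sensor, 12 mm lens, 2000 pixels across,
# camera placed 1 m from the tank.
fov = field_of_view_mm(sensor_mm=6.0, focal_length_mm=12.0, distance_mm=1000.0)
print(fov)                      # 500.0 (mm)
print(mm_per_pixel(fov, 2000))  # 0.25 (mm per pixel)
```

Under these assumptions a 1 mm bubble would span about four pixels. Moving the camera back to 2 m doubles the field of view but halves the number of pixels on each bubble, which is the trade-off to check against the smallest feature the model must detect.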

Control Exposure With Camera Settings

The amount of light captured by a camera’s sensor is referred to as the exposure. Just three factors determine the exposure, and they can be traded against each other: if one has to be reduced, we can compensate by increasing another. The three main camera settings controlling exposure are:

- Shutter speed – adjusts the amount of time the sensor gathers light

- Aperture – adjusts the amount of light that passes through the lens

- Sensor sensitivity – adjusts the sensor’s sensitivity to light, measured as ISO

Each factor also affects different qualities of image capture. This is another dimension of the art of photography. Unlike art, machine vision isn’t about creativity. The goal is to obtain the best quality image. Here are the trade-offs to consider when adjusting the exposure.

- Shutter speed – faster speeds are needed to freeze motion but reduce the amount of light reaching the sensor

- Aperture – wider settings increase the amount of light on the sensor but reduce the depth of field

- Sensor sensitivity – higher levels of sensitivity will increase noise

If you need a faster shutter speed (a shorter exposure time), then to compensate for the lost light you need to increase one or both of the other factors. You have to find the right balance of all three for the best image.
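The balancing act can be sketched numerically. The snippet below assumes the standard photographic relation that exposure scales with exposure time and ISO and inversely with the square of the f-number, and it measures changes in stops (factors of two). The function names and settings are illustrative only.

```python
import math

def relative_exposure(shutter_s, f_number, iso):
    """Relative image brightness for a fixed scene (arbitrary units)."""
    return shutter_s * iso / f_number ** 2

def stops_between(reference, other):
    """Brightness change in stops; negative means darker than reference."""
    return math.log2(other / reference)

base = relative_exposure(shutter_s=1 / 60, f_number=8, iso=100)

# A 4x faster shutter to freeze motion costs about two stops of light.
fast = relative_exposure(shutter_s=1 / 240, f_number=8, iso=100)

# Opening the aperture from f/8 to f/4 gives roughly two stops back.
compensated = relative_exposure(shutter_s=1 / 240, f_number=4, iso=100)

print(stops_between(base, fast))
print(stops_between(base, compensated))
```

Raising the ISO from 100 to 400 instead of opening the aperture would recover the same two stops, at the cost of more noise rather than less depth of field.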

Shutter Speed

With low levels of light, we can extend the exposure time, that is, use a slower shutter speed. This comes with a tradeoff. You may have seen photos of fast-moving objects, such as a race car, taken with a long exposure time. The object looks smeared in the image. This effect is called motion blur. While this might look exciting for photos of cars during a race, it’s bad for machine vision.

The following examples show a pinwheel spinning at the same speed in every shot. The exposure time ranges from long to short. The amount of light coming into the camera is kept the same by adjusting the aperture and the camera’s sensitivity. As you can see, the shorter exposure time appears to freeze the motion, producing a sharp image.

Different exposure times of object in motion
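To choose an exposure time, it helps to estimate how many pixels the subject moves while the shutter is open. The sketch below assumes motion parallel to the image plane at constant speed. The bubble speed and pixel scale are hypothetical placeholders, not measured values.

```python
def motion_blur_px(speed_mm_s, exposure_s, mm_per_px):
    """Distance the subject travels during the exposure, in pixels."""
    return speed_mm_s * exposure_s / mm_per_px

def max_exposure_s(speed_mm_s, mm_per_px, max_blur_px=1.0):
    """Longest exposure that keeps blur within max_blur_px pixels."""
    return max_blur_px * mm_per_px / speed_mm_s

# Hypothetical values: bubbles rising at 250 mm/s, 0.25 mm per pixel.
speed = 250.0
scale = 0.25

print(motion_blur_px(speed, 1 / 50, scale))    # about 20 pixels of smear
print(motion_blur_px(speed, 1 / 1000, scale))  # about 1 pixel
print(max_exposure_s(speed, scale))            # about 0.001 s
```

Under these assumptions, an exposure around 1/1000 s would be needed to keep the blur near one pixel, and the light lost has to be recovered through aperture, sensitivity, or stronger lighting.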

Aperture

The lens’s aperture controls the depth of field, or DOF. This is the distance from the nearest to the furthest object that is sharp in the photo. Objects outside of the DOF will be less sharp. Increasing the DOF reduces the amount of available light reaching the sensor.

The ideal setting is one that has all the target objects within the DOF, but no more. This way we can optimize the amount of light in the exposure. We increase the DOF by reducing the aperture size. This lowers the amount of light in the exposure. To compensate for this we can increase the sensitivity at the sensor, the ISO setting.

In our example of detecting rising air bubbles, increasing the DOF makes our model more accurate at detecting any bubbles rising from the front or back of the compressor.
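To check whether the whole target fits inside the DOF, the near and far limits can be estimated with the standard thin-lens hyperfocal formulas. This is a sketch under assumptions: the circle of confusion, focal length, and focus distance below are hypothetical, and real lenses deviate from the thin-lens model.

```python
import math

def depth_of_field_mm(focal_mm, f_number, focus_mm, coc_mm):
    """Return (near, far) limits of acceptable sharpness in mm.

    coc_mm is the circle of confusion, the largest blur spot that
    still counts as sharp for the application.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is sharp
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far

# Hypothetical setup: 12 mm lens focused at 1 m, 0.005 mm circle of confusion.
wide_open = depth_of_field_mm(12.0, 2.0, 1000.0, 0.005)
stopped_down = depth_of_field_mm(12.0, 8.0, 1000.0, 0.005)

print(wide_open)     # narrow band around the focus distance
print(stopped_down)  # much deeper band at f/8
```

If the compressor spans, say, 300 mm front to back, these numbers suggest which f-number is the widest aperture that still covers it, which leaves the most light for the exposure.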

Sensor Sensitivity

The sensitivity to light in a camera can be adjusted. This is the ISO value. Higher values mean higher sensitivity, but higher sensitivity comes at a cost: it introduces digital noise, which shows up as unwanted artifacts in the image. You adjust the ISO in relation to the shutter speed and aperture settings. Just be careful that noise in the images does not affect your model.

Color or Monochrome

There is one more camera setting that might be a consideration. Monochrome, or black and white, images have the advantage of being more efficient at capturing lower levels of light. If color is part of your image analysis, such as spotting a red object, then obviously you have no choice but to capture in color.

In low light, color images can show color artifacts from digital noise, and chromatic aberration can add color fringing. Using monochrome images we get increased brightness with less noise.

Reusing Images for Training

If we change camera settings or the environment after training our models, we need to retrain. However, don’t throw away the old data! You may be able to reuse those images. For example, if the originals were in color and you’re switching to monochrome, convert the originals to grayscale. There are many software applications for photographers that will make this conversion easy and perform it on a folder of images in one batch.
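For readers who prefer to script the conversion, the core of it is a weighted sum of the color channels. The sketch below uses the widely used ITU-R BT.601 luma weights on a plain nested list of RGB tuples. That is an assumption made for illustration: a real pipeline would more likely call an image library, and a given tool may use different weights.

```python
def rgb_to_gray(pixel):
    """Convert one (r, g, b) pixel to an 8-bit gray level (BT.601 luma)."""
    r, g, b = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def image_to_gray(rgb_image):
    """Convert an image given as rows of (r, g, b) tuples."""
    return [[rgb_to_gray(pixel) for pixel in row] for row in rgb_image]

# Tiny hypothetical 2x2 color image.
color = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

print(image_to_gray(color))  # [[76, 150], [29, 255]]
```

Whatever tool is used, it is worth applying the same conversion to every old image so that the converted set is consistent.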

Once the old images are converted, they can be reused in model training, probably with lower weights so that they contribute less during backpropagation. Because this old data was generated under various production settings, it works well as a form of data augmentation and makes the resulting model more robust.
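One possible way to apply lower weights is to scale each sample’s loss before averaging. The sketch below is framework-independent and purely illustrative: the 0.5 weight for older images and the loss values are hypothetical, and the right weight would have to be found by experiment.

```python
def weighted_mean_loss(losses, weights):
    """Average per-sample losses, letting some samples count for less."""
    total = sum(loss * weight for loss, weight in zip(losses, weights))
    return total / sum(weights)

# Hypothetical per-sample losses: two new images, then two converted old ones.
losses = [0.2, 0.4, 1.0, 0.8]
is_old = [False, False, True, True]

# Assumed weighting: old images count half as much as new ones.
weights = [0.5 if old else 1.0 for old in is_old]

print(weighted_mean_loss(losses, weights))             # about 0.5
print(weighted_mean_loss(losses, [1.0] * len(losses)))  # about 0.6 unweighted
```

Most training frameworks accept per-sample weights in some form, so the same idea usually carries over without a custom training loop.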

Build a Test Environment

In addition to the background and lighting, there are other factors that could affect visual inspection tasks. In our example this would include water condition and air quality. Experimenting with those factors in a real production environment may be time-consuming and may not be feasible.

We recommend that you set up a lab that mimics the production environment and run controlled experiments. Use both qualitative and quantitative evaluations. Then, once you find a better environment setting, generalize it and apply it to the production environment. This method will help you iterate quickly without disturbing the production environment.

Conclusion

In this article, we discussed how to design image acquisition systems and the key factors that will affect machine vision. We explained the basic controls that determine exposure and how to improve the physical environment.

You may wonder why so much effort should be spent setting up an imaging solution. Through our experience, we have found that an optimal camera configuration has a critical impact on the project’s overall success.

We’ve encountered scenarios where, in the middle of model iteration, we found our model’s performance negatively impacted by the quality of the input images. This meant going back and readjusting the cameras and/or the environment. Every time we make such a change, the data distribution changes. This in turn forces us to collect new data and retrain the model. This process is costly.

Therefore, we strongly recommend that you spend more effort at the beginning of the AI project. Tune the camera and environment through multiple experiments. Better images mean there’s less chance of having to start over.

Key Takeaways

- The quality of the images used for model training is controlled by lighting, background, and camera settings.

- Experiment to determine the best image acquisition solution.

- You may be able to reuse existing images with post-processing.