Surfer Counter

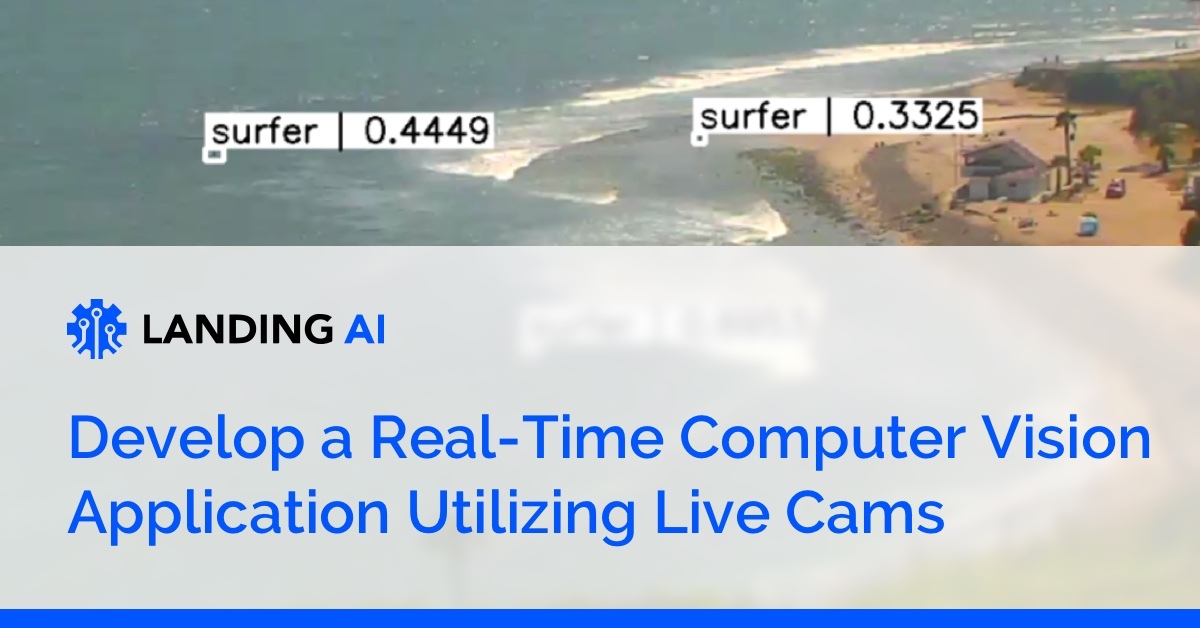

This tutorial shows you how to use LandingLens to build a machine learning model that counts the surfers at a surf spot from a live-stream surf cam. If you’re a surfer, this tells you how crowded a particular spot is so you can avoid the crowd! We’ll use the landingai-python library to run a deployed model on the live stream and get real-time updates.

Collecting Data

First, let’s collect some data. For this we’ll use the Topanga Surf Cam.

We want to download frames from the video stream so we can label them for training a model. This is easy to do with the landingai-python library! Just pass it the URL of the video stream’s playlist file and save the frames it returns.
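As a rough, library-independent sketch of the sampling step: a live stream produces far more frames than we need to label, so it helps to keep only every Nth frame and stop after a fixed number. The function names `sample_frames` and `frame_filename`, the `topanga` prefix, and the fake stream are all hypothetical stand-ins; `source` can be any iterable of frames, and this is not the landingai-python API itself.

```python
from itertools import islice
from typing import Iterable, Iterator, Tuple, TypeVar

T = TypeVar("T")


def sample_frames(
    source: Iterable[T], every_n: int = 30, max_frames: int = 100
) -> Iterator[Tuple[int, T]]:
    """Yield (stream_index, frame) for every `every_n`-th frame, at most `max_frames` times."""
    if every_n < 1:
        raise ValueError("every_n must be at least 1")
    # Keep frames 0, every_n, 2 * every_n, ... without buffering the stream.
    picked = islice(source, 0, None, every_n)
    for i, frame in enumerate(islice(picked, max_frames)):
        yield i * every_n, frame


def frame_filename(index: int, prefix: str = "topanga") -> str:
    """Build a zero-padded file name so saved frames sort in capture order."""
    return f"{prefix}_{index:06d}.png"


# Stand-in for a live stream: a generator of 1000 placeholder "frames".
fake_stream = (f"frame-{i}" for i in range(1000))
sampled = list(sample_frames(fake_stream, every_n=100, max_frames=5))
filenames = [frame_filename(index) for index, _ in sampled]
```

Spacing the samples out also gives more variety in the images (different sets, different crowds) than grabbing consecutive frames would.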

And that’s it! If you are interested, you can just as easily save a video file instead of individual frames directly from your FrameSet.

Labeling and Training

The camera cycles through several zoom levels. Surfers are harder to see in the more zoomed-out images, so make sure to capture images from several zoom levels.

The surfers are fairly small in the image. If you cannot tell whether something is a surfer, don’t label it for now, to avoid confusing the model. Later on we will average the surfer count from many predictions to get a more accurate estimate.

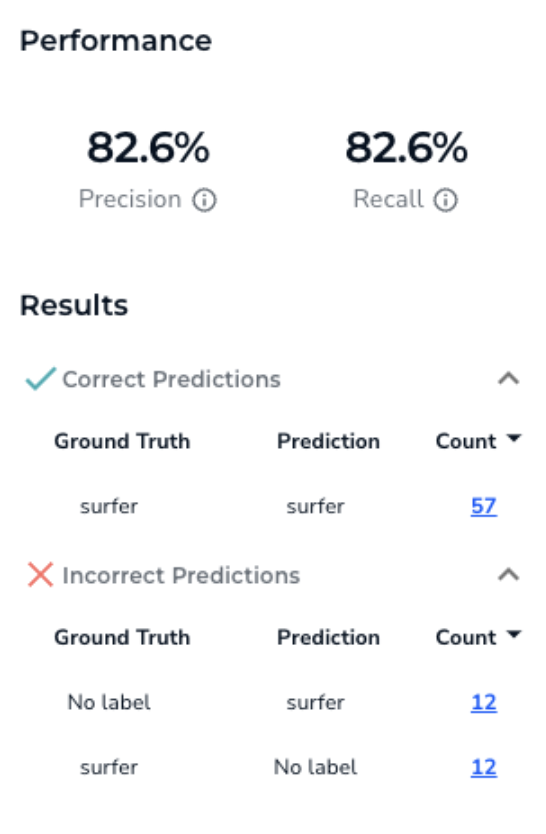

Once you’ve labeled about 10 images, hit the train button! On our first run you can see we don’t get great performance:

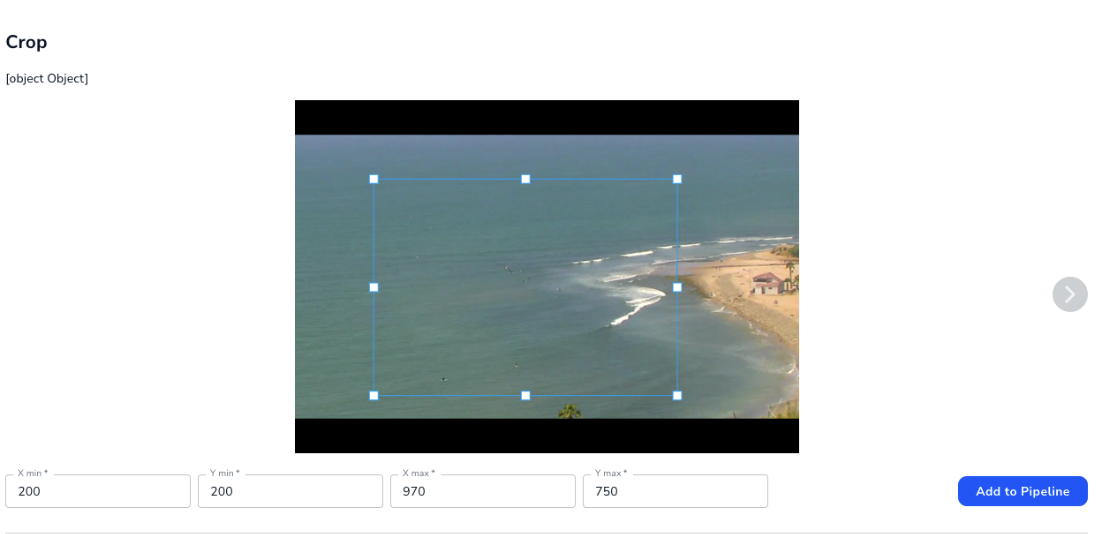

You can use the advanced training option to further improve the performance. You can find it by clicking on the down arrow right next to Train. First, we know the image is fairly large, and most of it is not useful for predicting surfers. So let’s add a crop that keeps only the area we want to look at:

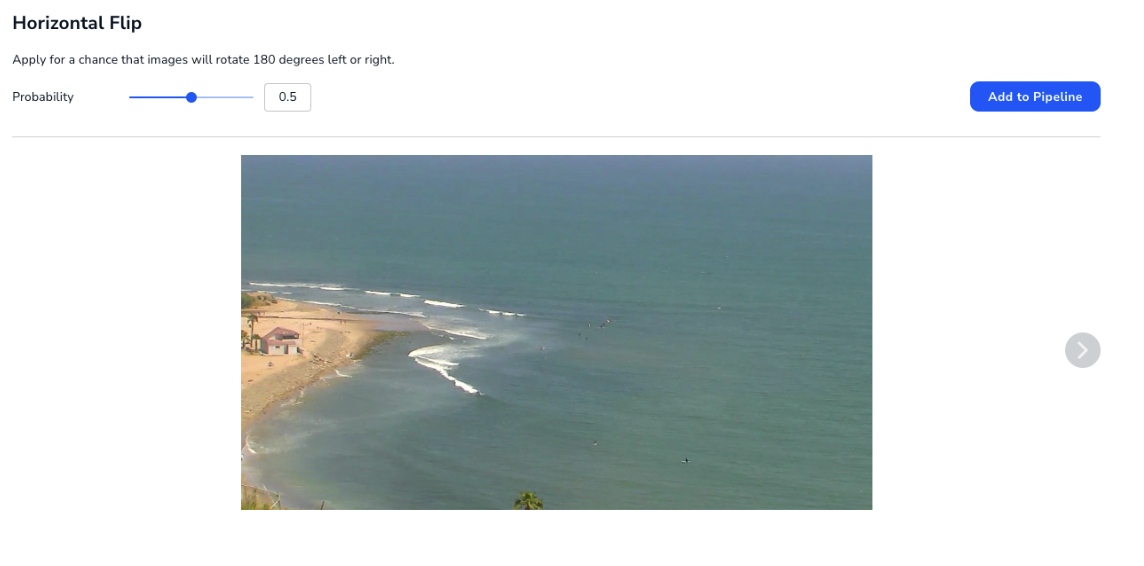
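To make the effect of the crop concrete, here is a minimal sketch of what cropping does to a label: the box is clipped to the crop window and shifted into the cropped image’s coordinates. LandingLens handles this for you in the UI; the `crop_box` helper, the `(xmin, ymin, xmax, ymax)` box layout, and the pixel values are assumptions made for illustration.

```python
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax) in pixels


def crop_box(box: Box, crop: Box) -> Optional[Box]:
    """Clip `box` to the `crop` window and express it in cropped-image coordinates.

    Returns None when the box lies entirely outside the crop.
    """
    cx0, cy0, cx1, cy1 = crop
    x0, y0 = max(box[0], cx0), max(box[1], cy0)
    x1, y1 = min(box[2], cx1), min(box[3], cy1)
    if x0 >= x1 or y0 >= y1:
        return None
    # Shift so the crop's top-left corner becomes the new origin.
    return (x0 - cx0, y0 - cy0, x1 - cx0, y1 - cy0)


# Hypothetical 1920x1080 frame where only the band of water matters.
water_band: Box = (0, 400, 1920, 800)
surfer_in_water = crop_box((100, 450, 120, 480), water_band)
label_in_sky = crop_box((50, 100, 70, 130), water_band)
```

Here the surfer box keeps its size but moves up by 400 pixels, while the box in the sky falls outside the crop and is dropped.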

Then let’s add some augmentations. Horizontal flip because surfers can be facing left or right (probably not vertical flip because the surfers would not be upside down):

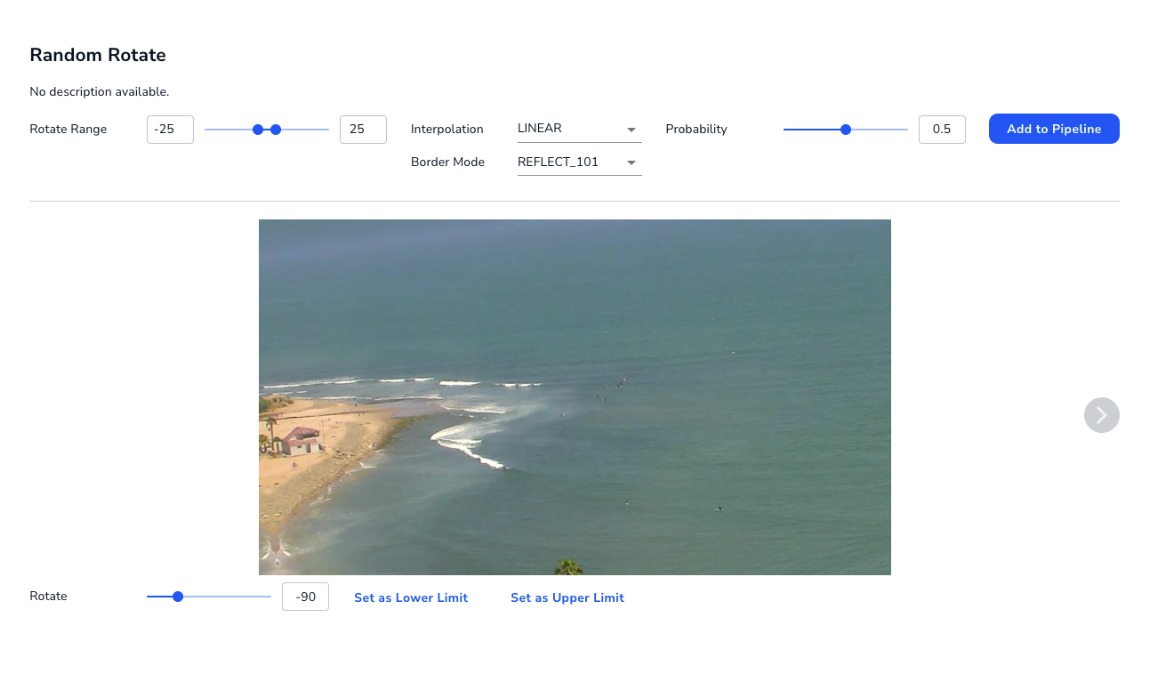
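Conceptually, a horizontal flip mirrors both the pixels and the labels. The sketch below shows the idea on a tiny image stored as nested lists; the helper names and the `(xmin, ymin, xmax, ymax)` box layout are assumptions for illustration, not how LandingLens implements the augmentation.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax), xmax exclusive


def hflip_image(image: List[List[int]]) -> List[List[int]]:
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def hflip_box(box: Box, width: int) -> Box:
    """Mirror a bounding box inside an image that is `width` pixels wide."""
    xmin, ymin, xmax, ymax = box
    # The old right edge becomes the new left edge, measured from the other side.
    return (width - xmax, ymin, width - xmin, ymax)


image = [[1, 2, 3],
         [4, 5, 6]]
flipped = hflip_image(image)
flipped_box = hflip_box((0, 0, 1, 2), width=3)
```

A surfer labeled in the leftmost column ends up labeled in the rightmost column after the flip, and flipping twice returns the original image and box.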

A slight amount of rotation:

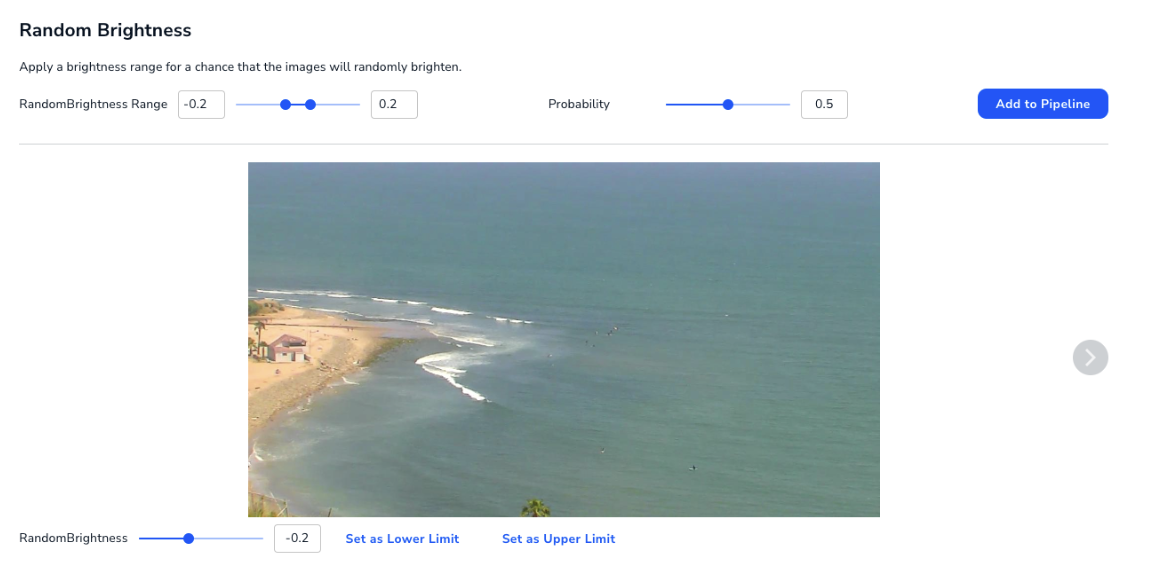
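For intuition about why only a slight rotation is used, the following sketch rotates a box’s corners about the image center and takes the axis-aligned box around them. The helper names are hypothetical and this is only one common way to handle labels under rotation, not necessarily what LandingLens does internally.

```python
import math
from typing import Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def rotate_point(x: float, y: float, center: Point, degrees: float) -> Point:
    """Rotate (x, y) about `center`. With y pointing down, as in images,
    a positive angle appears clockwise on screen."""
    t = math.radians(degrees)
    dx, dy = x - center[0], y - center[1]
    return (
        center[0] + dx * math.cos(t) - dy * math.sin(t),
        center[1] + dx * math.sin(t) + dy * math.cos(t),
    )


def rotate_box(box: Box, center: Point, degrees: float) -> Box:
    """Rotate the four corners and return the axis-aligned box enclosing them."""
    xmin, ymin, xmax, ymax = box
    corners = [(xmin, ymin), (xmax, ymin), (xmax, ymax), (xmin, ymax)]
    rotated = [rotate_point(x, y, center, degrees) for x, y in corners]
    xs = [p[0] for p in rotated]
    ys = [p[1] for p in rotated]
    return (min(xs), min(ys), max(xs), max(ys))


unit_box: Box = (-1.0, -1.0, 1.0, 1.0)
slightly_rotated = rotate_box(unit_box, (0.0, 0.0), 5.0)
strongly_rotated = rotate_box(unit_box, (0.0, 0.0), 45.0)
```

The enclosing box grows with the angle, so large rotations make labels looser around small objects like surfers, while a few degrees barely changes them.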

And some random brightness to simulate different lighting conditions:
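As an illustration of the idea, random brightness can be thought of as multiplying every pixel by a factor drawn from a small range around 1 and clipping to the valid range. The function names, the 8-bit grayscale nested-list image, and the `max_delta` parameter are assumptions for this sketch; the LandingLens setting may be defined differently.

```python
import random
from typing import List, Optional


def adjust_brightness(image: List[List[int]], factor: float) -> List[List[int]]:
    """Scale 8-bit pixel values by `factor`, clipping to the range 0..255."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def random_brightness(
    image: List[List[int]], max_delta: float = 0.2, rng: Optional[random.Random] = None
) -> List[List[int]]:
    """Brighten or darken by a random factor in [1 - max_delta, 1 + max_delta]."""
    rng = rng or random.Random()
    factor = 1.0 + rng.uniform(-max_delta, max_delta)
    return adjust_brightness(image, factor)


image = [[100, 200]]
brighter = adjust_brightness(image, 1.5)  # 200 * 1.5 is clipped to 255
darker = adjust_brightness(image, 0.5)
augmented = random_brightness(image, max_delta=0.2, rng=random.Random(0))
```

Passing a seeded `random.Random` makes the augmentation repeatable, which is handy when comparing training runs.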

This improves the results significantly:

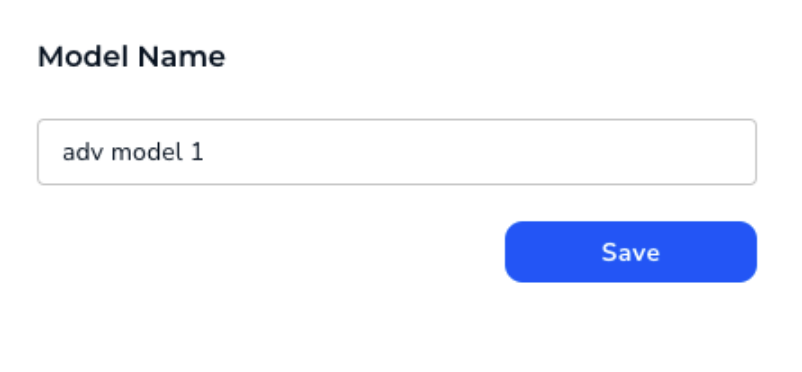

We can hit save and call the model “adv model 1”.

Deployment and Inference

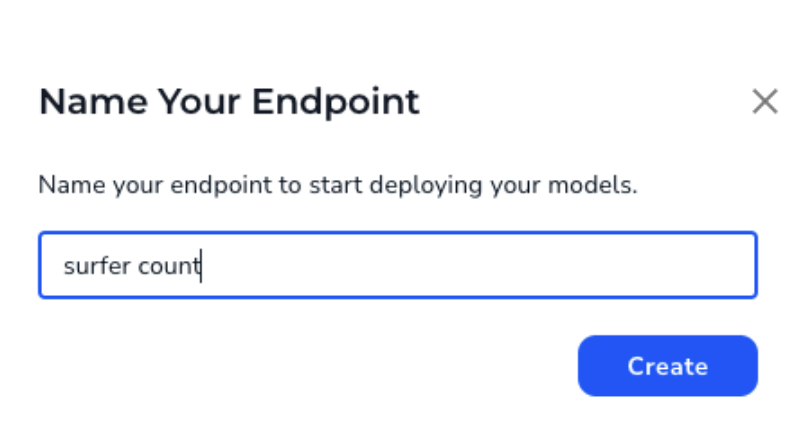

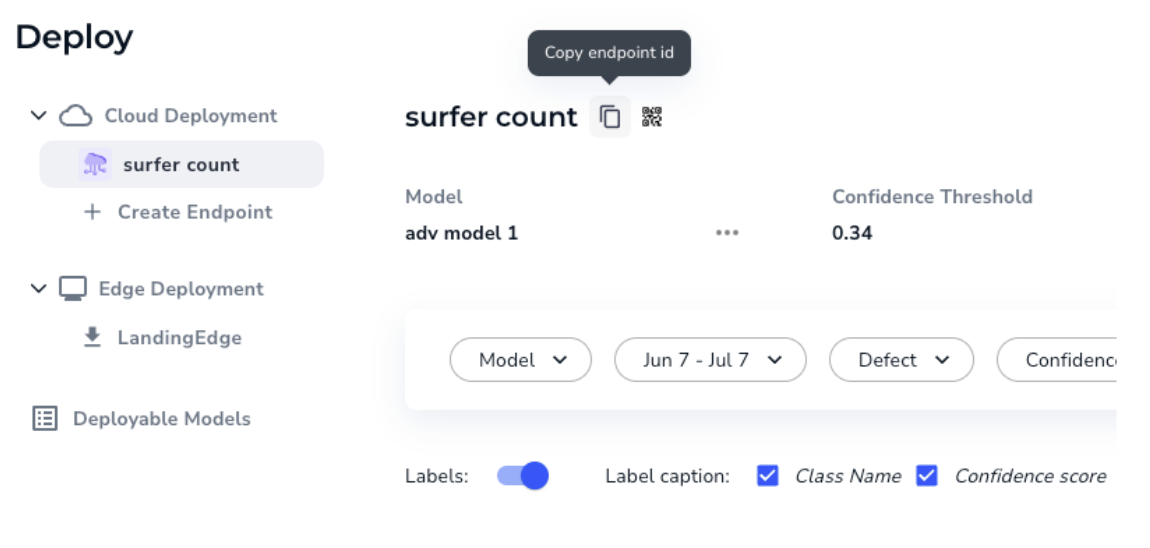

Now let’s deploy the model and test it out. Hit the deploy button to deploy to the cloud and create a new endpoint, which we’ll call “surfer count”.

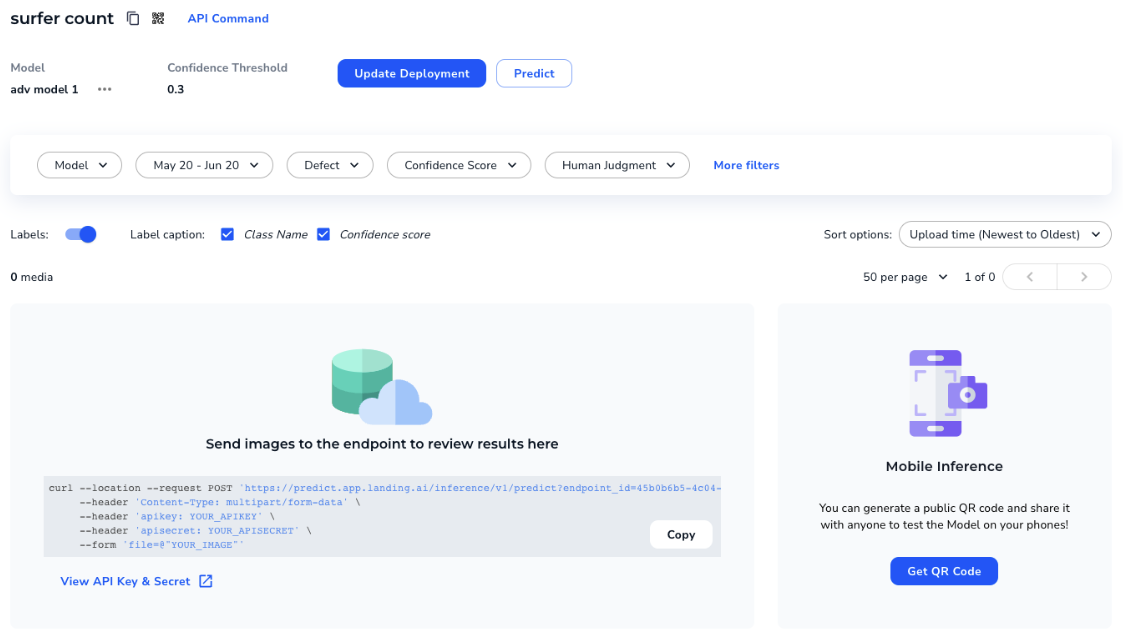

And then hit deploy once more to deploy “adv model 1” on the surfer count endpoint. You should see a page like this:

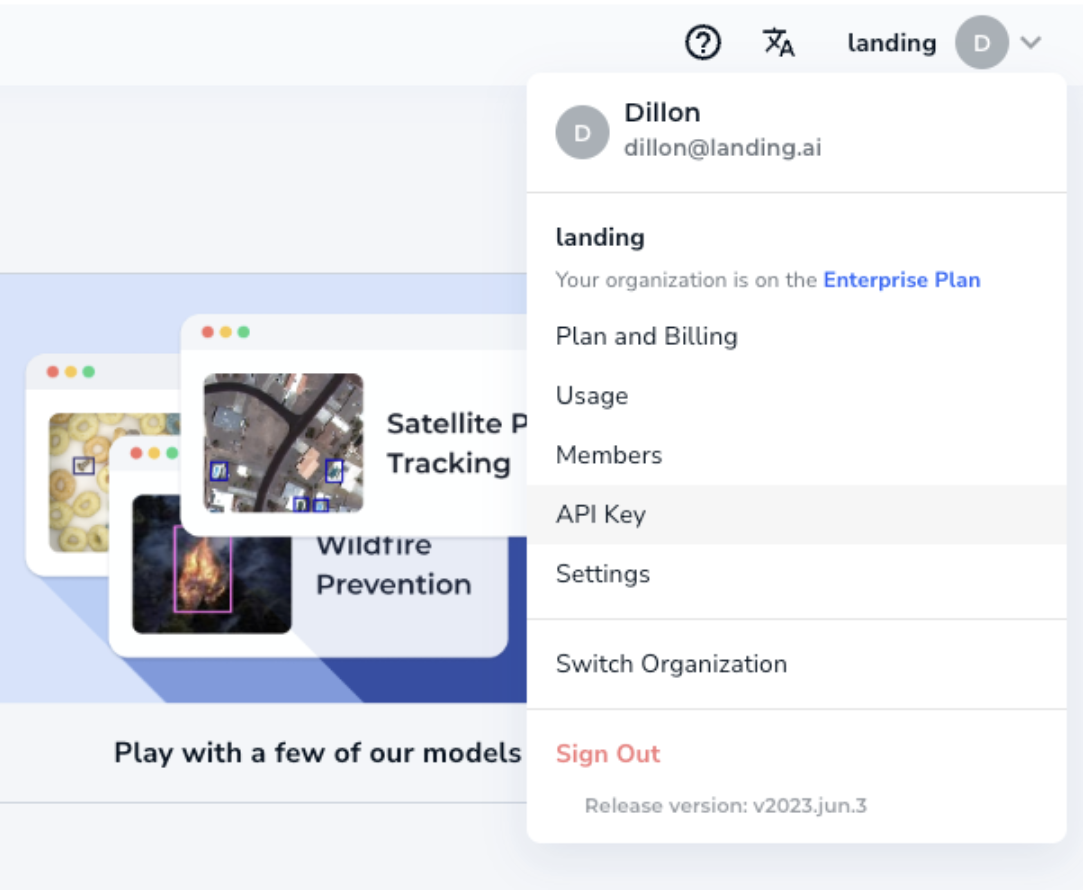

Make sure you have your API key, which you can find in the drop-down menu on the top right, or by following these instructions.

You can find your endpoint ID by going to the Deploy page and clicking on the copy icon.
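One way to keep the API key and endpoint ID out of your script is to read them from environment variables and fail early if either is missing. This is a minimal sketch: the variable names `LANDINGAI_API_KEY` and `SURFER_ENDPOINT_ID` and the `load_credentials` helper are hypothetical choices for this example.

```python
import os
from typing import Mapping, Tuple

# Hypothetical environment variable names chosen for this example.
API_KEY_VAR = "LANDINGAI_API_KEY"
ENDPOINT_ID_VAR = "SURFER_ENDPOINT_ID"


def load_credentials(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (api_key, endpoint_id), raising a clear error if either is unset."""
    api_key = env.get(API_KEY_VAR, "").strip()
    endpoint_id = env.get(ENDPOINT_ID_VAR, "").strip()
    missing = [
        name
        for name, value in ((API_KEY_VAR, api_key), (ENDPOINT_ID_VAR, endpoint_id))
        if not value
    ]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return api_key, endpoint_id


# Example with an explicit mapping instead of the real environment.
example_env = {API_KEY_VAR: "my-api-key", ENDPOINT_ID_VAR: "my-endpoint-id"}
api_key, endpoint_id = load_credentials(example_env)
```

In a real script you would call `load_credentials()` with no arguments after exporting both variables in your shell.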

With the API key and endpoint ID in hand, you can run the deployed model on the stream, then save the resulting video and view it.

If you zoom in, you can see the model actually misses the group of surfers. But that’s okay! It’s very typical to get poor performance on your first deployment. All you need to do is upload more data and label it to improve the model. You’ll likely have to repeat this as conditions change, for example when the wind picks up and there are more whitecaps, or when the sun is at a different angle and changes the reflection on the water. After a few more training runs you can get better performance.

Putting It All Together

To get the final count of surfers we can call the get_class_counts method and average over the number of frames:

And we get 4.27!
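The averaging itself is simple arithmetic, sketched here without calling the library: count the predicted labels in each frame, sum the counts per class, and divide by the number of frames. The `average_class_counts` helper and the per-frame label lists are made up for illustration and are not the data behind the number above.

```python
from collections import Counter
from typing import Dict, List, Sequence


def average_class_counts(per_frame_labels: Sequence[Sequence[str]]) -> Dict[str, float]:
    """Average the number of predictions per class over all frames.

    Frames with no predictions still count toward the number of frames.
    """
    num_frames = len(per_frame_labels)
    if num_frames == 0:
        return {}
    totals: Counter = Counter()
    for labels in per_frame_labels:
        totals.update(labels)
    return {name: total / num_frames for name, total in totals.items()}


# Made-up predictions for four frames: 4, 5, 4, and 0 surfers detected.
frames: List[List[str]] = [
    ["surfer"] * 4,
    ["surfer"] * 5,
    ["surfer"] * 4,
    [],
]
averages = average_class_counts(frames)  # 13 detections over 4 frames
```

Averaging over many frames smooths out individual misses and false positives, which is why a fractional count is a reasonable estimate of how crowded the spot is.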

Going through all the frames, there are a few where the model predicts a surfer where there isn’t one. Adding more training data will help reduce these false positive predictions.

Conclusion

We showed how to collect data from a live-stream surf cam, how to train and iterate on an object detection model to detect the surfers, and finally how to deploy that model and call it using the landingai-python library. As a next step you could train the model on several surf locations to quickly scope out which ones are busy, or you could track a location over time to see when it’s most or least busy. Begin building your model for free on landing.ai. Take a deep dive into the Python library and create an application of your own!