Effective deployment of deep learning models is a critical yet often overlooked aspect of building scalable AI solutions. It is more than a technical task; it is a strategic necessity. A well-executed deployment ensures that models run efficiently, scale with demand, and integrate seamlessly with existing systems. Poor deployment can cause performance bottlenecks, security vulnerabilities, and integration issues that keep the model from delivering its intended results.

LandingAI users have been using OCR through the LandingLens API for tasks like text recognition from images and document digitization. Continuing our commitment to making deployment more flexible for varying enterprise needs, we are launching an Optical Character Recognition (OCR) model that can be deployed through Docker and LandingEdge. This OCR Docker integration enables enterprises to deploy OCR models securely and efficiently within their own environments, reducing deployment risk and optimizing the model’s performance in real-world applications.

In this blog, we will explore how users can implement the LandingLens OCR capability via Docker to streamline text recognition workflows at scale.

[Note] The LandingLens Enterprise plan is required to run OCR through Docker and LandingEdge. Contact us to learn more about the LandingLens Enterprise plan.

Why Use Docker for OCR Deployment?

Docker deployment relies on containerization, which provides a consistent and portable way to run applications, including Visual AI models. A container packages the application with everything it needs to run, such as system libraries, the runtime, environment variables, and application dependencies, so it behaves the same way across different environments. As a result, one system can run multiple deep learning applications side by side without them interfering with each other.

This approach is especially useful in real-world use cases where organizations need a reliable OCR deployment for extracting text from images with AI-powered models. Here’s when Docker deployment is ideal for OCR:


- For Consistent Environments: Docker ensures that your model runs consistently across different environments, from development to production, without worrying about underlying infrastructure variations.

- When You Need Very Low Latency: Docker is useful when every millisecond of performance matters. Running the container on a local machine avoids the network round trip to a cloud service for sending images and retrieving results.

- When Scalability Is Key: Docker makes it easy to scale deployments horizontally by quickly spinning up multiple containers to handle increased load.

- In Network-Constrained Environments: If internet access is limited or unreliable, Docker allows you to deploy models locally, avoiding reliance on cloud services.

- When Security Is a Priority: Docker provides isolated environments, enhancing security by preventing potential conflicts with other applications and protecting your model.
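To make the scalability point concrete: you could start several copies of the OCR container on different host ports (for example `-p 8000:8000` for one and `-p 8001:8000` for another) and spread images across them. The sketch below shows only the client-side round-robin assignment; the port list and the `assign_round_robin` helper are hypothetical, and a production setup would more likely put a load balancer or an orchestrator in front of the containers.

```python
from itertools import cycle


def assign_round_robin(image_paths, ports, host="localhost"):
    """Pair each image with an OCR endpoint, cycling through the containers.

    Hypothetical helper: assumes one OCR container per host port, each
    exposing the same /images endpoint.
    """
    endpoints = cycle(f"http://{host}:{port}/images" for port in ports)
    return [(path, next(endpoints)) for path in image_paths]


if __name__ == "__main__":
    images = ["label_1.png", "label_2.png", "label_3.png"]
    for path, url in assign_round_robin(images, ports=[8000, 8001]):
        print(path, "->", url)
```

Each `(path, url)` pair can then be sent with the same request code used later in this post, for example from a thread pool so the containers work in parallel.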

Workflow: Run OCR on Docker

This example guides you through a few simple steps to start using OCR with Docker:

1. Install Docker and Python

- Download and Install Docker: Download Docker Desktop for your operating system (Windows, macOS, or Linux).

- Set Up Docker: Follow the installation instructions and make sure Docker is running correctly on your machine. You can verify the installation by running `docker --version` in your terminal.

- Install Python: The inference script in this guide is written in Python and uses the `requests` library (`pip install requests`). Install Python if you don’t have it yet.
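If you prefer to script the checks in step 1, here is a minimal sketch using only the Python standard library. It looks for the `docker` executable on your `PATH`, runs `docker --version`, and reports the running Python version. The `check_prerequisites` name is illustrative and not part of any LandingAI tooling.

```python
import shutil
import subprocess
import sys


def check_prerequisites():
    """Report whether Docker is on PATH and which Python version is running."""
    report = {"python": sys.version.split()[0], "docker": None}
    docker_path = shutil.which("docker")
    if docker_path is not None:
        # Same check as typing `docker --version` in a terminal
        result = subprocess.run(
            [docker_path, "--version"], capture_output=True, text=True
        )
        if result.returncode == 0:
            report["docker"] = result.stdout.strip()
    return report


if __name__ == "__main__":
    for tool, version in check_prerequisites().items():
        print(f"{tool}: {version or 'not found'}")
```

Note that `docker --version` only confirms the Docker CLI is installed; `docker info` fails if the Docker daemon itself is not running, so it is a stronger check before you launch the container.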

2. Obtain Your LandingAI OCR Activation Key:

- Retrieve Your Key: Using OCR in Docker requires an activation key. If you’ve purchased the OCR add-on and need your activation key, please contact support@landing.ai.

3. Pull the Docker Image and Launch Docker Container:

- Open Terminal: Access your terminal or command line interface.

- Run the Container: Use the following command to start the LandingAI OCR Docker container. Docker downloads (pulls) the image automatically if it is not already on your machine. OCR is available in LandingAI Docker v2.9.1 and later:

```shell
docker run -e LANDING_LICENSE_KEY="your_activation_key" -p 8000:8000 public.ecr.aws/landing-ai/deploy:latest run-ocr
```

Replace your_activation_key with your actual OCR activation key.

- Now you can run inference against your Docker deployment container from Python.
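To keep the activation key out of your shell history, you could store it in an environment variable and assemble the same `docker run` command from Python. This is a minimal sketch: the `build_ocr_command` and `start_ocr_container` helpers and the `host_port` parameter are hypothetical, while the image name, the `LANDING_LICENSE_KEY` variable, the container port 8000, and the `run-ocr` argument come from the command above.

```python
import os
import subprocess

IMAGE = "public.ecr.aws/landing-ai/deploy:latest"


def build_ocr_command(host_port=8000):
    """Build the `docker run` argument list for the OCR container.

    `-e LANDING_LICENSE_KEY` without a value tells Docker to copy the
    variable from the caller's environment, so the key itself never
    appears in the command line.
    """
    return [
        "docker", "run",
        "-e", "LANDING_LICENSE_KEY",
        "-p", f"{host_port}:8000",  # host port -> container port 8000
        IMAGE,
        "run-ocr",
    ]


def start_ocr_container(host_port=8000):
    """Start the container in the foreground (stop it with Ctrl+C)."""
    if not os.environ.get("LANDING_LICENSE_KEY"):
        raise RuntimeError("Set LANDING_LICENSE_KEY to your OCR activation key first.")
    subprocess.run(build_ocr_command(host_port), check=True)
```

Usage: export `LANDING_LICENSE_KEY` in your shell, then call `start_ocr_container()`. Passing a different `host_port` (for example 8001) is one way to run a second container next to the first.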

4. Verify That the Container Is Running

- Check Status: Ensure the container is running with the `docker ps` command.

- Confirm Port Mapping: Verify that port 8000 is mapped correctly (the PORTS column of `docker ps` should include `8000->8000/tcp`), as this is where the OCR service will be accessible.
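Besides `docker ps`, you can confirm from the client side that something is listening on the mapped port before sending images. The sketch below only opens a TCP connection with Python’s `socket` module; it does not prove that the OCR service is ready to accept requests, and the `port_is_open` name is illustrative.

```python
import socket


def port_is_open(host="localhost", port=8000, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable
        return False


if __name__ == "__main__":
    status = "reachable" if port_is_open() else "not reachable"
    print(f"OCR port 8000 is {status}")
```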

You can find more information on using the LandingAI Docker solution here: Deploy with Docker.

Make Inference Calls with LandingAI OCR

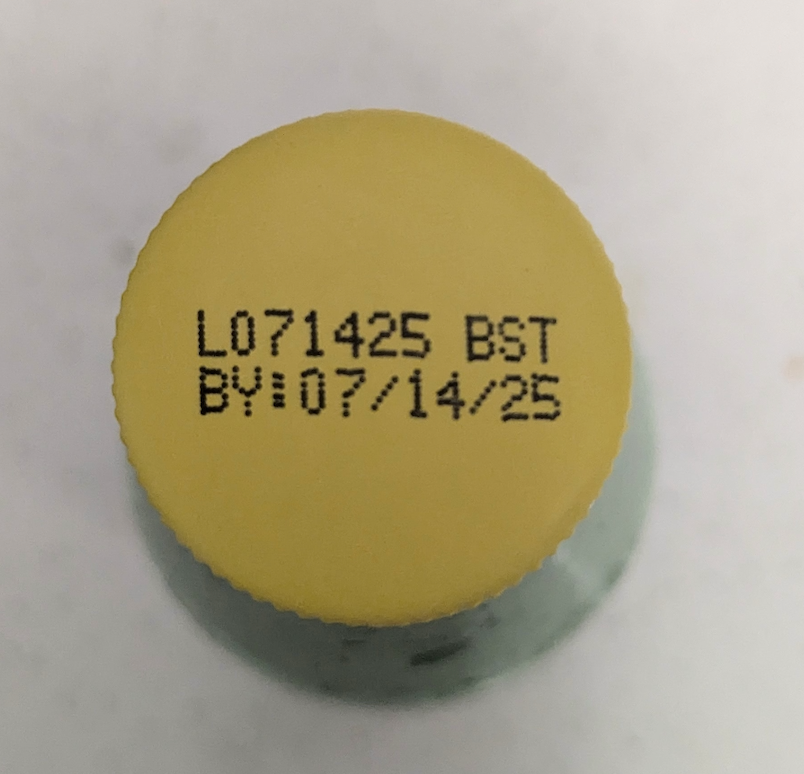

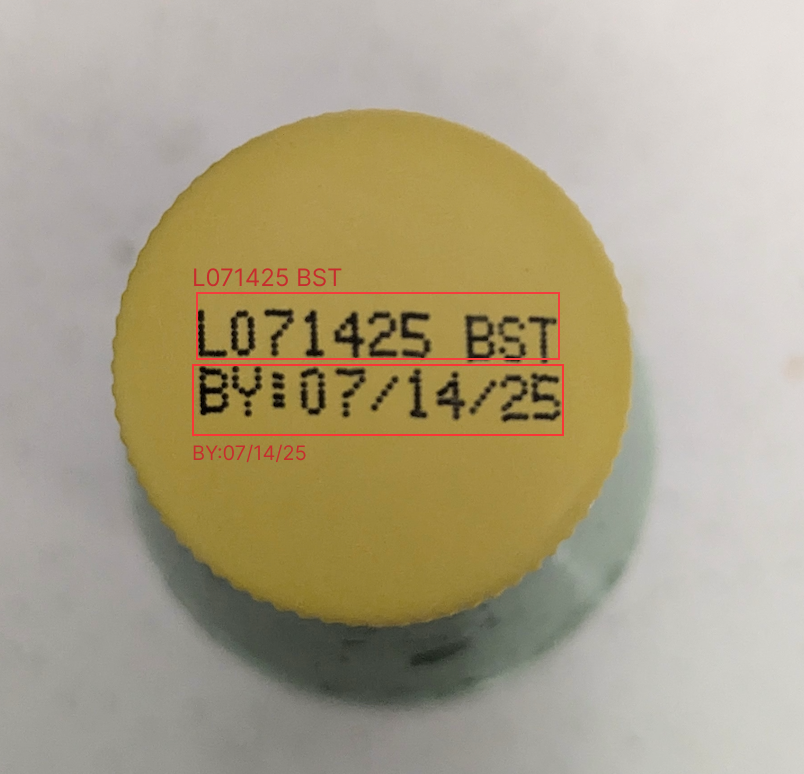

Once the Docker container with the LandingAI OCR model is up and running, you can use the model to perform inference on images. As an example, let’s use an image of a printed label where the goal is to find the “best before” date of the product.

With only a few lines of code, you can start running inference. The following script sends an image to the OCR model, checks the response, and prints the detected text predictions.

```python
import requests

# OCR service URL: the container from step 3 maps host port 8000 and serves
# the /images endpoint (plain HTTP on localhost is assumed here)
ocr_url = "http://localhost:8000/images"

# Path to the image you want to analyze
image_path = "your/file/path"

# Open the image file in binary mode and send it to the /images endpoint
with open(image_path, "rb") as image_file:
    files = {"file": image_file}
    # The timeout keeps the script from hanging if the service is unreachable
    response = requests.post(ocr_url, files=files, timeout=60)

if response.status_code == 200:
    # Parse the JSON response and extract the predictions
    result = response.json()
    predictions = result.get("predictions", [])
    # Print the raw predictions (text, score, and location of each region)
    print(predictions)
else:
    print("Error:", response.status_code, response.text)
```

Here’s the printed output of the OCR model’s predictions (the parsed JSON response):

```python
[
    {'text': 'Best By: 06/17/2026', 'score': 0.97393847, 'location': [
        {'x': 193, 'y': 438},
        {'x': 194, 'y': 386},
        {'x': 485, 'y': 391},
        {'x': 484, 'y': 443}
    ]},
    {'text': 'C101 06172402: 14', 'score': 0.96005297, 'location': [
        {'x': 189, 'y': 475},
        {'x': 192, 'y': 426},
        {'x': 485, 'y': 440},
        {'x': 483, 'y': 488}
    ]}
]
```
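Each `location` lists the four corner points of a detected text region. If you need a simple bounding box, or want to ignore low-confidence reads, a small post-processing step helps. This sketch assumes the prediction structure shown above (`text`, `score`, `location`); the 0.9 threshold and the `summarize_predictions` and `bounding_box` names are arbitrary choices for illustration. Because the corners can describe a slightly rotated quadrilateral, the box computed here is the axis-aligned rectangle that encloses them.

```python
def bounding_box(points):
    """Axis-aligned box (x_min, y_min, x_max, y_max) around corner points."""
    xs = [p["x"] for p in points]
    ys = [p["y"] for p in points]
    return min(xs), min(ys), max(xs), max(ys)


def summarize_predictions(predictions, min_score=0.9):
    """Keep predictions at or above min_score, with text, score, and box."""
    return [
        {
            "text": p["text"],
            "score": p["score"],
            "box": bounding_box(p["location"]),
        }
        for p in predictions
        if p.get("score", 0.0) >= min_score
    ]


if __name__ == "__main__":
    sample = [
        {"text": "Best By: 06/17/2026", "score": 0.97393847,
         "location": [{"x": 193, "y": 438}, {"x": 194, "y": 386},
                      {"x": 485, "y": 391}, {"x": 484, "y": 443}]},
    ]
    print(summarize_predictions(sample))
    # prints [{'text': 'Best By: 06/17/2026', 'score': 0.97393847, 'box': (193, 386, 485, 443)}]
```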

For the example use case, we will update the code to extract the specific text after the “Best By:” characters:

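```python
# Continues the script above: reuses `response` from the request


def extract_best_by_date(texts):
    """Return the text after "Best By:" in the first matching string."""
    for text in texts:
        if "Best By:" in text:
            # Keep the part after "Best By:" and strip leading/trailing whitespace
            return text.split("Best By:")[-1].strip()
    return "Date not found"


if response.status_code == 200:
    predictions = response.json().get("predictions", [])
    # Collect the recognized text from each prediction
    extracted_texts = [prediction["text"] for prediction in predictions]
    # Extract the specific text after "Best By:"
    best_by_date = extract_best_by_date(extracted_texts)
    print(best_by_date)
else:
    print("Error:", response.status_code, response.text)
```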

OCR Result:

The script outputs only the text we were looking for: 06/17/2026
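Matching on the literal “Best By:” prefix works for this label, but OCR output can vary in spacing or capitalization. One possible refinement, sketched below, uses a regular expression to find an MM/DD/YYYY date after a “best by” or “best before” phrase and converts it to a `datetime.date`, so you can, for example, flag expired products. The pattern, the date format, and the `parse_best_by` and `is_expired` helpers are assumptions based on this sample label; adjust them to your packaging.

```python
import re
from datetime import date, datetime

# Assumed label format: "Best By" / "Best Before", optional colon, then MM/DD/YYYY
BEST_BY_PATTERN = re.compile(
    r"best\s*(?:by|before)\s*:?\s*(\d{2}/\d{2}/\d{4})", re.IGNORECASE
)


def parse_best_by(texts):
    """Return the first best-by date found in the OCR texts, or None."""
    for text in texts:
        match = BEST_BY_PATTERN.search(text)
        if match:
            return datetime.strptime(match.group(1), "%m/%d/%Y").date()
    return None


def is_expired(best_by, today=None):
    """True if the best-by date is earlier than today (or the given date)."""
    today = today or date.today()
    return best_by < today


if __name__ == "__main__":
    texts = ["Best By: 06/17/2026", "C101 06172402: 14"]
    best_by = parse_best_by(texts)
    print(best_by)  # prints 2026-06-17
    print(is_expired(best_by, today=date(2025, 1, 1)))  # prints False
```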

Done! We have successfully set up a Docker container and used the LandingAI OCR model to run inference on an image!

Conclusion

By running the LandingAI OCR model in Docker, users can easily perform text extraction tasks such as detecting a product’s “best before” date. For more complex cases where the text’s location varies, combining LandingLens computer vision models, such as an object detection model with the OCR model, offers even greater precision and automation. This approach supports a wide range of real-world use cases that require text recognition from images, from labeling and packaging to documentation and compliance. The same setup works in both development and production environments, ensuring consistency and reliability across deployments.
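The conclusion mentions combining an object detection model with OCR when the text’s position varies. One simple way to connect the two, sketched here under assumptions, is to keep only the OCR results whose center falls inside a region returned by the detector. The detection box format `(x_min, y_min, x_max, y_max)` and the `texts_in_region` helper are hypothetical; check the actual output format of your LandingLens object detection model before adapting this.

```python
def center(points):
    """Mean of the corner points of one OCR text region."""
    xs = [p["x"] for p in points]
    ys = [p["y"] for p in points]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def texts_in_region(ocr_predictions, region):
    """Return OCR texts whose center lies inside region = (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = region
    selected = []
    for pred in ocr_predictions:
        cx, cy = center(pred["location"])
        if x0 <= cx <= x1 and y0 <= cy <= y1:
            selected.append(pred["text"])
    return selected
```

An alternative design is to crop each detected region from the image and send only the crop to the OCR container, which reduces the amount of unrelated text the OCR model has to read.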

Start for free at app.landing.ai and check out more information on OCR and Deploy with Docker.