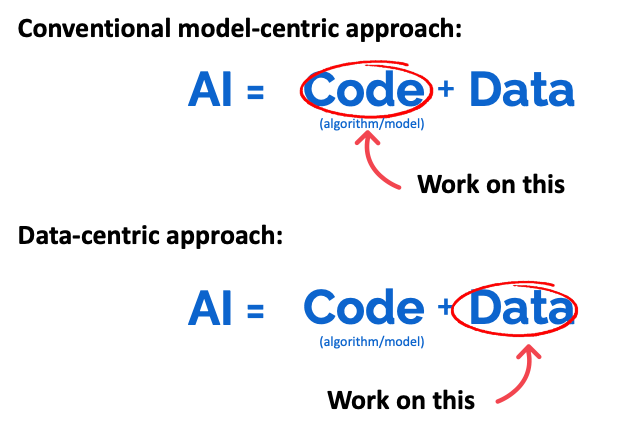

AI systems are built using code (which implements a learning algorithm) and data (used to train the system). For many years, the conventional approach in AI was to download some dataset and work on the code. Thanks to this paradigm of development, for many applications today, the code aspect of an AI system is mostly a solved problem. You can download a model from GitHub that works well enough for your project. Rather than spend time on the algorithm, in many instances it’s now more useful to work on the data — by systematically iterating on the dataset using data-engineering processes and principles.

Data-Centric AI is an emerging approach to building AI systems. The evolution of a new approach typically begins with a handful of experts applying the techniques intuitively. As these experts discuss and publish their ideas, the principles become more widespread. Finally, tools are developed that make applying them more systematic and available to everyone.

In the case of Data-Centric AI, we are now firmly in the second phase: these principles are becoming more widespread, and more people have the mindset of working with data systematically. I started talking about Data-Centric AI in a YouTube video on March 24, 2021, and since then I’ve noticed the phrase popping up on more and more corporate websites. I hope that these companies, and many others, will create tools that enable many people to apply these ideas more systematically.

I would like to share my top five tips for Data-Centric AI development, illustrated with data-labeling examples, so you can apply these principles yourself. The tips use vision applications as a running example, but they apply more broadly to applications built on other kinds of unstructured data, such as audio and text.

Tip 1: Make the labels y consistent

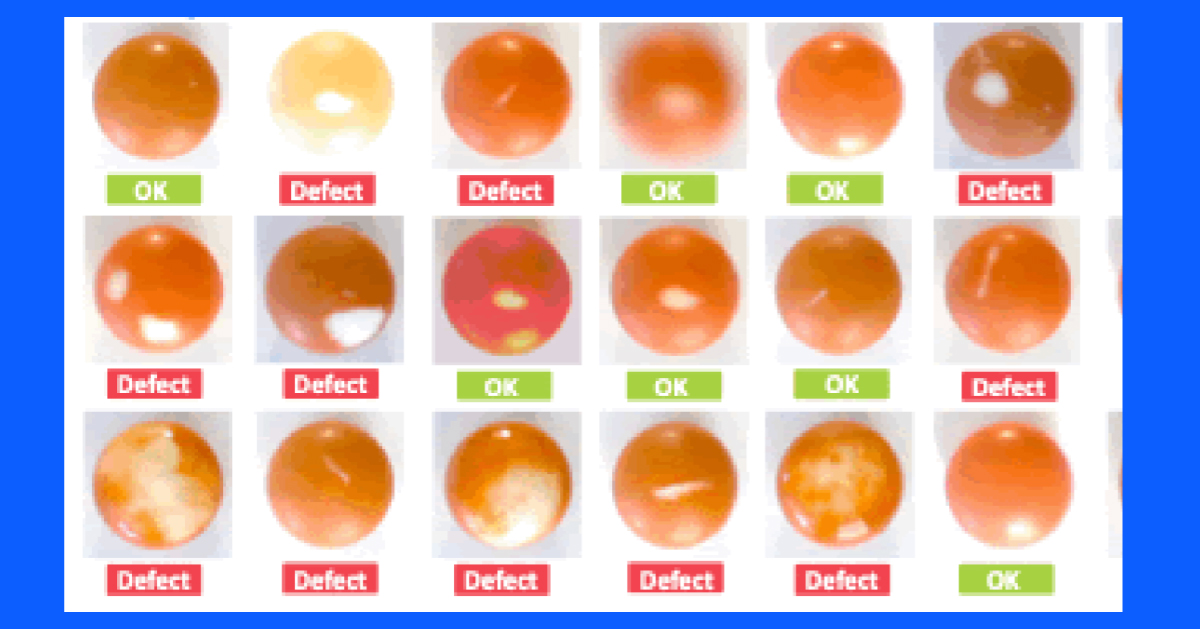

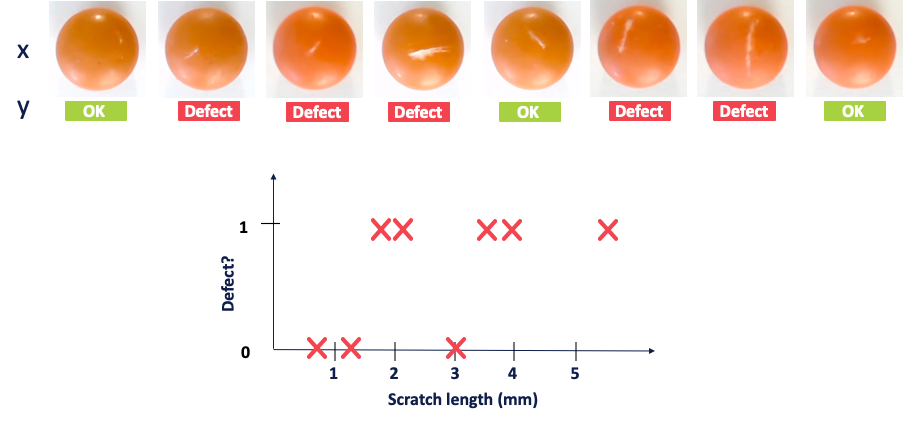

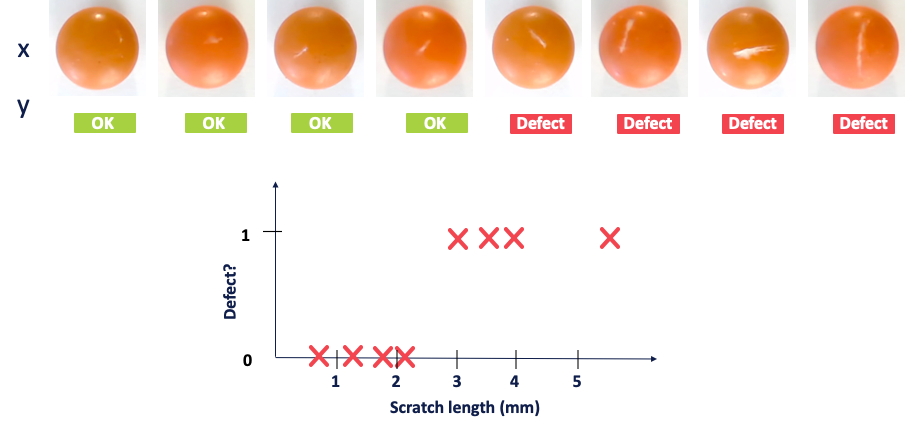

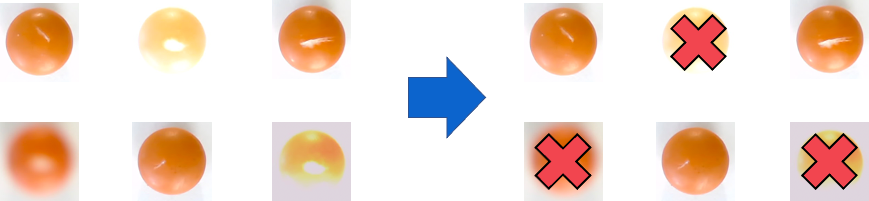

It is much easier for a learning algorithm to learn a task if there is some deterministic (that is, non-random) function mapping the inputs x to the outputs y, and the labels are consistent with this function. If you can engineer a dataset like this, you can often get higher accuracy with a smaller dataset. Say, for instance, you’re helping a pharmaceutical company inspect pills for defects such as scratches, and you label the defects through visual examination, like this:

If you were to plot scratch length against whether or not there’s a defect, this dataset would look pretty inconsistent: y does not appear to be a deterministic function of x. You see this all the time, even with expert inspectors, because they often disagree with one another. If you instead sort all the images in order of increasing scratch length and relabel them so that those above a certain threshold are labeled as a scratch, you get a much more consistent dataset. Changes to the data like this make it easier for the learning algorithm to make accurate predictions.
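A minimal sketch of this relabeling step, assuming each image has already been reduced to a single measured scratch length (a simplification; real images rarely reduce to one feature) and that a threshold has already been chosen. The helper names `count_inconsistent_pairs` and `relabel_by_threshold`, the units, and the sample values are all hypothetical. The first function counts violations of a "longer scratch means defect" rule; the second applies the threshold so the labels become a deterministic function of scratch length:

```python
from typing import List, Tuple

# Each sample is (scratch_length, label), where label 1 = defect, 0 = OK.
# Units and values are hypothetical.
Sample = Tuple[float, int]


def count_inconsistent_pairs(samples: List[Sample]) -> int:
    """Count pairs where a shorter scratch is labeled a defect (1) while a
    longer scratch is labeled OK (0). A rule of the form "defect if the
    scratch is long enough" would produce zero such pairs."""
    count = 0
    for len_a, label_a in samples:
        for len_b, label_b in samples:
            if len_a < len_b and label_a == 1 and label_b == 0:
                count += 1
    return count


def relabel_by_threshold(samples: List[Sample], threshold: float) -> List[Sample]:
    """Sort by increasing scratch length, then label every sample at or
    above the threshold as a defect and every sample below it as OK."""
    ordered = sorted(samples, key=lambda s: s[0])
    return [(length, int(length >= threshold)) for length, _ in ordered]


if __name__ == "__main__":
    # Hypothetical visual-inspection labels that disagree with one another.
    labels = [(0.2, 0), (0.4, 1), (0.5, 0), (0.9, 1), (1.1, 0), (1.5, 1)]
    print(count_inconsistent_pairs(labels))  # 3
    relabeled = relabel_by_threshold(labels, threshold=0.8)
    print([y for _, y in relabeled])  # [0, 0, 0, 1, 1, 1]
    print(count_inconsistent_pairs(relabeled))  # 0
```

Choosing the threshold itself is a labeling decision, which is exactly the kind of call Tip 3 suggests writing down.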

Tip 2: Use multiple labelers to spot inconsistencies

If you suspect that labelers are inconsistent in how they annotate the data, it helps to have two labelers label the same example. If the first labeler annotates a defect as a chip while the second annotates it as a scratch, you know there’s at least one inconsistency in your dataset. Other common inconsistencies in vision applications include bounding box size, number of bounding boxes, and label names. If you can identify these inconsistencies and clean them up, your algorithm’s performance can improve significantly.
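One way to surface the inconsistencies listed above is to diff two labelers’ annotations image by image. The sketch below is hypothetical: it assumes each annotation is a list of `(class_name, box)` pairs with boxes as `(x_min, y_min, x_max, y_max)`, pairs boxes simply by list order (a real tool would match boxes first), and uses intersection-over-union (IoU) with an assumed cutoff of 0.5 to flag boxes whose size or position differs:

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Annotations = Dict[str, List[Tuple[str, Box]]]  # image_id -> [(class, box)]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def find_disagreements(first: Annotations, second: Annotations,
                       min_iou: float = 0.5) -> Dict[str, List[str]]:
    """Return {image_id: [reasons]} for every image where the labelers differ."""
    issues: Dict[str, List[str]] = {}
    for image_id in sorted(set(first) | set(second)):
        a, b = first.get(image_id, []), second.get(image_id, [])
        reasons = []
        if len(a) != len(b):
            reasons.append("box count")
        else:
            # Simplifying assumption: the i-th box of each labeler is the same object.
            for (name_a, box_a), (name_b, box_b) in zip(a, b):
                if name_a != name_b:
                    reasons.append(f"label name: {name_a} vs {name_b}")
                if iou(box_a, box_b) < min_iou:
                    reasons.append("box size/position")
        if reasons:
            issues[image_id] = reasons
    return issues


if __name__ == "__main__":
    labeler_1 = {
        "pill_01": [("chip", (10, 10, 20, 20))],
        "pill_02": [("scratch", (0, 0, 10, 4))],
        "pill_03": [("scratch", (5, 5, 15, 9))],
    }
    labeler_2 = {
        "pill_01": [("scratch", (10, 10, 20, 20))],  # different class name
        "pill_02": [("scratch", (0, 0, 10, 4)), ("scratch", (20, 20, 30, 24))],
        "pill_03": [("scratch", (5, 5, 35, 9))],  # much wider box
    }
    for image_id, reasons in find_disagreements(labeler_1, labeler_2).items():
        print(image_id, reasons)
```

Each flagged image is a candidate for the ambiguous-example hunt described in Tip 3.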

Tip 3: Repeatedly clarify labeling instructions by tracking down ambiguous examples

When you are trying to streamline your data-labeling process, identifying ambiguous examples helps you clarify instructions for labeling edge cases. Your labeling instructions are your single source of truth, and they should illustrate each concept with examples. In these instructions, show some examples of obviously scratched pills, as well as borderline cases, near-misses, and any other confusing examples. It is important to actively hunt for labels that are either ambiguous or inconsistent, like those described in Tip 2, and then record a definitive labeling decision in your documentation. Remember: a decision is always better than no decision. A well-constructed labeling instructions document significantly increases label quality.
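A minimal sketch of the "hunt for ambiguous labels" step, assuming several labelers have voted on each example (every example has at least one vote). It flags examples whose most common label received less than an assumed 75% of the votes, sorts the most contested first, and lists the ones the instructions document does not yet decide. The function names, the agreement cutoff, and the idea of keying the guide by example ID are all hypothetical illustrations, not a prescribed workflow:

```python
from collections import Counter
from typing import Dict, List, Tuple

Flagged = Tuple[str, float, Dict[str, int]]  # (example_id, majority_share, counts)


def rank_ambiguous(votes: Dict[str, List[str]],
                   min_agreement: float = 0.75) -> List[Flagged]:
    """Flag examples whose most common label got less than `min_agreement`
    of the votes, most ambiguous (lowest agreement) first."""
    flagged = []
    for example_id, labels in votes.items():
        counts = Counter(labels)
        majority_share = counts.most_common(1)[0][1] / len(labels)
        if majority_share < min_agreement:
            flagged.append((example_id, majority_share, dict(counts)))
    flagged.sort(key=lambda item: (item[1], item[0]))
    return flagged


def undecided(flagged: List[Flagged], guide: Dict[str, str]) -> List[str]:
    """Ambiguous examples that the labeling guide has no decision for yet."""
    return [example_id for example_id, _, _ in flagged if example_id not in guide]


if __name__ == "__main__":
    votes = {
        "pill_07": ["scratch", "scratch", "scratch", "scratch"],
        "pill_12": ["scratch", "chip", "scratch", "ok"],
        "pill_19": ["chip", "scratch", "chip", "scratch"],
    }
    flagged = rank_ambiguous(votes)
    for example_id, share, counts in flagged:
        print(example_id, share, counts)
    # The guide records one definitive decision per ambiguous example.
    guide = {"pill_12": "scratch"}
    print(undecided(flagged, guide))  # ['pill_19']
```

The flagged examples are natural candidates for the borderline cases and near-misses that the instructions should show.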

Tip 4: Toss out bad examples. More data is not always better!

If you were given the six pill examples (shown on the left) and asked to train a learning algorithm to detect defects on pills, you might only confuse the algorithm, because the data quality is so poor. Instead, if you spot that some of the images are poorly focused or have bad contrast, you should toss them out. Even if this leaves you with only half the training set, your algorithm will be better able to detect the defect you are looking for.
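Spotting poorly focused or low-contrast images can be partly automated. The sketch below swaps in two common heuristics that the text does not name: the variance of a discrete Laplacian as a sharpness score (blurry images have weak edges, so the score is low) and RMS contrast, the standard deviation of intensities scaled to [0, 1]. Images are assumed to be grayscale lists of pixel rows with values 0 to 255, and both thresholds are hypothetical values that would need tuning on real data; a production pipeline would more likely use an array library:

```python
from statistics import pstdev, pvariance
from typing import List

Image = List[List[int]]  # grayscale pixel rows, values 0-255


def laplacian_variance(img: Image) -> float:
    """Variance of a 4-neighbour discrete Laplacian over interior pixels.
    Low values suggest a poorly focused image."""
    responses = []
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            responses.append(
                img[r - 1][c] + img[r + 1][c] + img[r][c - 1] + img[r][c + 1]
                - 4 * img[r][c]
            )
    return float(pvariance(responses))


def rms_contrast(img: Image) -> float:
    """Standard deviation of intensities rescaled to [0, 1]."""
    return float(pstdev([p / 255 for row in img for p in row]))


def keep_image(img: Image, min_sharpness: float = 100.0,
               min_contrast: float = 0.05) -> bool:
    """Keep an image only if it passes both (hypothetical) quality thresholds."""
    return laplacian_variance(img) >= min_sharpness and rms_contrast(img) >= min_contrast


if __name__ == "__main__":
    sharp = [[255 * ((r + c) % 2) for c in range(8)] for r in range(8)]
    flat = [[128] * 8 for _ in range(8)]                 # no contrast at all
    ramp = [[10 * c for c in range(8)] for _ in range(8)]  # no edges, like defocus
    dataset = {"sharp": sharp, "flat": flat, "ramp": ramp}
    kept = [name for name, img in dataset.items() if keep_image(img)]
    print(kept)  # ['sharp']
```

Automated filters like this only shortlist candidates; it is still worth looking at what gets discarded before dropping it from the training set.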

In many applications, it is also critical to improve the imaging system design. In this example, I would go back to the person who designed the imaging system and politely ask them to refocus the camera and adjust the lighting or contrast. You’ll find you get a much better result this way. Sometimes, the path to a successful application is not to take bad data and work on it; it can be much more efficient to fix the image acquisition, get better images, and make the problem easier for the algorithm.

Tip 5: Use error analysis to focus on a subset of data to improve

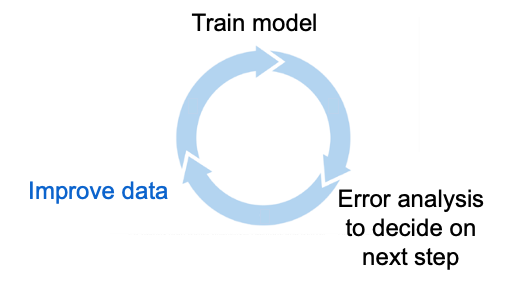

When you’re given a dataset, there are often many different aspects of the data that need work, whether that means cleaning up labels, setting thresholds, or capturing better data. Trying to improve all of these aspects at once can be too broad and unfocused an effort. Instead, repeated error analysis is critical for deciding which part of the learning algorithm’s performance is unacceptable or which part you most want to improve.

For example, if you decide that performance on scratches is not good enough, you can focus your attention on the subset of images with scratches.
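A simple form of error analysis is to tag each evaluation example by hand with the categories it belongs to and compute the error rate per tag. The sketch below assumes such tags already exist; the tag names (including the hypothetical `low_contrast` tag) and the results are made up for illustration. Sorting worst first points at the subset to work on next:

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Result = Tuple[List[str], bool]  # (tags for one example, model was correct?)


def error_rate_by_tag(results: List[Result]) -> List[Tuple[str, float, int]]:
    """Return (tag, error_rate, example_count) rows, worst error rate first.
    An example with several tags counts toward each of them."""
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for tags, correct in results:
        for tag in tags:
            totals[tag] += 1
            if not correct:
                errors[tag] += 1
    table = [(tag, errors[tag] / totals[tag], totals[tag]) for tag in totals]
    table.sort(key=lambda row: (-row[1], row[0]))
    return table


if __name__ == "__main__":
    results = [
        (["scratch"], False),
        (["scratch"], False),
        (["scratch", "low_contrast"], True),
        (["chip"], True),
        (["chip"], False),
        (["chip"], True),
        (["chip"], True),
    ]
    for tag, rate, count in error_rate_by_tag(results):
        print(f"{tag:<13} error rate {rate:.2f} over {count} examples")
    # The worst slice (here "scratch") is the subset to focus on next.
    scratch_subset = [r for r in results if "scratch" in r[0]]
```

Per-tag counts matter too: a high error rate on a handful of examples may be less urgent than a moderate one on a large slice.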

Summary:

One of the common misconceptions about Data-Centric AI is that it’s all about data pre-processing. It is actually about the iterative workflow of developing a machine learning system, in which we improve the data over and over again. One of the most powerful ways to improve your AI system is to engineer the data to fix the problems identified by error analysis, and try training the model again.

Here is a recap of my top Data-Centric AI tips:

Tip 1: Make the labels y consistent

Tip 2: Use multiple labelers to spot inconsistencies

Tip 3: Clarify labeling instructions by tracking down ambiguous examples

Tip 4: Toss out noisy examples. More data is not always better!

Tip 5: Use error analysis to focus on a subset of data to improve

I hope you can apply these principles to the AI systems you are working on and share them with others as well!