I’m sure—like myself and millions of others—you’ve spent hours copying and pasting the same information into job applications 🥱🎯.

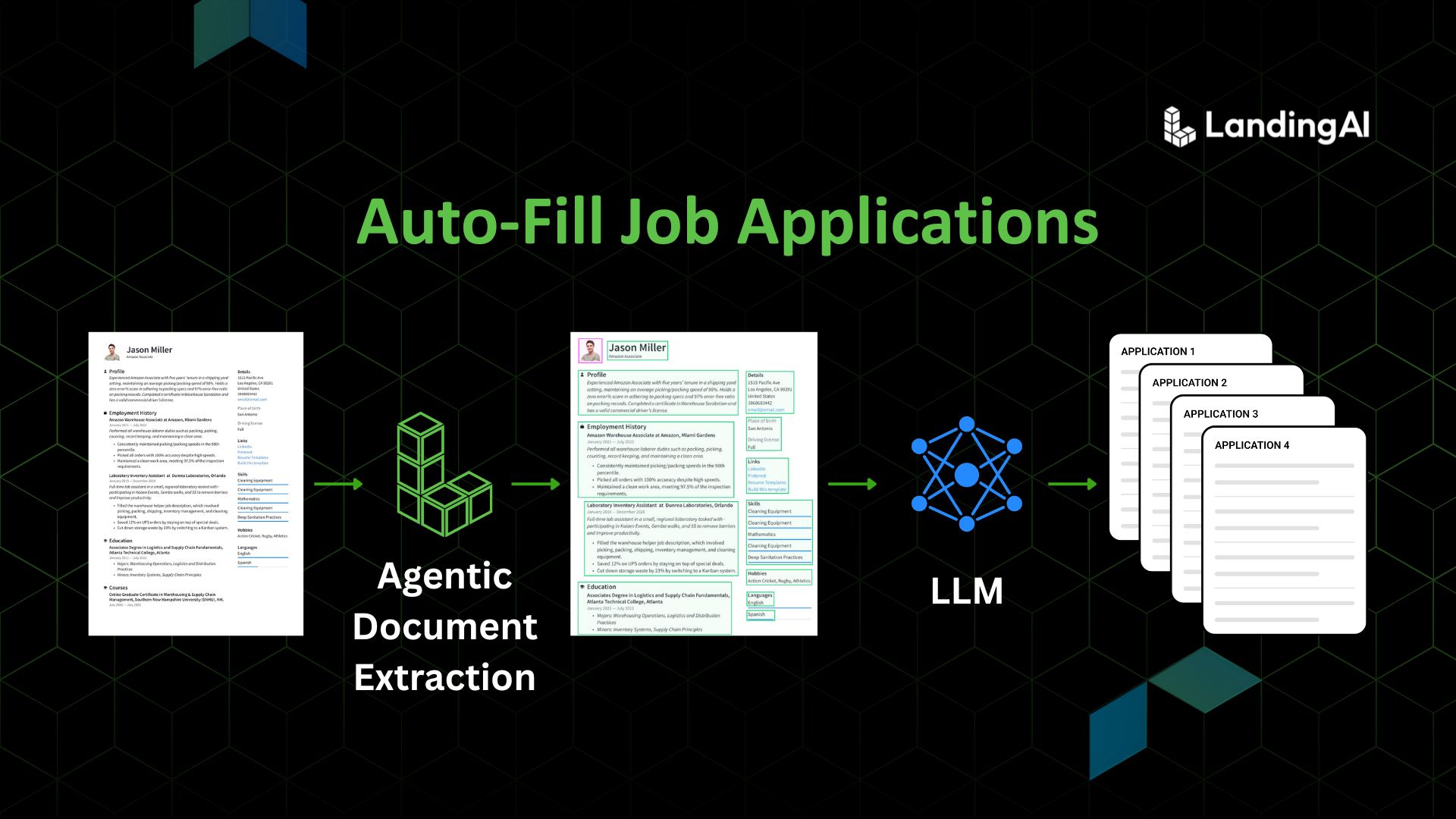

In this tutorial, we’ll walk through how to build an automation tool that extracts key data from your resume and fills out LinkedIn job applications for you, automatically! Thanks to LLMs, “developer” is now a title accessible to nearly everyone. What an amazing time to be alive! 🌍✨ You can now combine the coding superpowers of LLMs with LandingAI’s Agentic Document Extraction (ADE) to create your own intelligent auto-apply tool. ADE handles even complex resume layouts, so you can eliminate repetitive form-filling and redirect your energy toward what actually matters: understanding job requirements, tailoring your applications, and positioning yourself as the ideal candidate 💪.

Let’s dive in! 🚀

Quick Overview (What You’ll Learn)

In this tutorial, you’ll:

- Build a Django application that parses resume PDFs with high accuracy using LandingAI’s Agentic Document Extraction (ADE).

- Get a quick recap of ADE and why accuracy matters. Remember: if you can’t get correct data out of your resume’s complex layout to begin with, you can’t build anything on top of it.

- Create an automation script with Playwright and OpenAI’s Computer-Use API to fill out LinkedIn job applications.

- Brainstorm ways to extend this foundation and build more.

The Problem: Time Wasted on Repetitive Tasks

The typical job application process involves repeatedly entering the same information across multiple platforms. As AI enthusiasts, we know this is exactly the kind of task AI should handle for us. By automating the mundane parts of job applications, we can focus on the aspects that require human intelligence and creativity – AI is here to make us not only more productive but also more creative 🙂

Quick Recap of Agentic Document Extraction

The LandingAI Agentic Document Extraction (ADE) API pulls structured data out of visually complex documents—think tables, diagrams, form fields, and charts—and returns a hierarchical JSON with exact element locations.

You might wonder, what’s agentic in this, and how is it new? Document intelligence has been around for a while. OCR has existed for decades, and multimodal LLMs have recently been used for document extraction. But can you really trust them to reliably extract information from your documents? Every year, trillions of dollars are lost due to downstream errors in document processing—errors that stem from inaccurate document understanding and extraction in the first place.

That’s why, at LandingAI, we worked hard to address this problem with a fresh approach. The solution lies in an agentic method of extraction—one that incorporates visual reasoning and fine-grained spatial localization.

In the context of ADE, the “Agentic” approach means taking a complex problem, breaking it into smaller parts, and applying reasoning to examine and connect these components to solve the overall task. ADE uses exactly this strategy for document extraction. Instead of passively reading text, it decomposes the extraction task into subtasks, applies reasoning, and assembles the results for accurate output.

Unlike traditional OCR methods that focus solely on text extraction, ADE was built from the ground up to interpret documents as visual representations of information. After all, document layouts were originally designed for human readers—not for OCR engines or LLMs. Large multimodal models often struggle with the fine-grained spatial localization needed for accurate document extraction, especially in complex domains like finance, healthcare, and beyond.

If you’d like to read more, check out my post comparing LLM+OCR-based approaches with ADE.

Incorrect information hurts your chances of getting calls from recruiters in an already competitive market. This is why extraction accuracy is so important here. Now you know why it made sense to take a moment to recap ADE and highlight our focus on accuracy 😉.

Building the Solution

Step 1: Understanding Project Structure

Our application follows this organized structure:

job_application_automator/
├── users/                  # User authentication and profile management
│   ├── views.py            # User-related views
│   ├── models.py           # User models and custom auth
│   ├── forms.py            # Forms for user authentication and registration
│   ├── admin.py            # Admin interface customization
│   └── urls.py             # URL routes for the user app
├── doc_parser/             # Resume parsing and LinkedIn automation
│   ├── views.py            # Handles file uploads and parsing
│   ├── models.py           # Stores parsed resume data
│   ├── utils.py            # Helper functions (LandingAI and OpenAI integration)
│   ├── operator_script.py  # Core automation script for LinkedIn (Playwright + OpenAI)
│   ├── linkedin_script.py  # Additional LinkedIn automation utilities
│   └── urls.py             # URL routes for resume parsing
└── templates/              # HTML templates for user interactions
    ├── users/              # Templates related to user activities (login, registration)
    └── resume_parser/      # Templates related to resume parsing and display

Step 2: Parse Resume Data with LandingAI Agentic Document Extraction Python Library

We’ll use LandingAI’s Agentic Document Extraction Python library (agentic-doc) to convert unstructured resume PDFs into structured data:

pip install agentic-doc

The initial parsing code in doc_parser/utils.py:

import json

import openai
from agentic_doc.parse import parse
from django.conf import settings


def extract_resume_data(file_path):
    """
    Parse resume and return structured data
    """
    try:
        # First, use LandingAI's ADE to extract markdown.
        # parse() returns one parsed document per input file.
        resume_markdown = parse(file_path)[0].markdown

        # Then enhance with GPT-4-turbo for structured JSON
        client = openai.OpenAI(api_key=settings.OPENAI_API_KEY)
        prompt = f"""
        Convert this resume markdown into structured JSON:
        {resume_markdown}
        Format as JSON with fields: name, email, phone, skills, experience, education.
        """
        response = client.chat.completions.create(
            model="gpt-4-turbo",
            messages=[
                {"role": "system", "content": "Extract structured resume data from markdown for use in job portals and databases."},
                {"role": "user", "content": prompt},
            ],
        )
        structured_data = response.choices[0].message.content.strip()
        structured_json = json.loads(structured_data)
        return structured_json
    except Exception as e:
        # Covers ADE failures, OpenAI errors, and replies that are not valid JSON
        print(f"Error parsing resume: {e}")
        return None

This two-step process ensures we get highly structured, reliable data:

- ADE extracts the raw content from PDFs

- GPT-4-turbo converts the markdown into clean, structured JSON
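One practical wrinkle in the second step: `json.loads` fails if the model wraps its reply in a markdown code fence, and a reply can parse cleanly while still missing a field. Below is a minimal defensive sketch in plain Python. The helper name `parse_resume_json` and the `REQUIRED_FIELDS` tuple are assumptions made for illustration (the field names come from the prompt above); they are not part of the tutorial's repository.

```python
import json
import re

# Field names taken from the prompt used in extract_resume_data
REQUIRED_FIELDS = ("name", "email", "phone", "skills", "experience", "education")

# Build the fence marker programmatically to keep this listing easy to embed
FENCE = "`" * 3


def parse_resume_json(raw_text):
    """Parse model output into a dict, tolerating a surrounding markdown fence."""
    text = raw_text.strip()
    # Remove an opening fence (optionally tagged "json") and the closing fence
    match = re.match(rf"^{FENCE}(?:json)?\s*(.*?)\s*{FENCE}$", text, flags=re.DOTALL)
    if match:
        text = match.group(1)
    data = json.loads(text)  # Raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object at the top level")
    missing = [field for field in REQUIRED_FIELDS if field not in data]
    if missing:
        raise ValueError(f"Missing fields: {', '.join(missing)}")
    return data
```

In `extract_resume_data`, a helper like this could stand in for the bare `json.loads` call, so that a malformed reply surfaces as a clear error instead of silently producing incomplete data.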

Step 3: Build the LinkedIn Automation with OpenAI’s Computer-Use API

The core automation component in doc_parser/operator_script.py leverages OpenAI’s Computer-Use API for intelligent browser interaction:

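import base64
import time

import openai
from django.conf import settings
from playwright.sync_api import sync_playwright

# Viewport size reported to the model; it must match the real page size
DISPLAY_WIDTH, DISPLAY_HEIGHT = 1024, 768
# The model names some keys differently from Playwright
KEY_MAP = {"ENTER": "Enter", "TAB": "Tab", "ESC": "Escape", "BACKSPACE": "Backspace"}


class LinkedInAutomator:
    def __init__(self):
        self.client = openai.OpenAI(api_key=settings.OPENAI_API_KEY)
        # computer-use-preview is served through the Responses API with this tool type
        self.tools = [{
            "type": "computer_use_preview",
            "display_width": DISPLAY_WIDTH,
            "display_height": DISPLAY_HEIGHT,
            "environment": "browser",
        }]

    def start_task(self, prompt):
        """Send a natural-language instruction to the computer-use model"""
        return self.client.responses.create(
            model="computer-use-preview",
            input=[{"role": "user", "content": prompt}],
            tools=self.tools,
            reasoning={"summary": "concise"},
            truncation="auto",
        )

    def execute_action(self, page, action):
        """Translate one model action into a Playwright call (common actions only)"""
        if action.type == "click":
            button = action.button if action.button in ("left", "right", "middle") else "left"
            page.mouse.click(action.x, action.y, button=button)
        elif action.type == "type":
            page.keyboard.type(action.text)
        elif action.type == "keypress":
            for key in action.keys:
                page.keyboard.press(KEY_MAP.get(key.upper(), key))
        elif action.type == "scroll":
            page.mouse.move(action.x, action.y)
            page.mouse.wheel(action.scroll_x, action.scroll_y)
        elif action.type == "wait":
            time.sleep(2)
        # Other action types (e.g. "screenshot") need no browser input

    def computer_use_loop(self, browser, page, response):
        """Process and execute browser automation commands from OpenAI"""
        while True:
            calls = [item for item in response.output if item.type == "computer_call"]
            if not calls:
                return response  # No further actions requested
            call = calls[0]
            # Execute browser commands
            self.execute_action(page, call.action)
            time.sleep(1)  # Give the page a moment to react
            screenshot = base64.b64encode(page.screenshot()).decode("utf-8")
            # Get next steps from OpenAI by sending back the new screenshot
            response = self.client.responses.create(
                model="computer-use-preview",
                previous_response_id=response.id,
                tools=self.tools,
                input=[{
                    "type": "computer_call_output",
                    "call_id": call.call_id,
                    "output": {
                        "type": "computer_screenshot",
                        "image_url": f"data:image/png;base64,{screenshot}",
                    },
                }],
                truncation="auto",
            )

    def apply_to_job(self, job_url, resume_data, credentials):
        """Automate LinkedIn job application using parsed resume data"""
        with sync_playwright() as p:
            browser = p.chromium.launch(headless=False)
            page = browser.new_page()
            page.set_viewport_size({"width": DISPLAY_WIDTH, "height": DISPLAY_HEIGHT})

            # Login to LinkedIn using OpenAI Computer-Use API.
            # The model only sees screenshots, so open the URL with Playwright first.
            page.goto("https://www.linkedin.com/login")
            login_prompt = f"""
            You are on the LinkedIn login page (https://www.linkedin.com/login).
            Enter email: {credentials['email']}
            Enter password: {credentials['password']}
            Click the Sign In button.
            Wait for the page to load completely.
            """
            response = self.computer_use_loop(browser, page, self.start_task(login_prompt))

            # Navigate to job posting and apply
            page.goto(job_url)
            apply_prompt = f"""
            The job posting at {job_url} is open in the browser.
            Look for and click the "Easy Apply" button.
            Fill out the application form with:
            - Name: {resume_data['name']}
            - Email: {resume_data['email']}
            - Phone: {resume_data['phone']}
            If there are additional fields for experience or education, fill those too.
            Click "Submit application" or "Continue" buttons as needed.
            """
            response = self.computer_use_loop(browser, page, self.start_task(apply_prompt))

            # Process results
            browser.close()
            return {"status": "success", "message": "Application submitted"}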

Automating LinkedIn Interactions (Computer-Use API)

The app leverages OpenAI’s “computer-use-preview” model to automatically interact with LinkedIn:

- Natural-language driven automation: Simply provide clear instructions to navigate pages, input credentials, and submit forms. The model interprets these instructions and returns actions (clicks, keystrokes, scrolls) that the script executes in the browser using Playwright.

- Adaptive interaction: The API can handle dynamic elements and unexpected prompts that traditional automation scripts might struggle with.
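Because the instructions are plain text, their quality depends on the data you interpolate into them. As a small sketch, the helper below builds the form-field portion of an instruction and skips values the parser did not return, rather than sending the literal text "None" to the model. The names `build_field_lines` and `FORM_FIELDS` are hypothetical and are not part of the tutorial's code.

```python
# Resume keys mapped to the labels used in the instruction text (illustrative)
FORM_FIELDS = {"name": "Name", "email": "Email", "phone": "Phone"}


def build_field_lines(resume_data):
    """Return one '- Label: value' line per field that has a non-empty string value."""
    lines = []
    for key, label in FORM_FIELDS.items():
        value = resume_data.get(key)
        if isinstance(value, str) and value.strip():
            lines.append(f"- {label}: {value.strip()}")
    if not lines:
        raise ValueError("resume_data has no usable name, email, or phone")
    return "\n".join(lines)
```

For example, `build_field_lines({"name": "Ada Lovelace", "email": "ada@example.com", "phone": None})` yields lines for the name and email only, and the result can be dropped into the "Fill out the application form with:" part of a prompt.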

The Real Value: Focus on Meaningful Work

This automation framework handles the tedious part of job applications, but that’s just the beginning. The real opportunity is to use the time you’ve saved to focus on what AI can’t do as well: strategically positioning yourself for each role.

With the time saved from automation, you could develop features that:

- Extract key requirements from job descriptions

- Compare them against your skills and experience

- Generate tailored cover letters highlighting relevant accomplishments

- Suggest resume modifications to emphasize matching qualifications

LinkedIn is Clever

LinkedIn has a bot detection system that will stop you from using computer use to keep submitting applications, so you’ll need to figure out how to deal with it (a potential next step for you to build, maybe?). That said, this workflow should work well with many other job application platforms.

Extending the Application – Your creativity is the ceiling

This automation framework is just the starting point. Once you’ve built the foundation, there’s so much more you can do to make your job application process smarter, faster, and more personalized.

Here are some ideas to take this app even further:

Tailored Cover Letter Generation: Use the extracted job description and resume data to generate custom cover letters that reflect the language and priorities of each job posting.

Smart Resume-Job Matching: Automatically compare your resume against job descriptions and highlight the most relevant skills or gaps. You could even get suggestions on what to tweak in your resume to better match each role.
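As a starting point for the matching idea, here is a deliberately simple sketch. It assumes the parsed resume exposes `skills` as a list of strings and that the job's requirements have already been extracted into a similar list; both are assumptions, and the function names are hypothetical. It uses exact, case-insensitive matching only, so a real version would likely need synonyms or embeddings.

```python
def match_skills(resume_skills, job_requirements):
    """Split job requirements into (matched, missing) using case-insensitive comparison."""
    have = {skill.strip().lower() for skill in resume_skills}
    matched = [req for req in job_requirements if req.strip().lower() in have]
    missing = [req for req in job_requirements if req.strip().lower() not in have]
    return matched, missing


def match_score(resume_skills, job_requirements):
    """Fraction of job requirements covered by the resume (0.0 when there are none)."""
    if not job_requirements:
        return 0.0
    matched, _ = match_skills(resume_skills, job_requirements)
    return len(matched) / len(job_requirements)
```

The `missing` list is the interesting output: it points at the gaps you might address in a cover letter or in a tweaked resume.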

This is the kind of project where every additional hour you put in pays off by saving dozens later—and more importantly, gets you closer to opportunities that truly fit you. So go ahead—experiment, remix, and build what you wish you had when you first started applying!

Conclusion

Accurate extraction from documents—regardless of layout complexity and while preserving key visual elements—enables us to reliably automate the repetitive and tedious parts of job applications. By combining this capability with advanced APIs like OpenAI’s Computer-Use, we’ve built a powerful automation system that takes care of the busywork, letting you focus on what truly matters: standing out as the best candidate.

What’s Next?

- Explore and build further using the code for the tutorial available on GitHub

- Start building with – LandingAI Agentic Document Extraction Python Library

- Read these related blog posts: Going Beyond OCR+LLM: Introducing Agentic Document Extraction, and Turbocharge Document Understanding Apps with Landing AI’s Agentic Document Extraction

- Jam with our Developer Community on Discord

Remember: The most valuable automation isn’t just about saving your time—it’s more about enabling you to invest that time in activities that truly matter to you!